Advanced Algorithms (Fall 2021)/Min-Cut and Max-Cut


= Graph Cut =

Let <math>G(V, E)</math> be an undirected graph. A subset <math>C\subseteq E</math> of edges is a '''cut''' of graph <math>G</math> if <math>G</math> becomes ''disconnected'' after deleting all edges in <math>C</math>.

Let <math>\{S,T\}</math> be a '''bipartition''' of <math>V</math> into nonempty subsets <math>S,T\subseteq V</math>, where <math>S\cap T=\emptyset</math> and <math>S\cup T=V</math>. A cut <math>C</math> is specified by this bipartition as

:<math>C=E(S,T)\,</math>,

where <math>E(S,T)</math> denotes the set of "crossing edges" with one endpoint in each of <math>S</math> and <math>T</math>, formally defined as

:<math>E(S,T)=\{uv\in E\mid u\in S, v\in T\}</math>.
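As a small illustration of the definition of <math>E(S,T)</math>, the following Python sketch lists the crossing edges of a bipartition. The function name <code>crossing_edges</code>, the edge-list representation and the example graph are assumptions made for this illustration only.

```python
def crossing_edges(edges, S, T):
    """Return the edges with one endpoint in S and the other in T.

    edges is a list of pairs (u, v) of an undirected (multi-)graph;
    S and T are disjoint collections of vertices.
    """
    S, T = set(S), set(T)
    return [(u, v) for (u, v) in edges
            if (u in S and v in T) or (u in T and v in S)]


# Hypothetical example: a 4-cycle 1-2-3-4 with the chord 1-3.
edges = [(1, 2), (2, 3), (3, 4), (4, 1), (1, 3)]
cut = crossing_edges(edges, {1, 2}, {3, 4})
print(len(cut), cut)
```

The size of the cut is simply the length of the returned list; parallel edges are counted separately because they appear as repeated pairs.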

Given a graph <math>G</math>, there might be many cuts in <math>G</math>, and we are interested in finding the '''minimum''' or '''maximum''' cut.

= Min-Cut =

The '''min-cut problem''', also called the '''global minimum cut problem''', is defined as follows.

{{Theorem|Min-cut problem|

*'''Input''': an undirected graph <math>G(V,E)</math>;

*'''Output''': a cut <math>C</math> in <math>G</math> with the smallest size <math>|C|</math>.

}}

Equivalently, the problem asks to find a bipartition of <math>V</math> into disjoint non-empty subsets <math>S</math> and <math>T</math> that minimizes <math>|E(S,T)|</math>.

We consider the problem in a slightly more general setting, where the input graphs <math>G</math> can be '''multi-graphs''', meaning that there could be multiple '''parallel edges''' between two vertices <math>u</math> and <math>v</math>. The cuts in multi-graphs are defined in the same way as before, and the cost of a cut <math>C</math> is given by the total number of edges (including parallel edges) in <math>C</math>. Equivalently, one may think of a multi-graph as a graph with integer edge weights, where the cost of a cut <math>C</math> is the total weight of all edges in <math>C</math>.

A canonical deterministic algorithm for this problem is through the [http://en.wikipedia.org/wiki/Max-flow_min-cut_theorem max-flow min-cut theorem]. The max-flow algorithm finds a minimum '''<math>s</math>-<math>t</math> cut''', which disconnects a '''source''' <math>s\in V</math> from a '''sink''' <math>t\in V</math>, both specified as part of the input. A global min-cut can be found by exhaustively finding the minimum <math>s</math>-<math>t</math> cut for an arbitrarily fixed source <math>s</math> and all possible sinks <math>t\neq s</math>. This takes <math>(n-1)\times</math>max-flow time, where <math>n=|V|</math> is the number of vertices.

The fastest known deterministic algorithm for the minimum cut problem on multi-graphs is the [https://en.wikipedia.org/wiki/Stoer–Wagner_algorithm Stoer–Wagner algorithm], which achieves an <math>O(mn+n^2\log n)</math> time complexity, where <math>m=|E|</math> is the total number of edges (counting the parallel edges).

If we restrict the input to '''simple graphs''' (meaning there are no parallel edges) with no edge weights, there are better algorithms. A deterministic algorithm of [https://dl.acm.org/citation.cfm?id=2746588 Ken-ichi Kawarabayashi and Mikkel Thorup], published in STOC 2015, achieves near-linear (in the number of edges) time complexity.

== Karger's ''Contraction'' algorithm ==

We will describe a simple and elegant randomized algorithm for the min-cut problem. The algorithm is due to [http://people.csail.mit.edu/karger/ David Karger].

Let <math>G(V, E)</math> be a '''multi-graph''', which allows multiple '''parallel edges''' between two distinct vertices <math>u</math> and <math>v</math> but does not allow any '''self-loops''', i.e. edges that join a vertex to itself. A multi-graph <math>G</math> can be represented by an adjacency matrix <math>A</math>, in which each non-diagonal entry <math>A(u,v)</math> takes a nonnegative integer value instead of just 0 or 1, representing the number of parallel edges between <math>u</math> and <math>v</math> in <math>G</math>, and all diagonal entries are <math>A(v,v)=0</math> (since there are no self-loops).

Given a multi-graph <math>G(V,E)</math> and an edge <math>e\in E</math>, we define the following '''contraction''' operator Contract(<math>G</math>, <math>e</math>), which transforms <math>G</math> into a new multi-graph.

{{Theorem|The contraction operator ''Contract''(<math>G</math>, <math>e</math>)|

:say <math>e=uv</math>:

:*replace <math>\{u,v\}</math> by a new vertex <math>x</math>;

:*for every edge (parallel or not) of the form <math>uw</math> or <math>vw</math> that connects one of <math>\{u,v\}</math> to a vertex <math>w\in V\setminus\{u,v\}</math>, replace it by a new edge <math>xw</math>;

:*the rest of the graph does not change.

}}

In other words, <math>Contract(G,uv)</math> merges the two vertices <math>u</math> and <math>v</math> into a new vertex <math>x</math> whose incident edges are exactly the edges incident to <math>u</math> or <math>v</math> in the original graph <math>G</math>, except for the parallel edges between <math>u</math> and <math>v</math> themselves. This is why we consider multi-graphs instead of simple graphs: even if we start with a simple graph without parallel edges, the contraction operator may create parallel edges.
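The contraction operator can be sketched on the adjacency-matrix representation described earlier. This is one possible implementation and not the one prescribed by these notes: it reuses <math>u</math> as the merged vertex <math>x</math>, and the names <code>contract</code> and <code>alive</code> (the set of vertices that still exist) are assumptions of the sketch.

```python
def contract(A, alive, u, v):
    """Contract edge uv in place; u is reused as the merged vertex x.

    A is a symmetric adjacency matrix (list of lists) whose entry A[a][b]
    counts the parallel edges between a and b; alive is the set of
    vertices that still exist. One pass over alive, so O(n) time.
    """
    assert u != v and A[u][v] > 0, "uv must be an edge of the current multi-graph"
    for w in alive:
        if w != u and w != v:
            A[u][w] += A[v][w]   # every edge vw becomes an edge xw
            A[w][u] = A[u][w]    # keep the matrix symmetric
        A[v][w] = 0              # v no longer exists; this also drops
        A[w][v] = 0              # the edges between u and v (no self-loops)
    alive.remove(v)


# Hypothetical example: triangle 0-1-2 with a pendant edge 2-3.
A = [[0, 1, 1, 0],
     [1, 0, 1, 0],
     [1, 1, 0, 1],
     [0, 0, 1, 0]]
alive = {0, 1, 2, 3}
contract(A, alive, 0, 1)
print(A[0][2])  # number of parallel edges between the merged vertex and 2
```

Contracting the edge between 0 and 1 in this simple graph creates two parallel edges between the merged vertex and vertex 2, which shows concretely how parallel edges arise.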

| | |||

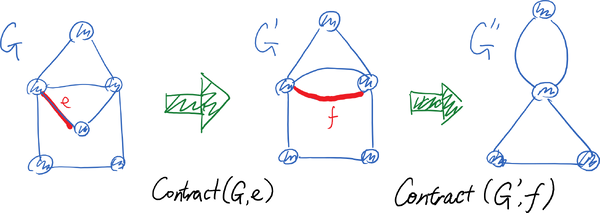

| | The contraction operator is illustrated by the following picture: | ||

[[Image:Contract.png|600px|center]] | |||

Karger's algorithm uses a simple idea:

*At each step we randomly select an edge in the current multi-graph to contract, until there are only two vertices left.

*The parallel edges between these two remaining vertices must be a cut of the original graph.

*We return this cut and hope that with a good chance it is a minimum cut.

The following is the pseudocode for Karger's algorithm.

{{Theorem|''RandomContract'' (Karger 1993)|

:'''Input:''' multi-graph <math>G(V,E)</math>;

----

:while <math>|V|>2</math> do

:* choose an edge <math>uv\in E</math> uniformly at random;

:* <math>G=Contract(G,uv)</math>;

:return <math>C=E</math> (the parallel edges between the only two vertices in <math>V</math>);

}}
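The pseudocode above can be turned into a short runnable sketch. The version below is an assumption-laden illustration rather than the implementation intended by the notes: it keeps an explicit edge list and a class label per vertex, so each contraction costs <math>O(n+m)</math> instead of <math>O(n)</math>, and it assumes the input multi-graph is connected with vertices named <math>0,1,\ldots,n-1</math>. The function name <code>random_contract</code> and the optional <code>rng</code> parameter are hypothetical.

```python
import random


def random_contract(n, edges, rng=random):
    """One run of RandomContract on a connected multi-graph.

    Vertices are 0..n-1; edges is a list of pairs (u, v), with parallel
    edges given as repeated pairs. Returns the original edges crossing
    the two vertex classes that remain at the end.
    """
    label = list(range(n))     # label[v] = class currently containing v
    current = list(edges)      # edges of the current contracted multi-graph
    classes = n
    while classes > 2:
        u, v = rng.choice(current)               # uniform over current edges
        lu, lv = label[u], label[v]
        label = [lu if x == lv else x for x in label]   # merge the two classes
        # Edges inside a class would be self-loops, so they are dropped.
        current = [(a, b) for (a, b) in current if label[a] != label[b]]
        classes -= 1
    return current


# Hypothetical example: two triangles joined by the single edge (2, 3).
bridge_graph = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
cut = random_contract(6, bridge_graph, random.Random(0))
print(len(cut), cut)
```

A single run may or may not return the minimum cut <code>[(2, 3)]</code>; the returned edge set is always a cut of the original graph.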

Another way of looking at the contraction operator Contract(<math>G</math>,<math>e</math>) is that we are dealing with classes of vertices. Let <math>V=\{v_1,v_2,\ldots,v_n\}</math> be the set of all vertices. We start with <math>n</math> vertex classes <math>S_1,S_2,\ldots, S_n</math>, with each class <math>S_i=\{v_i\}</math> containing one vertex. By calling <math>Contract(G,uv)</math>, where <math>u\in S_i</math> and <math>v\in S_j</math> for distinct <math>i\neq j</math>, we take the union of <math>S_i</math> and <math>S_j</math>. The edges in the contracted multi-graph are the edges that cross between different vertex classes.

This view of contraction is illustrated by the following picture: | |||

[[Image:Contract_class.png|600px|center]] | |||

The following claim is left as an exercise for the class: | |||

:{|border="2" width="100%" cellspacing="4" cellpadding="3" rules="all" style="margin:1em 1em 1em 0; border:solid 1px #AAAAAA; border-collapse:collapse;empty-cells:show;" | |||

| | |||

*With suitable choice of data structures, each operation <math>Contract(G,e)</math> can be implemented within running time <math>O(n)</math> where <math>n=|V|</math> is the number of vertices. | |||

|} | |||

In the above '''''RandomContract''''' algorithm, there are precisely <math>n-2</math> contractions. Therefore, we have the following time upper bound. | |||

{{Theorem|Theorem| | |||

: For any multigraph with <math>n</math> vertices, the running time of the '''''RandomContract''''' algorithm is <math>O(n^2)</math>. | |||

}} | |||

We emphasize that this is the time complexity of a ''single run'' of the algorithm: later we will see that we may need to run the algorithm many times to guarantee a desirable accuracy.

== Analysis of accuracy ==

We now analyze the performance of the above algorithm. Since the algorithm is '''''randomized''''', its output cut is a random variable even when the input is fixed, so ''the output may not always be correct''. We want to give a theoretical guarantee of the chance that the algorithm returns a correct answer on an arbitrary input. | |||

More precisely, on an arbitrarily fixed input multi-graph <math>G</math>, we want to answer the following question rigorously: | |||

:<math>p_{\text{correct}}=\Pr[\,\text{a minimum cut is returned by }RandomContract\,]\ge ?</math> | |||

To answer this question, we prove a stronger statement: for arbitrarily fixed input multi-graph <math>G</math> and a particular minimum cut <math>C</math> in <math>G</math>, | |||

:<math>p_{C}=\Pr[\,C\mbox{ is returned by }RandomContract\,]\ge ?</math> | |||

Obviously this will imply the previous lower bound for <math>p_{\text{correct}}</math> because the event in <math>p_{C}</math> implies the event in <math>p_{\text{correct}}</math>. | |||

:{|border="2" width="100%" cellspacing="4" cellpadding="3" rules="all" style="margin:1em 1em 1em 0; border:solid 1px #AAAAAA; border-collapse:collapse;empty-cells:show;" | |||

| | |||

*In above argument we use the simple law in probability that <math>\Pr[A]\le \Pr[B]</math> if <math>A\subseteq B</math>, i.e. event <math>A</math> implies event <math>B</math>. | |||

|} | |||

We introduce the following notations: | |||

*Let <math>e_1,e_2,\ldots,e_{n-2}</math> denote the sequence of random edges chosen to contract in a run of the ''RandomContract'' algorithm.

*Let <math>G_1=G</math> denote the original input multi-graph, and for <math>i=1,2,\ldots,n-2</math>, let <math>G_{i+1}=Contract(G_{i},e_i)</math> be the multi-graph after the <math>i</math>th contraction.

Obviously <math>e_1,e_2,\ldots,e_{n-2}</math> are random variables, and they are the ''only'' random choices used in the algorithm: together with the input <math>G</math>, they uniquely determine the sequence of multi-graphs <math>G_1,G_2,\ldots,G_{n-2}</math> in every iteration as well as the final output.

We now compute the probability <math>p_C</math> by decomposing it into more elementary events involving <math>e_1,e_2,\ldots,e_{n-2}</math>. This is due to the following proposition.

{{Theorem | |||

|Proposition 1| | |||

:If <math>C</math> is a minimum cut in a multi-graph <math>G</math> and <math>e\not\in C</math>, then <math>C</math> is still a minimum cut in the contracted graph <math>G'=Contract(G,e)</math>.

}} | |||

{{Proof| | |||

We first observe that contraction will never create new cuts: every cut in the contracted graph <math>G'</math> must also be a cut in the original graph <math>G</math>. | |||

We then observe that a cut <math>C</math> in <math>G</math> "survives" in the contracted graph <math>G'</math> if and only if the contracted edge <math>e\not\in C</math>. | |||

Both observations are easy to verify by the definition of contraction operator (in particular, easier to verify if we take the vertex class interpretation). The detailed proofs are left as an exercise. | |||

}} | |||

Recall that <math>e_1,e_2,\ldots,e_{n-2}</math> denote the sequence of random edges chosen to contract in a running of ''RandomContract'' algorithm. | |||

By Proposition 1, the event <math>\mbox{``}C\mbox{ is returned by }RandomContract\mbox{''}\,</math> is equivalent to the event <math>\mbox{``}e_i\not\in C\mbox{ for all }i=1,2,\ldots,n-2\mbox{''}</math>. Therefore: | |||

:<math> | |||

\begin{align} | |||

p_C | |||

&= | |||

\Pr[\,C\mbox{ is returned by }{RandomContract}\,]\\ | |||

&= | |||

\Pr[\,e_i\not\in C\mbox{ for all }i=1,2,\ldots,n-2\,]\\ | |||

&= | |||

\prod_{i=1}^{n-2}\Pr[e_i\not\in C\mid \forall j<i, e_j\not\in C]. | |||

\end{align} | |||

</math> | |||

The last equation is due to the so called '''chain rule''' in probability. | |||

:{|border="2" width="100%" cellspacing="4" cellpadding="3" rules="all" style="margin:1em 1em 1em 0; border:solid 1px #AAAAAA; border-collapse:collapse;empty-cells:show;" | |||

| | |||

*The '''chain rule''', also known as the '''law of progressive conditioning''', is the following proposition: for a sequence of events (not necessarily independent) <math>A_1,A_2,\ldots,A_n</math>,

::<math>\Pr[\forall i, A_i]=\prod_{i=1}^n\Pr[A_i\mid \forall j<i, A_j]</math>.

:It is a simple consequence of the definition of conditional probability. By definition of conditional probability,

::<math>\Pr[A_n\mid \forall j<n, A_j]=\frac{\Pr[\forall i, A_i]}{\Pr[\forall j<n, A_j]}</math>,

:and equivalently we have

::<math>\Pr[\forall i, A_i]=\Pr[\forall j<n, A_j]\Pr[A_n\mid \forall j<n, A_j]</math>.

:Recursively applying this to <math>\Pr[\forall j<n, A_j]</math>, we obtain the chain rule.

|} | |||

Back to the analysis of probability <math>p_C</math>. | |||

Now our task is to give lower bound to each <math>p_i=\Pr[e_i\not\in C\mid \forall j<i, e_j\not\in C]</math>. The condition <math>\mbox{``}\forall j<i, e_j\not\in C\mbox{''}</math> means the min-cut <math>C</math> survives all first <math>i-1</math> contractions <math>e_1,e_2,\ldots,e_{i-1}</math>, which due to Proposition 1 means that <math>C</math> is also a min-cut in the multi-graph <math>G_i</math> obtained from applying the first <math>(i-1)</math> contractions. | |||

Then the conditional probability <math>p_i=\Pr[e_i\not\in C\mid \forall j<i, e_j\not\in C]</math> is the probability that no edge in <math>C</math> is hit when a uniform random edge in the current multi-graph is chosen assuming that <math>C</math> is a minimum cut in the current multi-graph. Intuitively this probability should be bounded from below, because as a min-cut <math>C</math> should be sparse among all edges. This intuition is justified by the following proposition. | |||

{{Theorem | |||

|Proposition 2| | |||

:If <math>C</math> is a min-cut in a multi-graph <math>G(V,E)</math>, then <math>|E|\ge \frac{|V||C|}{2}</math>. | |||

}} | |||

{{Proof| | |||

:It must hold that the degree of each vertex <math>v\in V</math> is at least <math>|C|</math>, or otherwise the set of edges incident to <math>v</math> forms a cut of size smaller than <math>|C|</math> which separates <math>\{v\}</math> from the rest of the graph, contradicting that <math>C</math> is a min-cut. And the bound <math>|E|\ge \frac{|V||C|}{2}</math> follows directly from applying the [https://en.wikipedia.org/wiki/Handshaking_lemma handshaking lemma] to the fact that every vertex in <math>G</math> has degree at least <math>|C|</math>. | |||

}} | |||

Let <math>V_i</math> and <math>E_i</math> denote the vertex set and edge set of the multi-graph <math>G_i</math> respectively, and recall that <math>G_i</math> is the multi-graph obtained from applying first <math>(i-1)</math> contractions. Obviously <math>|V_{i}|=n-i+1</math>. And due to Proposition 2, <math>|E_i|\ge \frac{|V_i||C|}{2}</math> if <math>C</math> is still a min-cut in <math>G_i</math>. | |||

The probability <math>p_i=\Pr[e_i\not\in C\mid \forall j<i, e_j\not\in C]</math> can be computed as | |||

:<math> | |||

\begin{align} | |||

p_i | |||

&=1-\frac{|C|}{|E_i|}\\ | |||

&\ge1-\frac{2}{|V_i|}\\ | |||

&=1-\frac{2}{n-i+1} | |||

\end{align},</math> | |||

where the inequality is due to Proposition 2. | |||

We now can put everything together. We arbitrarily fix the input multi-graph <math>G</math> and any particular minimum cut <math>C</math> in <math>G</math>. | |||

:<math>\begin{align} | |||

p_{\text{correct}} | |||

&=\Pr[\,\text{a minimum cut is returned by }RandomContract\,]\\ | |||

&\ge | |||

\Pr[\,C\mbox{ is returned by }{RandomContract}\,]\\ | |||

&= | |||

\Pr[\,e_i\not\in C\mbox{ for all }i=1,2,\ldots,n-2\,]\\ | |||

&= | |||

\prod_{i=1}^{n-2}\Pr[e_i\not\in C\mid \forall j<i, e_j\not\in C]\\ | |||

&\ge | |||

\prod_{i=1}^{n-2}\left(1-\frac{2}{n-i+1}\right)\\ | |||

&= | |||

\prod_{k=3}^{n}\frac{k-2}{k}\\ | |||

&= \frac{2}{n(n-1)}. | |||

\end{align}</math> | |||
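To see why the last product collapses, it may help to write out its factors explicitly (a worked expansion added for illustration, for <math>n\ge 5</math>):

:<math>\prod_{k=3}^{n}\frac{k-2}{k}=\frac{1\cdot 2\cdot 3\cdots (n-2)}{3\cdot 4\cdots (n-2)\,(n-1)\,n}=\frac{1\cdot 2}{(n-1)\,n}.</math>

Every factor from <math>3</math> to <math>n-2</math> appears in both the numerator and the denominator and cancels. For example, for <math>n=5</math> the product is <math>\frac{1}{3}\cdot\frac{2}{4}\cdot\frac{3}{5}=\frac{1}{10}=\frac{2}{5\cdot 4}</math>.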

This gives us the following theorem. | |||

{{Theorem | |||

|Theorem| | |||

: For any multigraph with <math>n</math> vertices, the ''RandomContract'' algorithm returns a minimum cut with probability at least <math>\frac{2}{n(n-1)}</math>. | |||

}} | |||

At first glance this seems to be a miserable chance of success. However, notice that there may be exponentially many cuts in a graph (because potentially every nonempty proper subset <math>S\subset V</math> corresponds to a cut <math>C=E(S,\overline{S})</math>), and Karger's algorithm effectively reduces this exponential-sized space of feasible solutions to a quadratic-sized one, an exponential improvement!

We can run ''RandomContract'' independently <math>t=\frac{n(n-1)\ln n}{2}</math> times and return the smallest cut found. The probability that a minimum cut is found is at least:

:<math>\begin{align} | |||

&\quad 1-\Pr[\,\mbox{all }t\mbox{ independent runs of } RandomContract\mbox{ fail to find a min-cut}\,] \\

&= 1-\Pr[\,\mbox{a single run of }{RandomContract}\mbox{ fails}\,]^{t} \\

&\ge 1- \left(1-\frac{2}{n(n-1)}\right)^{\frac{n(n-1)\ln n}{2}} \\ | |||

&\ge 1-\frac{1}{n}. | |||

\end{align}</math> | |||

Recall that a single run of the ''RandomContract'' algorithm takes <math>O(n^2)</math> time. Altogether this gives us a randomized algorithm that runs in time <math>O(n^4\log n)</math> and finds a minimum cut [https://en.wikipedia.org/wiki/With_high_probability '''with high probability'''].
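The repetition bound can be checked numerically. The sketch below rounds <math>t</math> up to an integer (an assumption, since the formula for <math>t</math> need not be an integer) and evaluates the upper bound <math>\left(1-\frac{2}{n(n-1)}\right)^{t}</math> on the probability that all runs fail; the function names are hypothetical.

```python
import math


def repetitions(n):
    """Number of independent runs t = n(n-1) ln(n) / 2, rounded up."""
    return math.ceil(n * (n - 1) * math.log(n) / 2)


def failure_bound(n):
    """Upper bound (1 - 2/(n(n-1)))^t on the probability that all t runs fail."""
    p = 2 / (n * (n - 1))
    return (1 - p) ** repetitions(n)


for n in (5, 10, 100):
    print(n, repetitions(n), failure_bound(n), 1 / n)
```

For each <math>n</math> tried, the printed failure bound stays below <math>1/n</math>, in line with the inequality <math>(1-x)^{t}\le e^{-xt}</math>.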

== A Corollary by the Probabilistic Method ==

The analysis of Karger's algorithm implies the following combinatorial proposition for the number of distinct minimum cuts in a graph. | |||

{{Theorem|Corollary| | |||

:For any graph <math>G(V,E)</math> of <math>n</math> vertices, the number of distinct minimum cuts in <math>G</math> is at most <math>\frac{n(n-1)}{2}</math>. | |||

}} | |||

{{Proof| | |||

Let <math>\mathcal{C}</math> denote the set of all minimum cuts in <math>G</math>. For each min-cut <math>C\in\mathcal{C}</math>, let <math>A_C</math> denote the event "<math>C</math> is returned by ''RandomContract''", whose probability is given by | |||

:<math>p_C=\Pr[A_C]\,</math>. | |||

Clearly we have: | |||

* for any distinct <math>C,D\in\mathcal{C}</math>, <math>A_C\,</math> and <math>A_{D}\,</math> are '''disjoint events'''; and | |||

* the union <math>\bigcup_{C\in\mathcal{C}}A_C</math> is precisely the event "a minimum cut is returned by ''RandomContract''", whose probability is given by | |||

::<math>p_{\text{correct}}=\Pr[\,\text{a minimum cut is returned by } RandomContract\,]</math>. | |||

Due to the [https://en.wikipedia.org/wiki/Probability_axioms#Third_axiom '''additivity of probability'''], it holds that | |||

:<math> | |||

p_{\text{correct}}=\sum_{C\in\mathcal{C}}\Pr[A_C]=\sum_{C\in\mathcal{C}}p_C. | |||

</math> | |||

By the analysis of Karger's algorithm, we know <math>p_C\ge\frac{2}{n(n-1)}</math>. And since <math>p_{\text{correct}}</math> is a well defined probability, due to the [https://en.wikipedia.org/wiki/Probability_axioms#Second_axiom '''unitarity of probability'''], it must hold that <math>p_{\text{correct}}\le 1</math>. Therefore, | |||

:<math>1\ge p_{\text{correct}}=\sum_{C\in\mathcal{C}}p_C\ge|\mathcal{C}|\frac{2}{n(n-1)}</math>, | |||

which means <math>|\mathcal{C}|\le\frac{n(n-1)}{2}</math>. | |||

}} | |||

Note that the statement of this theorem has no randomness at all, while the proof consists of a randomized procedure. This is an example of [http://en.wikipedia.org/wiki/Probabilistic_method the probabilistic method]. | |||
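The bound <math>\frac{n(n-1)}{2}</math> is attained by the cycle on <math>n</math> vertices, where removing any two edges gives a minimum cut. The brute-force count below is an illustration added here, feasible only for very small <math>n</math>; the function name <code>count_min_cuts</code> and the vertex naming <math>0,\ldots,n-1</math> are assumptions of the sketch.

```python
from itertools import combinations


def count_min_cuts(n, edges):
    """Return (min-cut size, number of distinct minimum cuts) by brute force.

    Vertices are 0..n-1 and edges is a list of pairs. Every bipartition
    {S, T} is enumerated once by forcing vertex 0 into S.
    """
    best, cuts = None, set()
    for r in range(n - 1):                      # |S| = r + 1 < n, so T is nonempty
        for extra in combinations(range(1, n), r):
            S = {0, *extra}
            cut = frozenset(i for i, (u, v) in enumerate(edges)
                            if (u in S) != (v in S))
            if best is None or len(cut) < best:
                best, cuts = len(cut), {cut}
            elif len(cut) == best:
                cuts.add(cut)
    return best, len(cuts)


n = 5
cycle = [(i, (i + 1) % n) for i in range(n)]
print(count_min_cuts(n, cycle), n * (n - 1) // 2)
```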

== Fast Min-Cut ==

In the analysis of ''RandomContract'' algorithm, recall that we lower bound the probability <math>p_C</math> that a min-cut <math>C</math> is returned by ''RandomContract'' by the following '''telescopic product''': | |||

:<math>p_C\ge\prod_{i=1}^{n-2}\left(1-\frac{2}{n-i+1}\right)</math>. | |||

Here the index <math>i</math> corresponds to the <math>i</math>th contraction. The factor <math>\left(1-\frac{2}{n-i+1}\right)</math> is decreasing in <math>i</math>, which means:

* The probability of success only becomes bad when the graph is "too contracted", that is, when the number of remaining vertices is small.

This motivates us to consider the following alteration of the algorithm: first use random contractions to reduce the number of vertices to a moderately small number, and then recursively find a min-cut in this smaller instance. By itself this is just a restatement of what we have been doing. Inspired by the idea of boosting the accuracy via independent repetition, we instead apply the recursion to ''two'' smaller instances generated independently.

The algorithm obtained in this way is called ''FastCut''. We first define a procedure that randomly contracts edges until <math>t</math> vertices are left.

{{Theorem|''RandomContract''<math>(G, t)</math>| | |||

:'''Input:''' multi-graph <math>G(V,E)</math>, and integer <math>t\ge 2</math>; | |||

---- | |||

:while <math>|V|>t</math> do | |||

:* choose an edge <math>uv\in E</math> uniformly at random; | |||

:* <math>G=Contract(G,uv)</math>; | |||

:return <math>G</math>; | |||

}} | |||

The ''FastCut'' algorithm is recursively defined as follows. | |||

{{Theorem|''FastCut''<math>(G)</math>| | |||

:'''Input:''' multi-graph <math>G(V,E)</math>; | |||

---- | |||

:if <math>|V|\le 6</math> then return a mincut by brute force; | |||

:else let <math>t=\left\lceil1+|V|/\sqrt{2}\right\rceil</math>; | |||

:: <math>G_1=RandomContract(G,t)</math>; | |||

:: <math>G_2=RandomContract(G,t)</math>; | |||

::return the smaller one of <math>FastCut(G_1)</math> and <math>FastCut(G_2)</math>; | |||

}} | |||
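The two procedures above can be sketched in Python as follows. This is an illustrative sketch under stated assumptions, not the notes' reference implementation: edges are stored as triples <code>(u, v, original_edge)</code> so that the returned cut can be reported in terms of the input graph, the input is assumed to be connected with at least two vertices, and the simple edge-list contraction used here is slower than the <math>O(n)</math> contraction assumed in the analysis. All function names are hypothetical.

```python
import itertools
import math
import random


def contract_to(vertices, edges, t, rng):
    """RandomContract(G, t): contract random edges until t vertices remain."""
    vertices, edges = set(vertices), list(edges)
    while len(vertices) > t:
        a, b, _ = rng.choice(edges)             # uniform over current edges
        vertices.discard(b)                     # b is merged into a
        renamed = ((a if u == b else u, a if v == b else v, e)
                   for (u, v, e) in edges)
        edges = [(u, v, e) for (u, v, e) in renamed if u != v]  # drop self-loops
    return vertices, edges


def brute_force_min_cut(vertices, edges):
    """Try every bipartition of a small vertex set; return a smallest cut."""
    first, *rest = list(vertices)
    best = None
    for r in range(len(rest)):                  # S never equals the whole vertex set
        for extra in itertools.combinations(rest, r):
            S = {first, *extra}
            cut = [e for (u, v, e) in edges if (u in S) != (v in S)]
            if best is None or len(cut) < len(best):
                best = cut
    return best


def fast_cut(vertices, edges, rng):
    """FastCut(G): recurse on two independently contracted copies of G."""
    n = len(vertices)
    if n <= 6:
        return brute_force_min_cut(vertices, edges)
    t = math.ceil(1 + n / math.sqrt(2))
    cuts = [fast_cut(*contract_to(vertices, edges, t, rng), rng) for _ in range(2)]
    return min(cuts, key=len)


# Hypothetical example: two copies of K5 joined by the single edge (4, 5).
pairs = [(u, v) for u in range(5) for v in range(u + 1, 5)]
pairs += [(u, v) for u in range(5, 10) for v in range(u + 1, 10)]
pairs += [(4, 5)]
edges = [(u, v, (u, v)) for (u, v) in pairs]
print(fast_cut(range(10), edges, random.Random(1)))
```

Since <math>\lceil 1+n/\sqrt{2}\rceil<n</math> for every <math>n\ge 7</math>, each recursive call works on a strictly smaller graph, so the recursion terminates.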

As before, all <math>G</math> are multigraphs. | |||

Fix a min-cut <math>C</math> in the original multigraph <math>G</math>. By the same analysis as in the case of ''RandomContract'', we have | |||

:<math> | |||

\begin{align} | |||

&\Pr[C\text{ survives all contractions in }RandomContract(G,t)]\\ | |||

= | |||

&\prod_{i=1}^{n-t}\Pr[C\text{ survives the }i\text{-th contraction}\mid C\text{ survives the first }(i-1)\text{ contractions}]\\

\ge | |||

&\prod_{i=1}^{n-t}\left(1-\frac{2}{n-i+1}\right)\\ | |||

= | |||

&\prod_{k=t+1}^{n}\frac{k-2}{k}\\ | |||

= | |||

&\frac{t(t-1)}{n(n-1)}. | |||

\end{align} | |||

</math> | |||

When <math>t=\left\lceil1+n/\sqrt{2}\right\rceil</math>, this probability is at least <math>1/2</math>. The value of <math>t</math> is chosen precisely to make this probability at least <math>1/2</math>, which is crucial in the following analysis of accuracy.
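The claim that this probability is at least <math>1/2</math> can be verified in one line (a short derivation added for completeness). Since <math>t\ge 1+n/\sqrt{2}</math>, we have <math>t-1\ge n/\sqrt{2}</math> and <math>t\ge n/\sqrt{2}</math>, hence

:<math>\frac{t(t-1)}{n(n-1)}\ge\frac{(n/\sqrt{2})^2}{n(n-1)}=\frac{n}{2(n-1)}\ge\frac{1}{2}.</math>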

We denote by <math>A</math> and <math>B</math> the following events: | |||

:<math> | |||

\begin{align} | |||

A: | |||

&\quad C\text{ survives all contractions in }RandomContract(G,t);\\ | |||

B: | |||

&\quad\text{size of min-cut is unchanged after }RandomContract(G,t); | |||

\end{align} | |||

</math> | |||

Clearly, <math>A</math> implies <math>B</math> and by above analysis <math>\Pr[B]\ge\Pr[A]\ge\frac{1}{2}</math>. | |||

We denote by <math>p(n)</math> the lower bound on the probability that <math>FastCut(G)</math> succeeds for a multigraph of <math>n</math> vertices, that is | |||

:<math> | |||

p(n) | |||

=\min_{G: |V|=n}\Pr[\,FastCut(G)\text{ returns a min-cut in }G\,]. | |||

</math> | |||

Suppose that <math>G</math> is the multigraph that achieves the minimum in above definition. The following recurrence holds for <math>p(n)</math>. | |||

:<math> | |||

\begin{align} | |||

p(n) | |||

&= | |||

\Pr[\,FastCut(G)\text{ returns a min-cut in }G\,]\\ | |||

&= | |||

\Pr[\,\text{ a min-cut of }G\text{ is returned by }FastCut(G_1)\text{ or }FastCut(G_2)\,]\\ | |||

&\ge | |||

1-\left(1-\Pr[B\wedge FastCut(G_1)\text{ returns a min-cut in }G_1\,]\right)^2\\ | |||

&\ge | |||

1-\left(1-\Pr[A\wedge FastCut(G_1)\text{ returns a min-cut in }G_1\,]\right)^2\\ | |||

&= | |||

1-\left(1-\Pr[A]\Pr[ FastCut(G_1)\text{ returns a min-cut in }G_1\mid A]\right)^2\\ | |||

&\ge | |||

1-\left(1-\frac{1}{2}p\left(\left\lceil1+n/\sqrt{2}\right\rceil\right)\right)^2, | |||

\end{align} | |||

</math> | |||

where <math>A</math> and <math>B</math> are defined as above such that <math>\Pr[A]\ge\frac{1}{2}</math>. | |||

The base case is that <math>p(n)=1</math> for <math>n\le 6</math>. By induction it is easy to prove that | |||

:<math> | |||

p(n)=\Omega\left(\frac{1}{\log n}\right). | |||

</math> | |||

Recall that we can implement an edge contraction in <math>O(n)</math> time, thus it is easy to verify the following recursion of time complexity: | |||

:<math> | |||

T(n)=2T\left(\left\lceil1+n/\sqrt{2}\right\rceil\right)+O(n^2), | |||

</math> | |||

where <math>T(n)</math> denotes the running time of <math>FastCut(G)</math> on a multigraph <math>G</math> of <math>n</math> vertices. | |||

By induction with the base case <math>T(n)=O(1)</math> for <math>n\le 6</math>, it is easy to verify that <math>T(n)=O(n^2\log n)</math>. | |||

{{Theorem | |||

|Theorem| | |||

: For any multigraph with <math>n</math> vertices, the ''FastCut'' algorithm returns a minimum cut with probability <math>\Omega\left(\frac{1}{\log n}\right)</math> in time <math>O(n^2\log n)</math>. | |||

}} | |||

At this point, we see that the name ''FastCut'' is misleading: a single run is actually slower than a single run of the original ''RandomContract'' algorithm; only the chance of successfully finding a min-cut is much better (improved from <math>\Omega(1/n^2)</math> to <math>\Omega(1/\log n)</math>).

Given any input multi-graph, running the ''FastCut'' algorithm independently <math>O((\log n)^2)</math> times and returning the smallest cut found gives an algorithm which runs in time <math>O(n^2\log^3n)</math> and returns a min-cut with probability <math>1-O(1/n)</math>, i.e. with high probability.

Recall that the running time of the best known deterministic algorithm for min-cut on multi-graphs is <math>O(mn+n^2\log n)</math>. On dense graphs, the randomized algorithm outperforms the best known deterministic algorithm.

Finally, Karger further improved this and obtained a near-linear (in the number of edges) time [https://arxiv.org/abs/cs/9812007 randomized algorithm] for minimum cut in multi-graphs.

= Max-Cut =

The '''maximum cut problem''', in short the '''max-cut problem''', is defined as follows.

{{Theorem|Max-cut problem| | |||

*'''Input''': an undirected graph <math>G(V,E)</math>; | |||

*'''Output''': a bipartition of <math>V</math> into disjoint subsets <math>S</math> and <math>T</math> that maximizes <math>|E(S,T)|</math>. | |||

}} | |||

The problem is a typical MAX-CSP, an optimization version of the [https://en.wikipedia.org/wiki/Constraint_satisfaction_problem constraint satisfaction problem]. An instance of CSP consists of:

* a set of variables <math>x_1,x_2,\ldots,x_n</math> usually taking values from some finite domain;

* a sequence of constraints (predicates) <math>C_1,C_2,\ldots, C_m</math> defined on those variables.

The MAX-CSP asks to find an assignment of values to variables <math>x_1,x_2,\ldots,x_n</math> which maximizes the number of satisfied constraints.

In particular, when the variables <math>x_1,x_2,\ldots,x_n</math> take Boolean values <math>\{0,1\}</math> and every constraint is a binary constraint <math>\cdot\neq\cdot</math> of the form <math>x_i\neq x_j</math>, the MAX-CSP is precisely the max-cut problem.

Unlike the min-cut problem, which can be solved in polynomial time, max-cut is known to be [https://en.wikipedia.org/wiki/NP-hardness '''NP-hard''']. Its decision version is among the [https://en.wikipedia.org/wiki/Karp%27s_21_NP-complete_problems 21 '''NP-complete''' problems found by Karp]. This means we should not hope for a polynomial-time algorithm for solving the problem if [https://en.wikipedia.org/wiki/P_versus_NP_problem a famous conjecture in computational complexity] is correct. And due to another [https://en.wikipedia.org/wiki/BPP_(complexity)#Problems less famous conjecture in computational complexity], randomization alone probably cannot help this situation either.

We may compromise on our goal and allow the algorithm to ''not always find the optimal solution''. However, we still want to guarantee that the algorithm ''always returns a relatively good solution on all possible instances''. This notion is formally captured by '''approximation algorithms''' and the '''approximation ratio'''.

== Greedy algorithm ==

A natural heuristic for solving max-cut is to let the vertices sequentially join one of the two disjoint subsets <math>S</math> and <math>T</math>, each time ''greedily'' maximizing the ''current'' number of edges crossing between <math>S</math> and <math>T</math>.

To state the algorithm, we overload the definition <math>E(S,T)</math>. Given an undirected graph <math>G(V,E)</math>, for any disjoint subsets <math>S,T\subseteq V</math> of vertices, we define

:<math>E(S,T)=\{uv\in E\mid u\in S, v\in T\}</math>.

We also assume that the vertices are ordered arbitrarily as <math>V=\{v_1,v_2,\ldots,v_n\}</math>.

The greedy heuristic is then described as follows.

{{Theorem|''GreedyMaxCut''|

:'''Input:''' undirected graph <math>G(V,E)</math>,

:::with an arbitrary order of vertices <math>V=\{v_1,v_2,\ldots,v_n\}</math>;

----

:initially <math>S=T=\emptyset</math>;

:for <math>i=1,2,\ldots,n</math>

::<math>v_i</math> joins one of <math>S,T</math> to maximize the current <math>|E(S,T)|</math> (breaking ties arbitrarily);

}}
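A direct Python rendering of the greedy heuristic might look as follows. It is a sketch under assumptions: the graph is given as a list of vertices (in the chosen order) and a list of edge pairs, ties are broken in favour of <math>T</math>, and the function name <code>greedy_max_cut</code> is hypothetical.

```python
def greedy_max_cut(vertices, edges):
    """Greedy heuristic for max-cut.

    vertices is a list giving the processing order; edges is a list of
    pairs (u, v). Returns the two sides (S, T) of the bipartition.
    """
    adj = {v: [] for v in vertices}
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    S, T = set(), set()
    for v in vertices:
        to_S = sum(1 for w in adj[v] if w in S)   # |E(S, {v})|
        to_T = sum(1 for w in adj[v] if w in T)   # |E(T, {v})|
        # Joining S turns the edges between v and T into crossing edges.
        if to_T > to_S:
            S.add(v)
        else:                                     # ties go to T
            T.add(v)
    return S, T


# Hypothetical example: the 4-cycle 1-2-3-4.
square = [(1, 2), (2, 3), (3, 4), (4, 1)]
S, T = greedy_max_cut([1, 2, 3, 4], square)
print(S, T)
```

Each vertex only inspects its own neighbours, so the whole procedure takes <math>O(n+m)</math> time with this representation.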

The algorithm certainly runs in polynomial time.

Without any guarantee of how well the solution returned by the algorithm approximates the optimal solution, the algorithm is only a heuristic, not an '''approximation algorithm'''.

=== Approximation ratio ===

For now we restrict ourselves to the max-cut problem, although the notion applies more generally.

Let <math>G</math> be an arbitrary instance of the max-cut problem. Let <math>OPT_G</math> denote the size of the max-cut in graph <math>G</math>. More precisely,

:<math>OPT_G=\max_{S\subseteq V}|E(S,\overline{S})|</math>.

Let <math>SOL_G</math> be the size <math>|E(S,T)|</math> of the cut returned by the ''GreedyMaxCut'' algorithm on input graph <math>G</math>.

As a maximization problem it is trivial that <math>SOL_G\le OPT_G</math> for all <math>G</math>. To guarantee that the ''GreedyMaxCut'' gives good approximation of optimal solution, we need the other direction: | |||

{{Theorem|Approximation ratio|

:We say that the '''approximation ratio''' of the ''GreedyMaxCut'' algorithm is <math>\alpha</math>, or ''GreedyMaxCut'' is an '''<math>\alpha</math>-approximation''' algorithm, for some <math>0<\alpha\le 1</math>, if

::<math>\frac{SOL_G}{OPT_G}\ge \alpha</math> for every possible instance <math>G</math> of max-cut.

}}

With this notion, we now try to analyze the approximation ratio of the ''GreedyMaxCut'' algorithm. | |||

A difficulty in applying this notion is that the definition of approximation ratio compares the solution returned by the algorithm with the '''optimal solution''', yet in the analysis we can hardly make such a comparison directly: computing an optimal solution is '''NP-hard''', so there is no easy way to calculate <math>OPT_G</math> (e.g. by a closed form).

A popular step (usually the first step in analyzing an approximation ratio) to avoid this difficulty is that instead of comparing directly to the optimal solution, we compare to an '''upper bound''' on the optimal solution (for a minimization problem, this needs to be a lower bound), that is, we compare to something that is at least as good as the optimal solution (and which may not be realized by any feasible solution).

For the max-cut problem, a simple upper bound to <math>OPT_G</math> is <math>|E|</math>, the number of all edges. This is a trivial upper bound of max-cut since any cut is a subset of edges. | |||

Let <math>G(V,E)</math> be the input graph and <math>V=\{v_1,v_2,\ldots,v_n\}</math>. Initially <math>S_1=T_1=\emptyset</math>. And for <math>i=1,2,\ldots,n</math>, we let <math>S_{i+1}</math> and <math>T_{i+1}</math> be the respective <math>S</math> and <math>T</math> after <math>v_i</math> joins one of <math>S,T</math>. More precisely, | |||

* <math>S_{i+1}=S_i\cup\{v_i\}</math> and <math>T_{i+1}=T_i\,</math> if <math>|E(S_{i}\cup\{v_i\},T_i)|>|E(S_{i},T_i\cup\{v_i\})|</math>;

* <math>S_{i+1}=S_i\,</math> and <math>T_{i+1}=T_i\cup\{v_i\}</math> otherwise.

Finally, the size of the cut returned by the algorithm is given by

:<math>SOL_G=|E(S_{n+1},T_{n+1})|</math>. | |||


We first observe that we can count the number of edges <math>|E|</math> by summing up the contributions of individual <math>v_i</math>'s.

{{Theorem|Proposition 1| | |||

:<math>|E| = \sum_{i=1}^n\left(|E(S_i,\{v_i\})|+|E(T_i,\{v_i\})|\right)</math>. | |||

}} | |||

{{Proof| | |||

Note that <math>S_i\cup T_i=\{v_1,v_2,\ldots,v_{i-1}\}</math>, i.e. <math>S_i</math> and <math>T_i</math> together contain precisely those vertices preceding <math>v_i</math>. Therefore, by taking the sum | |||

:<math>\sum_{i=1}^n\left(|E(S_i,\{v_i\})|+|E(T_i,\{v_i\})|\right)</math>, | |||

we effectively enumerate all pairs <math>(v_j,v_i)</math> such that <math>v_jv_i\in E</math> and <math>j<i</math>, so every edge is counted exactly once. The total number is precisely <math>|E|</math>.

}} | |||

We then observe that <math>SOL_G</math> can be decomposed into contributions of individual <math>v_i</math>'s in the same way.

{{Theorem|Proposition 2| | |||

:<math>SOL_G = \sum_{i=1}^n\max\left(|E(S_i, \{v_i\})|,|E(T_i, \{v_i\})|\right)</math>. | |||

}} | |||

{{Proof| | |||

It is easy to observe that <math>E(S_i,T_i)\subseteq E(S_{i+1},T_{i+1})</math>, i.e. once an edge joins the cut between the current <math>S,T</math> it will never drop from the cut in the future.

We then define

:<math>\Delta_i= |E(S_{i+1},T_{i+1})|-|E(S_i,T_i)|=|E(S_{i+1},T_{i+1})\setminus E(S_i,T_i)|</math> | |||

to be the contribution of <math>v_i</math> in the final cut. | |||

It holds that

:<math>\sum_{i=1}^n\Delta_i=|E(S_{n+1},T_{n+1})|-|E(S_{1},T_{1})|=|E(S_{n+1},T_{n+1})|=SOL_G</math>.

On the other hand, due to the greedy rule: | |||

* <math>S_{i+1}=S_i\cup\{v_i\}</math> and <math>T_{i+1}=T_i\,</math> if <math>|E(S_{i}\cup\{v_i\},T_i)|>|E(S_{i},T_i\cup\{v_i\})|</math>;

* <math>S_{i+1}=S_i\,</math> and <math>T_{i+1}=T_i\cup\{v_i\}</math> otherwise;

it holds that | |||

:<math>\Delta_i=|E(S_{i+1},T_{i+1})\setminus E(S_i,T_i)| = \max\left(|E(S_i, \{v_i\})|,|E(T_i, \{v_i\})|\right)</math>. | |||

Together the proposition follows. | |||

}} | |||

Combining Proposition 1 and Proposition 2, we have

:<math> | |||

\begin{align} | |||

SOL_G | |||

&= \sum_{i=1}^n\max\left(|E(S_i, \{v_i\})|,|E(T_i, \{v_i\})|\right)\\ | |||

&\ge \frac{1}{2}\sum_{i=1}^n\left(|E(S_i, \{v_i\})|+|E(T_i, \{v_i\})|\right)\\ | |||

&=\frac{1}{2}|E|\\ | |||

&\ge\frac{1}{2}OPT_G. | |||

\end{align} | |||

</math> | |||

{{Theorem|Theorem| | |||

:The ''GreedyMaxCut'' is a <math>0.5</math>-approximation algorithm for the max-cut problem. | |||

}} | |||
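The two propositions and the resulting chain of inequalities can be checked mechanically on small graphs. The following sketch makes some assumptions that are not part of the notes: vertices are numbered <code>0..n-1</code>, the graph is a list of pairs without self-loops, and <math>OPT_G</math> is found by brute force over all <math>2^n</math> assignments, which is only feasible for tiny <math>n</math>. All function names are illustrative.

```python
from itertools import product

def cut_size(edges, side):
    """Number of edges whose endpoints lie on different sides."""
    return sum(1 for u, v in edges if side[u] != side[v])

def brute_force_max_cut(n, edges):
    """OPT_G by exhaustive search; exponential in n, for tiny graphs only."""
    return max(cut_size(edges, side) for side in product((0, 1), repeat=n))

def greedy_contributions(n, edges):
    """Run the greedy rule and record (|E(S_i,{v_i})|, |E(T_i,{v_i})|) per vertex."""
    side = [None] * n  # 0 = S, 1 = T
    contrib = []
    for v in range(n):
        to_s = to_t = 0
        for a, b in edges:
            if v in (a, b):
                w = b if a == v else a  # the other endpoint
                if side[w] == 0:
                    to_s += 1
                elif side[w] == 1:
                    to_t += 1
        side[v] = 0 if to_t > to_s else 1
        contrib.append((to_s, to_t))
    return side, contrib

def check_half_bound(n, edges):
    """Return (SOL_G, |E|, OPT_G) after asserting Propositions 1 and 2."""
    side, contrib = greedy_contributions(n, edges)
    sol = cut_size(edges, side)
    assert sum(a + b for a, b in contrib) == len(edges)    # Proposition 1
    assert sum(max(a, b) for a, b in contrib) == sol        # Proposition 2
    return sol, len(edges), brute_force_max_cut(n, edges)
```

For any input accepted by this sketch, the returned triple <code>(sol, m, opt)</code> should satisfy <code>2 * sol >= m >= opt</code>, mirroring the derivation above.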

This is not the best approximation ratio achieved by polynomial-time algorithms for max-cut. | |||

* The best known approximation ratio of any polynomial-time algorithm is achieved by the [http://www-math.mit.edu/~goemans/PAPERS/maxcut-jacm.pdf Goemans-Williamson algorithm], which relies on rounding an [https://en.wikipedia.org/wiki/Semidefinite_programming SDP] relaxation of max-cut and achieves an approximation ratio <math>\alpha^*\approx 0.878</math>, where <math>\alpha^*</math> is an irrational number whose precise value is given by <math>\alpha^*=\frac{2}{\pi}\inf_{x\in[-1,1]}\frac{\arccos(x)}{1-x}</math>.

* Assuming the [https://en.wikipedia.org/wiki/Unique_games_conjecture unique games conjecture], there does not exist any polynomial-time algorithm for max-cut with approximation ratio <math>\alpha>\alpha^*</math>.
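The numerical value of <math>\alpha^*</math> can be reproduced from the formula above with a simple grid search. This is a rough numerical sketch, not a proof: the grid resolution and the function name <code>gw_ratio</code> are arbitrary choices made here, and the endpoint <math>x=1</math> is skipped because the ratio is <math>0/0</math> there.

```python
import math

def gw_ratio(num_points=200_001):
    """Approximate (2/pi) * inf over x in [-1, 1) of arccos(x) / (1 - x) on a uniform grid."""
    best = float("inf")
    for i in range(num_points - 1):  # the last grid point x = 1 is skipped
        x = -1.0 + 2.0 * i / (num_points - 1)
        best = min(best, math.acos(x) / (1.0 - x))
    return 2.0 / math.pi * best
```

Calling <code>gw_ratio()</code> should give a value close to 0.8786, in agreement with <math>\alpha^*\approx 0.878</math>.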

== Derandomization by conditional expectation == | |||

There is a probabilistic interpretation of the greedy algorithm, which may explain why we use a greedy scheme for max-cut and why it works for finding an approximate max-cut.

Given an undirected graph <math>G(V,E)</math>, let us calculate the average size of cuts in <math>G</math>. For every vertex <math>v\in V</math> let <math>X_v\in\{0,1\}</math> be a ''uniform'' and ''independent'' random bit which indicates whether <math>v</math> joins <math>S</math> or <math>T</math>. This gives us a uniform random bipartition of <math>V</math> into <math>S</math> and <math>T</math>. | |||

The size of the random cut <math>|E(S,T)|</math> is given by | |||

:<math> | |||

|E(S,T)| = \sum_{uv\in E} I[X_u\neq X_v], | |||

</math> | |||

where <math>I[X_u\neq X_v]</math> is the Boolean indicator random variable that indicates whether event <math>X_u\neq X_v</math> occurs. | |||

Due to '''linearity of expectation''',

:<math>

\mathbb{E}[|E(S,T)|]=\sum_{uv\in E} \mathbb{E}[I[X_u\neq X_v]] =\sum_{uv\in E} \Pr[X_u\neq X_v]=\frac{|E|}{2}. | |||

</math> | |||

Recall that <math>|E|</math> is a trivial upper bound for the max-cut <math>OPT_G</math>. Due to the above argument, we have | |||

:<math> | |||

\mathbb{E}[|E(S,T)|]\ge\frac{OPT_G}{2}. | |||

</math> | |||

:{|border="2" width="100%" cellspacing="4" cellpadding="3" rules="all" style="margin:1em 1em 1em 0; border:solid 1px #AAAAAA; border-collapse:collapse;empty-cells:show;" | |||

| | |||

*In the above argument we use a few propositions from probability theory.

: '''linearity of expectation:''' | |||

:: Let <math>\boldsymbol{X}=(X_1,X_2,\ldots,X_n)</math> be a random vector. Then | |||

:::<math>\mathbb{E}\left[\sum_{i=1}^nc_iX_i\right]=\sum_{i=1}^nc_i\mathbb{E}[X_i]</math>, | |||

::where <math>c_1,c_2,\ldots,c_n</math> are scalars. | |||

::That is, the order of computations of expectation and linear (affine) function of a random vector can be exchanged. | |||

::Note that this property ignores the dependency between random variables, and hence is very useful. | |||

:'''Expectation of indicator random variable:''' | |||

::We usually use the notation <math>I[A]</math> to represent the Boolean indicator random variable that indicates whether the event <math>A</math> occurs: i.e. <math>I[A]=1</math> if event <math>A</math> occurs and <math>I[A]=0</math> if otherwise. | |||

::It is easy to see that <math>\mathbb{E}[I[A]]=\Pr[A]</math>. The expectation of an indicator random variable equals the probability of the event it indicates. | |||

|} | |||
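The identity <math>\mathbb{E}[|E(S,T)|]=\frac{|E|}{2}</math> can be confirmed exactly on a small graph by averaging the cut size over all <math>2^n</math> equally likely assignments of the bits <math>X_v</math>. The sketch below assumes vertices numbered <code>0..n-1</code> and no self-loops; the function name is illustrative.

```python
from fractions import Fraction
from itertools import product

def average_cut_size(n, edges):
    """Exact average of |E(S,T)| over all 2^n assignments of vertices to S or T."""
    total = 0
    for bits in product((0, 1), repeat=n):
        # An edge is cut exactly when its endpoints received different bits.
        total += sum(1 for u, v in edges if bits[u] != bits[v])
    return Fraction(total, 2 ** n)
```

For a graph with 7 edges on 5 vertices, the result should be exactly <code>Fraction(7, 2)</code>, regardless of how the edges are arranged.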

By the above analysis, the average (under the uniform distribution) size of all cuts in any graph <math>G</math> must be at least <math>\frac{OPT_G}{2}</math>. Due to '''the probabilistic method''', in particular '''the averaging principle''', there must exist a bipartition of <math>V</math> into <math>S</math> and <math>T</math> whose cut <math>E(S,T)</math> is of size at least <math>\frac{OPT_G}{2}</math>. The next question is how to find such a bipartition <math>\{S,T\}</math> ''algorithmically''.

We still fix an arbitrary order of all vertices as <math>V=\{v_1,v_2,\ldots,v_n\}</math>. Recall that each vertex <math>v_i</math> is associated with a uniform and independent random bit <math>X_{v_i}</math> to indicate whether <math>v_i</math> joins <math>S</math> or <math>T</math>. We want to fix the value of <math>X_{v_i}</math> one after another to construct a bipartition <math>\{\hat{S},\hat{T}\}</math> of <math>V</math> such that | |||

:<math>|E(\hat{S},\hat{T})|\ge\mathbb{E}[|E(S,T)|]\ge\frac{OPT_G}{2}</math>. | |||

We start with the first vertex <math>v_1</math> and its random variable <math>X_{v_1}</math>. By the '''law of total expectation''',

:<math> | |||

\mathbb{E}[|E(S,T)|]=\frac{1}{2}\mathbb{E}[|E(S,T)|\mid X_{v_1}=0]+\frac{1}{2}\mathbb{E}[|E(S,T)|\mid X_{v_1}=1].

</math> | |||

There must exist an assignment <math>x_1\in\{0,1\}</math> of <math>X_{v_1}</math> such that | |||

:<math>\mathbb{E}[|E(S,T)|\mid X_{v_1}=x_1]\ge \mathbb{E}[|E(S,T)|]</math>.

We can apply this argument repeatedly. In general, for any <math>i\le n</math> and any particular partial assignment <math>x_1,x_2,\ldots,x_{i-1}\in\{0,1\}</math> of <math>X_{v_1},X_{v_2},\ldots,X_{v_{i-1}}</math>, by the law of total expectation

:<math> | |||

\begin{align} | |||

\mathbb{E}[|E(S,T)|\mid X_{v_1}=x_1,\ldots, X_{v_{i-1}}=x_{i-1}]

=

&\frac{1}{2}\mathbb{E}[|E(S,T)|\mid X_{v_1}=x_1,\ldots, X_{v_{i-1}}=x_{i-1}, X_{v_{i}}=0]\\

&+\frac{1}{2}\mathbb{E}[|E(S,T)|\mid X_{v_1}=x_1,\ldots, X_{v_{i-1}}=x_{i-1}, X_{v_{i}}=1].

\end{align} | |||

</math> | |||

There must exist an assignment <math>x_{i}\in\{0,1\}</math> of <math>X_{v_i}</math> such that | |||

:<math> | |||

\mathbb{E}[|E(S,T)|\mid X_{v_1}=x_1,\ldots, X_{v_{i}}=x_{i}]\ge \mathbb{E}[|E(S,T)|\mid X_{v_1}=x_1,\ldots, X_{v_{i-1}}=x_{i-1}].

</math> | |||

By this argument, we can find a sequence <math>x_1,x_2,\ldots,x_n\in\{0,1\}</math> of bits which forms a ''monotone path'': | |||

:<math> | |||

\mathbb{E}[|E(S,T)|]\le \cdots \le \mathbb{E}[|E(S,T)|\mid X_{v_1}=x_1,\ldots, X_{v_{i-1}}=x_{i-1}] \le \mathbb{E}[|E(S,T)|\mid X_{v_1}=x_1,\ldots, X_{v_{i}}=x_{i}] \le \cdots \le \mathbb{E}[|E(S,T)|\mid X_{v_1}=x_1,\ldots, X_{v_{n}}=x_{n}].

</math> | |||

We already know that the first step of this monotone path satisfies <math>\mathbb{E}[|E(S,T)|]\ge\frac{OPT_G}{2}</math>. For the last step of the monotone path, <math>\mathbb{E}[|E(S,T)|\mid X_{v_1}=x_1,\ldots, X_{v_{n}}=x_{n}]</math>, all random bits have been fixed, so a bipartition <math>(\hat{S},\hat{T})</math> is determined by the assignment <math>x_1,\ldots, x_n</math> and the expectation simply returns the size <math>|E(\hat{S},\hat{T})|</math> of that cut. We have thus found a cut <math>E(\hat{S},\hat{T})</math> such that <math>|E(\hat{S},\hat{T})|\ge \frac{OPT_G}{2}</math>.

We translate the procedure of constructing this monotone path of conditional expectation to the following algorithm. | |||

{{Theorem|''MonotonePath''| | |||

:'''Input:''' undirected graph <math>G(V,E)</math>, | |||

:::with an arbitrary order of vertices <math>V=\{v_1,v_2,\ldots,v_n\}</math>; | |||

---- | |||

:initially <math>S=T=\emptyset</math>; | |||

:for <math>i=1,2,\ldots,n</math> | |||

::<math>v_i</math> joins one of <math>S,T</math> to maximize the average size of the cut conditioned on the choices made so far by the vertices <math>v_1,v_2,\ldots,v_i</math>;

}} | |||

We leave it as an exercise to verify that the choice of each <math>v_i</math> (which one of <math>S,T</math> to join) in the ''MonotonePath'' algorithm (which maximizes the average size of the cut conditioned on the choices made so far by the vertices <math>v_1,v_2,\ldots,v_i</math>) must be the same choice made by <math>v_i</math> in the ''GreedyMaxCut'' algorithm (which maximizes the current <math>|E(S,T)|</math>).

Therefore, the greedy algorithm for max-cut can be seen as a derandomization of the average-case (uniformly random) bipartition.
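The monotone path can be made concrete because the conditional expectation has a simple closed form: an edge with both endpoints already placed contributes 1 if it crosses and 0 otherwise, and every other edge contributes <math>\frac{1}{2}</math>. The sketch below relies on this observation and on assumptions not stated in the notes (vertices numbered <code>0..n-1</code>, no self-loops, ties broken in favor of <math>T</math>); the function names are illustrative.

```python
from fractions import Fraction

def conditional_expectation(edges, side):
    """E[|E(S,T)|] given the placed vertices in `side` (dict: vertex -> 0 for S, 1 for T).

    Unplaced vertices are treated as uniform independent random bits.
    """
    total = Fraction(0)
    for u, v in edges:
        if u in side and v in side:
            total += 1 if side[u] != side[v] else 0
        else:
            total += Fraction(1, 2)  # at least one endpoint is still random
    return total

def monotone_path(n, edges):
    """Fix the vertices one by one, never decreasing the conditional expectation."""
    side = {}
    trace = [conditional_expectation(edges, side)]
    for v in range(n):
        e_s = conditional_expectation(edges, {**side, v: 0})
        e_t = conditional_expectation(edges, {**side, v: 1})
        side[v] = 0 if e_s > e_t else 1  # ties go to T, as in the greedy rule
        trace.append(max(e_s, e_t))
    return side, trace
```

The returned <code>trace</code> starts at <math>\frac{|E|}{2}</math>, is non-decreasing, and ends at the size of the constructed cut. Since the two candidate expectations differ only in the edges between <math>v_i</math> and the already placed vertices, comparing them amounts to comparing <math>|E(T_i,\{v_i\})|</math> with <math>|E(S_i,\{v_i\})|</math>, which is the greedy rule.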

== Derandomization by pairwise independence == | |||

We still construct a random bipartition of <math>V</math> into <math>S</math> and <math>T</math>. But this time the random choices have '''bounded independence'''. | |||

For each vertex <math>v\in V</math>, we use a Boolean random variable <math>Y_v\in\{0,1\}</math> to indicate whether <math>v</math> joins <math>S</math> or <math>T</math>. The dependencies between <math>Y_v</math>'s are to be specified later.

By linearity of expectation, regardless of the dependencies between <math>Y_v</math>'s, it holds that: | |||

:<math> | |||

\mathbb{E}[|E(S,T)|]=\sum_{uv\in E} \Pr[Y_u\neq Y_v]. | |||

</math> | |||

In order to have the average cut <math>\mathbb{E}[|E(S,T)|]=\frac{|E|}{2}</math> as in the fully random case, we need <math>\Pr[Y_u\neq Y_v]=\frac{1}{2}</math> for every edge <math>uv\in E</math>. This only requires that the Boolean random variables <math>Y_v</math>'s are uniform and '''pairwise independent''' instead of being '''mutually independent'''.

The <math>n</math> pairwise independent random bits <math>\{Y_v\}_{v\in V}</math> can be constructed from at most <math>k=\lceil\log (n+1)\rceil</math> mutually independent random bits <math>X_1,X_2,\ldots,X_k\in\{0,1\}</math> using the following standard routine.

{{Theorem|Theorem| | |||

:Let <math>X_1, X_2, \ldots, X_k\in\{0,1\}</math> be mutually independent uniform random bits. | |||

:Let <math>S_1, S_2, \ldots, S_{2^k-1}\subseteq \{1,2,\ldots,k\}</math> enumerate the <math>2^k-1</math> nonempty subsets of <math>\{1,2,\ldots,k\}</math>. | |||

:For each <math>1\le i\le2^k-1</math>, let

::<math>Y_i=\bigoplus_{j\in S_i}X_j=\left(\sum_{j\in S_i}X_j\right)\bmod 2.</math> | |||

:Then <math>Y_1,Y_2,\ldots,Y_{2^k-1}</math> are pairwise independent uniform random bits. | |||

}} | |||
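For small <math>k</math> the theorem can be verified exhaustively: enumerate all <math>2^k</math> seeds, compute every <math>Y_i</math>, and count joint outcomes for each pair. In the sketch below the subset <math>S_i</math> is taken to be the set of positions of 1-bits in the binary expansion of <math>i</math>, which is one convenient enumeration and an assumption of this example; the function names are also illustrative.

```python
from itertools import combinations, product

def xor_bits(k):
    """For each seed x in {0,1}^k, list (Y_1, ..., Y_{2^k - 1}).

    Y_i is the parity of the seed bits selected by the binary expansion of i.
    """
    table = []
    for x in product((0, 1), repeat=k):
        ys = []
        for i in range(1, 2 ** k):
            parity = 0
            for j in range(k):
                if (i >> j) & 1:  # bit j of i is set, so x[j] belongs to S_i
                    parity ^= x[j]
            ys.append(parity)
        table.append(ys)
    return table

def is_pairwise_independent_uniform(table):
    """Check that every pair (Y_a, Y_b) takes each of the 4 values equally often."""
    rows = len(table)
    m = len(table[0])
    for a, b in combinations(range(m), 2):
        counts = {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 0}
        for ys in table:
            counts[(ys[a], ys[b])] += 1
        if any(4 * c != rows for c in counts.values()):
            return False
    return True
```

With <code>k = 3</code> the check passes for all 21 pairs among the 7 bits. The bits are not mutually independent, however: under this enumeration <math>Y_3=Y_1\oplus Y_2</math> for every seed.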

If <math>Y_v</math> for each vertex <math>v\in V</math> is constructed in this way from at most <math>k=\lceil\log (n+1)\rceil</math> mutually independent random bits <math>X_1,X_2,\ldots,X_k\in\{0,1\}</math>, then the <math>Y_v</math>'s are uniform and pairwise independent, and by the above calculation the corresponding bipartition <math>\{S,T\}</math> of <math>V</math> satisfies

:<math> | |||

\mathbb{E}[|E(S,T)|]=\sum_{uv\in E} \Pr[Y_u\neq Y_v]=\frac{|E|}{2}. | |||

</math> | |||

Note that the average is taken over the random choices of <math>X_1,X_2,\ldots,X_k\in\{0,1\}</math> (because they are the only random choices used to construct the bipartition <math>\{S,T\}</math>). By the probabilistic method, there must exist an assignment of <math>X_1,X_2,\ldots,X_k\in\{0,1\}</math> such that the bipartition <math>\{S,T\}</math> of <math>V</math> indicated by the corresponding <math>Y_v</math>'s satisfies

:<math>|E(S,T)|\ge \frac{|E|}{2}\ge\frac{OPT_G}{2}</math>.

This gives us the following algorithm, which exhaustively searches a smaller solution space of size <math>2^k=O(n)</math> (since <math>k=\lceil\log (n+1)\rceil</math> implies <math>2^k<2(n+1)</math>).

{{Theorem|Algorithm| | |||

:Enumerate vertices as <math>V=\{v_1,v_2,\ldots,v_n\}</math>; | |||

:let <math>k=\lceil\log (n+1)\rceil</math>; | |||

:for all <math>\vec{x}\in\{0,1\}^k</math> | |||

::initialize <math>S_{\vec{x}}=T_{\vec{x}}=\emptyset</math>; | |||

::for <math>i=1, 2, \ldots, n</math> | |||

:::if <math>\bigoplus_{j:\lfloor i/2^{j-1}\rfloor\bmod 2=1}x_j=1</math> then <math>v_i</math> joins <math>S_{\vec{x}}</math>;

:::else <math>v_i</math> joins <math>T_{\vec{x}}</math>; | |||

:return the <math>\{S_{\vec{x}},T_{\vec{x}}\}</math> with the largest <math>|E(S_{\vec{x}},T_{\vec{x}})|</math>; | |||

}} | |||

The algorithm has approximation ratio 1/2 and runs in polynomial time. | |||
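A direct transcription of this exhaustive search might look as follows. It is a sketch under assumptions made only for this example: vertices are numbered <code>1..n</code> to match <math>v_1,\ldots,v_n</math>, edges are pairs of such indices without self-loops, the logarithm is taken to base 2, and seed bit <code>x[j]</code> (0-indexed) is used by vertex <math>v_i</math> exactly when bit <code>j</code> of <code>i</code> is set, so that distinct vertices use distinct nonempty subsets of the seed.

```python
from itertools import product

def pairwise_max_cut(n, edges):
    """Try all 2^k seeds and keep the best induced bipartition.

    Returns (cut_size, S, T) with vertices numbered 1..n.
    """
    k = n.bit_length()  # equals ceil(log2(n + 1)) for n >= 1
    best = None
    for x in product((0, 1), repeat=k):
        in_s = {}
        for i in range(1, n + 1):
            parity = 0
            for j in range(k):
                if (i >> j) & 1:  # bit j of i selects seed bit x[j]
                    parity ^= x[j]
            in_s[i] = (parity == 1)
        size = sum(1 for u, v in edges if in_s[u] != in_s[v])
        if best is None or size > best[0]:
            S = [i for i in range(1, n + 1) if in_s[i]]
            T = [i for i in range(1, n + 1) if not in_s[i]]
            best = (size, S, T)
    return best
```

Only <math>2^k<2(n+1)</math> seeds are examined, and each is evaluated in time linear in <math>n\log n+|E|</math> in this sketch, so the whole search is polynomial. By the averaging argument above, the returned cut has size at least <math>\frac{|E|}{2}</math>.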

Latest revision as of 08:44, 24 August 2021

Graph Cut

Let [math]\displaystyle{ G(V, E) }[/math] be an undirected graph. A subset [math]\displaystyle{ C\subseteq E }[/math] of edges is a cut of graph [math]\displaystyle{ G }[/math] if [math]\displaystyle{ G }[/math] becomes disconnected after deleting all edges in [math]\displaystyle{ C }[/math].

Let [math]\displaystyle{ \{S,T\} }[/math] be a bipartition of [math]\displaystyle{ V }[/math] into nonempty subsets [math]\displaystyle{ S,T\subseteq V }[/math], where [math]\displaystyle{ S\cap T=\emptyset }[/math] and [math]\displaystyle{ S\cup T=V }[/math]. A cut [math]\displaystyle{ C }[/math] is specified by this bipartition as

- [math]\displaystyle{ C=E(S,T)\, }[/math],

where [math]\displaystyle{ E(S,T) }[/math] denotes the set of "crossing edges" with one endpoint in each of [math]\displaystyle{ S }[/math] and [math]\displaystyle{ T }[/math], formally defined as

- [math]\displaystyle{ E(S,T)=\{uv\in E\mid u\in S, v\in T\} }[/math].

Given a graph [math]\displaystyle{ G }[/math], there might be many cuts in [math]\displaystyle{ G }[/math], and we are interested in finding the minimum or maximum cut.

Min-Cut

The min-cut problem, also called the global minimum cut problem, is defined as follows.

Min-cut problem - Input: an undirected graph [math]\displaystyle{ G(V,E) }[/math];

- Output: a cut [math]\displaystyle{ C }[/math] in [math]\displaystyle{ G }[/math] with the smallest size [math]\displaystyle{ |C| }[/math].

Equivalently, the problem asks to find a bipartition of [math]\displaystyle{ V }[/math] into disjoint non-empty subsets [math]\displaystyle{ S }[/math] and [math]\displaystyle{ T }[/math] that minimizes [math]\displaystyle{ |E(S,T)| }[/math].

We consider the problem in a slightly more generalized setting, where the input graphs [math]\displaystyle{ G }[/math] can be multi-graphs, meaning that there could be multiple parallel edges between two vertices [math]\displaystyle{ u }[/math] and [math]\displaystyle{ v }[/math]. The cuts in multi-graphs are defined in the same way as before, and the cost of a cut [math]\displaystyle{ C }[/math] is given by the total number of edges (including parallel edges) in [math]\displaystyle{ C }[/math]. Equivalently, one may think of a multi-graph as a graph with integer edge weights, and the cost of a cut [math]\displaystyle{ C }[/math] is the total weights of all edges in [math]\displaystyle{ C }[/math].

A canonical deterministic approach to this problem goes through the max-flow min-cut theorem. A max-flow algorithm finds a minimum [math]\displaystyle{ s }[/math]-[math]\displaystyle{ t }[/math] cut, which disconnects a source [math]\displaystyle{ s\in V }[/math] from a sink [math]\displaystyle{ t\in V }[/math], both specified as part of the input. A global min cut can be found by exhaustively finding the minimum [math]\displaystyle{ s }[/math]-[math]\displaystyle{ t }[/math] cut for an arbitrarily fixed source [math]\displaystyle{ s }[/math] and all possible sinks [math]\displaystyle{ t\neq s }[/math]. This takes [math]\displaystyle{ (n-1)\times }[/math]max-flow time where [math]\displaystyle{ n=|V| }[/math] is the number of vertices.

The fastest known deterministic algorithm for the minimum cut problem on multi-graphs is the Stoer–Wagner algorithm, which achieves an [math]\displaystyle{ O(mn+n^2\log n) }[/math] time complexity where [math]\displaystyle{ m=|E| }[/math] is the total number of edges (counting the parallel edges).

If we restrict the input to be simple graphs (meaning there are no parallel edges) with no edge weights, there are better algorithms. A deterministic algorithm of Ken-ichi Kawarabayashi and Mikkel Thorup, published in STOC 2015, achieves near-linear (in the number of edges) time complexity.

Karger's Contraction algorithm

We will describe a simple and elegant randomized algorithm for the min-cut problem. The algorithm is due to David Karger.

Let [math]\displaystyle{ G(V, E) }[/math] be a multi-graph, which allows multiple parallel edges between two distinct vertices [math]\displaystyle{ u }[/math] and [math]\displaystyle{ v }[/math] but does not allow any self-loops, i.e. edges that join a vertex to itself. A multi-graph [math]\displaystyle{ G }[/math] can be represented by an adjacency matrix [math]\displaystyle{ A }[/math], in the way that each non-diagonal entry [math]\displaystyle{ A(u,v) }[/math] takes nonnegative integer values instead of just 0 or 1, representing the number of parallel edges between [math]\displaystyle{ u }[/math] and [math]\displaystyle{ v }[/math] in [math]\displaystyle{ G }[/math], and all diagonal entries [math]\displaystyle{ A(v,v)=0 }[/math] (since there is no self-loop).

Given a multi-graph [math]\displaystyle{ G(V,E) }[/math] and an edge [math]\displaystyle{ e\in E }[/math], we define the following contraction operator Contract([math]\displaystyle{ G }[/math], [math]\displaystyle{ e }[/math]), which transforms [math]\displaystyle{ G }[/math] to a new multi-graph.

The contraction operator Contract([math]\displaystyle{ G }[/math], [math]\displaystyle{ e }[/math]) - say [math]\displaystyle{ e=uv }[/math]:

- replace [math]\displaystyle{ \{u,v\} }[/math] by a new vertex [math]\displaystyle{ x }[/math];

- for every edge (no matter parallel or not) in the form of [math]\displaystyle{ uw }[/math] or [math]\displaystyle{ vw }[/math] that connects one of [math]\displaystyle{ \{u,v\} }[/math] to a vertex [math]\displaystyle{ w\in V\setminus\{u,v\} }[/math] in the graph other than [math]\displaystyle{ u,v }[/math], replace it by a new edge [math]\displaystyle{ xw }[/math];

- the rest of the graph does not change.

In other words, [math]\displaystyle{ Contract(G,uv) }[/math] merges the two vertices [math]\displaystyle{ u }[/math] and [math]\displaystyle{ v }[/math] into a new vertex [math]\displaystyle{ x }[/math] whose incident edges preserve the edges incident to [math]\displaystyle{ u }[/math] or [math]\displaystyle{ v }[/math] in the original graph [math]\displaystyle{ G }[/math] except for the parallel edges between them. This is why we consider multi-graphs instead of simple graphs: even if we start with a simple graph without parallel edges, the contraction operator may create parallel edges.

The contraction operator is illustrated by the following picture:

Karger's algorithm uses a simple idea:

- At each step we randomly select an edge in the current multi-graph to contract until there are only two vertices left.

- The parallel edges between these two remaining vertices must be a cut of the original graph.

- We return this cut and hope that with good chance this gives us a minimum cut.

The following is the pseudocode for Karger's algorithm.

RandomContract (Karger 1993) - Input: multi-graph [math]\displaystyle{ G(V,E) }[/math];

- while [math]\displaystyle{ |V|\gt 2 }[/math] do

- choose an edge [math]\displaystyle{ uv\in E }[/math] uniformly at random;

- [math]\displaystyle{ G=Contract(G,uv) }[/math];

- return [math]\displaystyle{ C=E }[/math] (the parallel edges between the only two vertices in [math]\displaystyle{ V }[/math]);
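The pseudocode above can be sketched in Python by tracking, for every original vertex, the merged vertex it currently belongs to. This is an illustrative sketch rather than an efficient implementation: it assumes a connected multigraph with vertices numbered 0..n-1 and no self-loops, each contraction here costs time linear in the number of vertices plus edges, and the name random_contract and the optional rng parameter are choices made for this example.

```python
import random

def random_contract(n, edges, rng=random):
    """One run of the contraction algorithm on a connected multigraph.

    Vertices are 0..n-1; edges is a list of (u, v) pairs with u != v,
    with a pair repeated once per parallel edge.
    Returns the list of original edges that form the cut found.
    """
    label = list(range(n))  # label[v] = merged vertex currently containing v
    live = list(edges)      # edges of the current multigraph (no self-loops)
    remaining = n
    while remaining > 2:
        u, v = rng.choice(live)  # uniform over the edges of the current multigraph
        a, b = label[u], label[v]
        # Contract: merge the vertex labeled b into the vertex labeled a.
        label = [a if c == b else c for c in label]
        # Edges inside a merged vertex would be self-loops, so drop them.
        live = [(p, q) for p, q in live if label[p] != label[q]]
        remaining -= 1
    return live
```

For instance, on two triangles joined by a single bridge edge, repeated calls return cuts of varying size, and the smallest cut seen over many runs is typically the bridge alone.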

Another way of looking at the contraction operator Contract([math]\displaystyle{ G }[/math],[math]\displaystyle{ e }[/math]) is that we are dealing with classes of vertices. Let [math]\displaystyle{ V=\{v_1,v_2,\ldots,v_n\} }[/math] be the set of all vertices. We start with [math]\displaystyle{ n }[/math] vertex classes [math]\displaystyle{ S_1,S_2,\ldots, S_n }[/math], where each class [math]\displaystyle{ S_i=\{v_i\} }[/math] contains one vertex. By calling [math]\displaystyle{ Contract(G,uv) }[/math], where [math]\displaystyle{ u\in S_i }[/math] and [math]\displaystyle{ v\in S_j }[/math] for distinct [math]\displaystyle{ i\neq j }[/math], we take the union of [math]\displaystyle{ S_i }[/math] and [math]\displaystyle{ S_j }[/math]. The edges in the contracted multi-graph are the edges that cross between different vertex classes.

This view of contraction is illustrated by the following picture:

The following claim is left as an exercise for the class:

- With suitable choice of data structures, each operation [math]\displaystyle{ Contract(G,e) }[/math] can be implemented within running time [math]\displaystyle{ O(n) }[/math] where [math]\displaystyle{ n=|V| }[/math] is the number of vertices.

In the above RandomContract algorithm, there are precisely [math]\displaystyle{ n-2 }[/math] contractions. Therefore, we have the following time upper bound.

Theorem - For any multigraph with [math]\displaystyle{ n }[/math] vertices, the running time of the RandomContract algorithm is [math]\displaystyle{ O(n^2) }[/math].

We emphasize that this is the time complexity of a single run of the algorithm: later we will see that we may need to run the algorithm many times to guarantee a desirable accuracy.

Analysis of accuracy

We now analyze the performance of the above algorithm. Since the algorithm is randomized, its output cut is a random variable even when the input is fixed, so the output may not always be correct. We want to give a theoretical guarantee of the chance that the algorithm returns a correct answer on an arbitrary input.

More precisely, on an arbitrarily fixed input multi-graph [math]\displaystyle{ G }[/math], we want to answer the following question rigorously:

- [math]\displaystyle{ p_{\text{correct}}=\Pr[\,\text{a minimum cut is returned by }RandomContract\,]\ge ? }[/math]

To answer this question, we prove a stronger statement: for arbitrarily fixed input multi-graph [math]\displaystyle{ G }[/math] and a particular minimum cut [math]\displaystyle{ C }[/math] in [math]\displaystyle{ G }[/math],

- [math]\displaystyle{ p_{C}=\Pr[\,C\mbox{ is returned by }RandomContract\,]\ge ? }[/math]

Obviously this will imply the previous lower bound for [math]\displaystyle{ p_{\text{correct}} }[/math] because the event in [math]\displaystyle{ p_{C} }[/math] implies the event in [math]\displaystyle{ p_{\text{correct}} }[/math].

- In the above argument we use the simple law in probability that [math]\displaystyle{ \Pr[A]\le \Pr[B] }[/math] if [math]\displaystyle{ A\subseteq B }[/math], i.e. event [math]\displaystyle{ A }[/math] implies event [math]\displaystyle{ B }[/math].

We introduce the following notations:

- Let [math]\displaystyle{ e_1,e_2,\ldots,e_{n-2} }[/math] denote the sequence of random edges chosen to contract in a run of the RandomContract algorithm.

- Let [math]\displaystyle{ G_1=G }[/math] denote the original input multi-graph. And for [math]\displaystyle{ i=1,2,\ldots,n-2 }[/math], let [math]\displaystyle{ G_{i+1}=Contract(G_{i},e_i) }[/math] be the multigraph after [math]\displaystyle{ i }[/math]th contraction.

Obviously [math]\displaystyle{ e_1,e_2,\ldots,e_{n-2} }[/math] are random variables, and they are the only random choices used in the algorithm: meaning that they along with the input [math]\displaystyle{ G }[/math], uniquely determine the sequence of multi-graphs [math]\displaystyle{ G_1,G_2,\ldots,G_{n-2} }[/math] in every iteration as well as the final output.

We now compute the probability [math]\displaystyle{ p_C }[/math] by decomposing it into more elementary events involving [math]\displaystyle{ e_1,e_2,\ldots,e_{n-2} }[/math]. This is due to the following proposition.

Proposition 1 - If [math]\displaystyle{ C }[/math] is a minimum cut in a multi-graph [math]\displaystyle{ G }[/math] and [math]\displaystyle{ e\not\in C }[/math], then [math]\displaystyle{ C }[/math] is still a minimum cut in the contracted graph [math]\displaystyle{ G'=Contract(G,e) }[/math].

Proof. We first observe that contraction will never create new cuts: every cut in the contracted graph [math]\displaystyle{ G' }[/math] must also be a cut in the original graph [math]\displaystyle{ G }[/math].

We then observe that a cut [math]\displaystyle{ C }[/math] in [math]\displaystyle{ G }[/math] "survives" in the contracted graph [math]\displaystyle{ G' }[/math] if and only if the contracted edge [math]\displaystyle{ e\not\in C }[/math].

Both observations are easy to verify by the definition of contraction operator (in particular, easier to verify if we take the vertex class interpretation). The detailed proofs are left as an exercise.

- [math]\displaystyle{ \square }[/math]

Recall that [math]\displaystyle{ e_1,e_2,\ldots,e_{n-2} }[/math] denote the sequence of random edges chosen to contract in a run of the RandomContract algorithm.

By Proposition 1, the event [math]\displaystyle{ \mbox{``}C\mbox{ is returned by }RandomContract\mbox{''}\, }[/math] is equivalent to the event [math]\displaystyle{ \mbox{``}e_i\not\in C\mbox{ for all }i=1,2,\ldots,n-2\mbox{''} }[/math]. Therefore:

- [math]\displaystyle{ \begin{align} p_C &= \Pr[\,C\mbox{ is returned by }{RandomContract}\,]\\ &= \Pr[\,e_i\not\in C\mbox{ for all }i=1,2,\ldots,n-2\,]\\ &= \prod_{i=1}^{n-2}\Pr[e_i\not\in C\mid \forall j\lt i, e_j\not\in C]. \end{align} }[/math]

The last equation is due to the so called chain rule in probability.

- The chain rule, also known as the law of progressive conditioning, is the following proposition: for a sequence of events (not necessarily independent) [math]\displaystyle{ A_1,A_2,\ldots,A_n }[/math],

- [math]\displaystyle{ \Pr[\forall i, A_i]=\prod_{i=1}^n\Pr[A_i\mid \forall j\lt i, A_j] }[/math].

- It is a simple consequence of the definition of conditional probability. By definition of conditional probability,

- [math]\displaystyle{ \Pr[A_n\mid \forall j\lt n, A_j]=\frac{\Pr[\forall i, A_i]}{\Pr[\forall j\lt n, A_j]} }[/math],

- and equivalently we have

- [math]\displaystyle{ \Pr[\forall i, A_i]=\Pr[\forall j\lt n, A_j]\Pr[A_n\mid \forall j\lt n, A_j] }[/math].

- Recursively applying this to [math]\displaystyle{ \Pr[\forall j\lt n, A_j] }[/math], we obtain the chain rule.

Back to the analysis of probability [math]\displaystyle{ p_C }[/math].

Now our task is to give a lower bound on each [math]\displaystyle{ p_i=\Pr[e_i\not\in C\mid \forall j\lt i, e_j\not\in C] }[/math]. The condition [math]\displaystyle{ \mbox{``}\forall j\lt i, e_j\not\in C\mbox{''} }[/math] means that the min-cut [math]\displaystyle{ C }[/math] survives the first [math]\displaystyle{ i-1 }[/math] contractions [math]\displaystyle{ e_1,e_2,\ldots,e_{i-1} }[/math], which due to Proposition 1 means that [math]\displaystyle{ C }[/math] is also a min-cut in the multi-graph [math]\displaystyle{ G_i }[/math] obtained by applying the first [math]\displaystyle{ (i-1) }[/math] contractions.

Then the conditional probability [math]\displaystyle{ p_i=\Pr[e_i\not\in C\mid \forall j\lt i, e_j\not\in C] }[/math] is the probability that no edge in [math]\displaystyle{ C }[/math] is hit when a uniform random edge in the current multi-graph is chosen assuming that [math]\displaystyle{ C }[/math] is a minimum cut in the current multi-graph. Intuitively this probability should be bounded from below, because as a min-cut [math]\displaystyle{ C }[/math] should be sparse among all edges. This intuition is justified by the following proposition.

Proposition 2 - If [math]\displaystyle{ C }[/math] is a min-cut in a multi-graph [math]\displaystyle{ G(V,E) }[/math], then [math]\displaystyle{ |E|\ge \frac{|V||C|}{2} }[/math].

Proof. - It must hold that the degree of each vertex [math]\displaystyle{ v\in V }[/math] is at least [math]\displaystyle{ |C| }[/math], or otherwise the set of edges incident to [math]\displaystyle{ v }[/math] forms a cut of size smaller than [math]\displaystyle{ |C| }[/math] which separates [math]\displaystyle{ \{v\} }[/math] from the rest of the graph, contradicting that [math]\displaystyle{ C }[/math] is a min-cut. And the bound [math]\displaystyle{ |E|\ge \frac{|V||C|}{2} }[/math] follows directly from applying the handshaking lemma to the fact that every vertex in [math]\displaystyle{ G }[/math] has degree at least [math]\displaystyle{ |C| }[/math].

- [math]\displaystyle{ \square }[/math]

Let [math]\displaystyle{ V_i }[/math] and [math]\displaystyle{ E_i }[/math] denote the vertex set and edge set of the multi-graph [math]\displaystyle{ G_i }[/math] respectively, and recall that [math]\displaystyle{ G_i }[/math] is the multi-graph obtained by applying the first [math]\displaystyle{ (i-1) }[/math] contractions. Since each contraction reduces the number of vertices by one, [math]\displaystyle{ |V_{i}|=n-i+1 }[/math]. And due to Proposition 2, [math]\displaystyle{ |E_i|\ge \frac{|V_i||C|}{2} }[/math] if [math]\displaystyle{ C }[/math] is still a min-cut in [math]\displaystyle{ G_i }[/math].

The probability [math]\displaystyle{ p_i=\Pr[e_i\not\in C\mid \forall j\lt i, e_j\not\in C] }[/math] can be computed as

- [math]\displaystyle{ \begin{align} p_i &=1-\frac{|C|}{|E_i|}\\ &\ge1-\frac{2}{|V_i|}\\ &=1-\frac{2}{n-i+1} \end{align}, }[/math]

where the inequality is due to Proposition 2.

We now can put everything together. We arbitrarily fix the input multi-graph [math]\displaystyle{ G }[/math] and any particular minimum cut [math]\displaystyle{ C }[/math] in [math]\displaystyle{ G }[/math].

- [math]\displaystyle{ \begin{align} p_{\text{correct}} &=\Pr[\,\text{a minimum cut is returned by }RandomContract\,]\\ &\ge \Pr[\,C\mbox{ is returned by }{RandomContract}\,]\\ &= \Pr[\,e_i\not\in C\mbox{ for all }i=1,2,\ldots,n-2\,]\\ &= \prod_{i=1}^{n-2}\Pr[e_i\not\in C\mid \forall j\lt i, e_j\not\in C]\\ &\ge \prod_{i=1}^{n-2}\left(1-\frac{2}{n-i+1}\right)\\ &= \prod_{k=3}^{n}\frac{k-2}{k}\\ &= \frac{2}{n(n-1)}. \end{align} }[/math]

This gives us the following theorem.

Theorem - For any multigraph with [math]\displaystyle{ n }[/math] vertices, the RandomContract algorithm returns a minimum cut with probability at least [math]\displaystyle{ \frac{2}{n(n-1)} }[/math].
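The telescoping product behind this bound can be checked with exact rational arithmetic. The following is a minimal sketch; the function name `survival_lower_bound` is introduced here for illustration only and does not appear in the notes.

```python
from fractions import Fraction


def survival_lower_bound(n: int) -> Fraction:
    """Exact value of the product of (1 - 2/(n-i+1)) for i = 1, ..., n-2."""
    bound = Fraction(1)
    for i in range(1, n - 1):  # i runs over 1, ..., n-2
        bound *= 1 - Fraction(2, n - i + 1)
    return bound


# The product should collapse to 2 / (n(n-1)) for every n >= 3.
for n in (3, 5, 10, 50):
    assert survival_lower_bound(n) == Fraction(2, n * (n - 1))
```

Using `Fraction` avoids floating-point rounding, so the comparison with the closed form is exact.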

At first glance this seems to be a miserable chance of success. However, notice that there may be exponentially many cuts in a graph (because potentially every nonempty proper subset [math]\displaystyle{ S\subset V }[/math] corresponds to a cut [math]\displaystyle{ C=E(S,\overline{S}) }[/math]), and Karger's algorithm effectively reduces this exponential-sized space of feasible solutions to a quadratic-sized one, an exponential improvement!

We can run RandomContract independently [math]\displaystyle{ t=\frac{n(n-1)\ln n}{2} }[/math] times and return the smallest cut found in any run. The probability that a minimum cut is found is at least:

- [math]\displaystyle{ \begin{align} &\quad 1-\Pr[\,\mbox{all }t\mbox{ independent runs of } RandomContract\mbox{ fail to find a min-cut}\,] \\ &= 1-\Pr[\,\mbox{a single run of }{RandomContract}\mbox{ fails}\,]^{t} \\ &\ge 1- \left(1-\frac{2}{n(n-1)}\right)^{\frac{n(n-1)\ln n}{2}} \\ &\ge 1-\frac{1}{n}. \end{align} }[/math]
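The last inequality can be checked with the standard bound [math]\displaystyle{ 1-x\le e^{-x} }[/math], which holds for all real [math]\displaystyle{ x }[/math]. As a sketch of that step, taking [math]\displaystyle{ x=\frac{2}{n(n-1)} }[/math]:

- [math]\displaystyle{ \left(1-\frac{2}{n(n-1)}\right)^{\frac{n(n-1)\ln n}{2}} \le \exp\left(-\frac{2}{n(n-1)}\cdot\frac{n(n-1)\ln n}{2}\right) = e^{-\ln n} = \frac{1}{n}. }[/math]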

Recall that a single run of the RandomContract algorithm takes [math]\displaystyle{ O(n^2) }[/math] time. Altogether this gives us a randomized algorithm that runs in time [math]\displaystyle{ O(n^4\log n) }[/math] and finds a minimum cut with high probability.
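The repetition scheme can be sketched in Python as follows. This is an illustrative sketch, not the implementation assumed by the running-time analysis above. It represents the multi-graph as an edge list over vertices labelled 0 to n-1 (an assumption) and performs contractions with a union-find structure. Scanning the edges in a uniformly random order and skipping self-loops is used here as a stand-in for repeatedly choosing a uniform random edge of the contracted multi-graph. The names `random_contract` and `repeated_min_cut` are hypothetical.

```python
import math
import random


def random_contract(n, edges, rng):
    """One run of random contraction on a connected multi-graph.

    n: number of vertices, labelled 0..n-1 (assumed representation).
    edges: list of (u, v) pairs; parallel edges are repeated entries.
    Returns the list of edges crossing the final two super-vertices.
    """
    parent = list(range(n))

    def find(x):
        # Union-find lookup with path halving.
        while parent[x] != x:
            parent[x] = parent[parent[x]]
            x = parent[x]
        return x

    order = edges[:]
    rng.shuffle(order)  # a random scan order yields uniform non-loop edges
    remaining = n
    for u, v in order:
        if remaining == 2:
            break
        ru, rv = find(u), find(v)
        if ru != rv:  # skip self-loops created by earlier contractions
            parent[ru] = rv  # contract the edge
            remaining -= 1
    return [(u, v) for u, v in edges if find(u) != find(v)]


def repeated_min_cut(n, edges, seed=0):
    """Run random_contract about n(n-1)ln(n)/2 times; keep the smallest cut."""
    rng = random.Random(seed)
    runs = math.ceil(n * (n - 1) * math.log(n) / 2)
    best = None
    for _ in range(runs):
        cut = random_contract(n, edges, rng)
        if best is None or len(cut) < len(best):
            best = cut
    return best
```

For example, on two triangles joined by a single bridge edge, `repeated_min_cut(6, [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)])` is expected to return the bridge with overwhelming probability.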

A Corollary by the Probabilistic Method

The analysis of Karger's algorithm implies the following combinatorial proposition for the number of distinct minimum cuts in a graph.

Corollary - For any graph [math]\displaystyle{ G(V,E) }[/math] of [math]\displaystyle{ n }[/math] vertices, the number of distinct minimum cuts in [math]\displaystyle{ G }[/math] is at most [math]\displaystyle{ \frac{n(n-1)}{2} }[/math].

Proof. Let [math]\displaystyle{ \mathcal{C} }[/math] denote the set of all minimum cuts in [math]\displaystyle{ G }[/math]. For each min-cut [math]\displaystyle{ C\in\mathcal{C} }[/math], let [math]\displaystyle{ A_C }[/math] denote the event "[math]\displaystyle{ C }[/math] is returned by RandomContract", whose probability is given by

- [math]\displaystyle{ p_C=\Pr[A_C]\, }[/math].

Clearly we have:

- for any distinct [math]\displaystyle{ C,D\in\mathcal{C} }[/math], [math]\displaystyle{ A_C\, }[/math] and [math]\displaystyle{ A_{D}\, }[/math] are disjoint events; and

- the union [math]\displaystyle{ \bigcup_{C\in\mathcal{C}}A_C }[/math] is precisely the event "a minimum cut is returned by RandomContract", whose probability is given by

- [math]\displaystyle{ p_{\text{correct}}=\Pr[\,\text{a minimum cut is returned by } RandomContract\,] }[/math].

Due to the additivity of probability, it holds that

- [math]\displaystyle{ p_{\text{correct}}=\sum_{C\in\mathcal{C}}\Pr[A_C]=\sum_{C\in\mathcal{C}}p_C. }[/math]

By the analysis of Karger's algorithm, we know [math]\displaystyle{ p_C\ge\frac{2}{n(n-1)} }[/math]. And since [math]\displaystyle{ p_{\text{correct}} }[/math] is a well defined probability, due to the unitarity of probability, it must hold that [math]\displaystyle{ p_{\text{correct}}\le 1 }[/math]. Therefore,

- [math]\displaystyle{ 1\ge p_{\text{correct}}=\sum_{C\in\mathcal{C}}p_C\ge|\mathcal{C}|\frac{2}{n(n-1)} }[/math],

which means [math]\displaystyle{ |\mathcal{C}|\le\frac{n(n-1)}{2} }[/math].

- [math]\displaystyle{ \square }[/math]
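The bound in the corollary is attained by the cycle on [math]\displaystyle{ n }[/math] vertices, where every pair of edges forms a minimum cut. The brute-force sketch below checks this on a small cycle. The helper name `count_min_cuts` and the edge-list representation are assumptions made for illustration.

```python
from itertools import combinations


def count_min_cuts(n, edges):
    """Brute-force (min-cut size, number of distinct min-cuts) of a small graph."""
    best_size, cuts = None, set()
    # Fix vertex 0 on the S side so each bipartition {S, T} is enumerated once.
    others = range(1, n)
    for r in range(0, n - 1):  # |S| = r + 1 <= n - 1, so T stays nonempty
        for extra in combinations(others, r):
            side = {0, *extra}
            cut = frozenset(i for i, (u, v) in enumerate(edges)
                            if (u in side) != (v in side))
            if best_size is None or len(cut) < best_size:
                best_size, cuts = len(cut), {cut}
            elif len(cut) == best_size:
                cuts.add(cut)
    return best_size, len(cuts)


n = 6
cycle = [(i, (i + 1) % n) for i in range(n)]
size, count = count_min_cuts(n, cycle)
print(size, count, n * (n - 1) // 2)
```

For the 6-cycle the minimum cut has size 2, and there are 15 distinct minimum cuts, matching [math]\displaystyle{ \frac{n(n-1)}{2} }[/math].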

Note that the statement of this corollary involves no randomness at all, while its proof relies on the analysis of a randomized procedure. This is an example of the probabilistic method.

Fast Min-Cut

In the analysis of the RandomContract algorithm, recall that we lower bound the probability [math]\displaystyle{ p_C }[/math] that a min-cut [math]\displaystyle{ C }[/math] is returned by RandomContract by the following telescoping product:

- [math]\displaystyle{ p_C\ge\prod_{i=1}^{n-2}\left(1-\frac{2}{n-i+1}\right) }[/math].

Here the index [math]\displaystyle{ i }[/math] corresponds to the [math]\displaystyle{ i }[/math]th contraction. The factor [math]\displaystyle{ \left(1-\frac{2}{n-i+1}\right) }[/math] is decreasing in [math]\displaystyle{ i }[/math], which means:

- The per-step probability of success only becomes bad when the graph gets "too contracted", that is, when the number of remaining vertices becomes small.

This motivates us to consider the following alteration of the algorithm: first use random contractions to reduce the number of vertices to a moderately small number, and then recursively find a min-cut in this smaller instance. By itself, this is just a restatement of what we have been doing. Inspired by the idea of boosting the accuracy via independent repetition, we instead apply the recursion on two smaller instances generated independently.

The algorithm obtained in this way is called FastCut. We first define a procedure that randomly contracts edges until there are [math]\displaystyle{ t }[/math] vertices left.

RandomContract[math]\displaystyle{ (G, t) }[/math] - Input: multi-graph [math]\displaystyle{ G(V,E) }[/math], and integer [math]\displaystyle{ t\ge 2 }[/math];

- while [math]\displaystyle{ |V|\gt t }[/math] do

- choose an edge [math]\displaystyle{ uv\in E }[/math] uniformly at random;

- [math]\displaystyle{ G=Contract(G,uv) }[/math];

- return [math]\displaystyle{ G }[/math];

The FastCut algorithm is recursively defined as follows.

FastCut[math]\displaystyle{ (G) }[/math] - Input: multi-graph [math]\displaystyle{ G(V,E) }[/math];

- if [math]\displaystyle{ |V|\le 6 }[/math] then return a min-cut by brute force;

- else let [math]\displaystyle{ t=\left\lceil1+|V|/\sqrt{2}\right\rceil }[/math];

- [math]\displaystyle{ G_1=RandomContract(G,t) }[/math];

- [math]\displaystyle{ G_2=RandomContract(G,t) }[/math];

- return the smaller one of [math]\displaystyle{ FastCut(G_1) }[/math] and [math]\displaystyle{ FastCut(G_2) }[/math];

As before, all [math]\displaystyle{ G }[/math] are multigraphs.
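The two procedures above can be sketched in Python as follows. This is a simplified illustration under stated assumptions: the multi-graph is stored as an edge list, each contraction relabels the whole edge list (so it does not match the [math]\displaystyle{ O(n) }[/math] contraction cost assumed in the notes), and the sketch returns only the size of the cut found. The names `contract_to`, `brute_force_cut_size` and `fast_cut` are hypothetical.

```python
import math
import random
from itertools import combinations


def contract_to(vertices, edges, t, rng):
    """RandomContract(G, t): contract uniform random edges until t vertices remain.

    vertices: list of hashable labels; edges: list of (u, v) with u != v,
    parallel edges repeated (an assumed edge-list representation).
    """
    vertices, edges = list(vertices), list(edges)
    while len(vertices) > t and edges:
        u, v = rng.choice(edges)  # uniform over current edges, with multiplicity
        vertices.remove(v)  # merge v into u
        relabeled = [(u if a == v else a, u if b == v else b) for a, b in edges]
        edges = [(a, b) for a, b in relabeled if a != b]  # drop self-loops
    return vertices, edges


def brute_force_cut_size(vertices, edges):
    """Smallest |E(S, T)| over all bipartitions; only used when |V| <= 6."""
    first, rest = vertices[0], vertices[1:]
    best = len(edges)
    for r in range(len(rest)):  # |S| = r + 1, so T stays nonempty
        for extra in combinations(rest, r):
            side = {first, *extra}
            best = min(best, sum((a in side) != (b in side) for a, b in edges))
    return best


def fast_cut(vertices, edges, rng):
    """FastCut(G): returns the size of the cut found (not the cut itself)."""
    n = len(vertices)
    if not edges:
        return 0  # disconnected input: the empty cut already separates it
    if n <= 6:
        return brute_force_cut_size(vertices, edges)
    t = math.ceil(1 + n / math.sqrt(2))
    results = []
    for _ in range(2):  # two independent contractions, then recurse on each
        sub_vertices, sub_edges = contract_to(vertices, edges, t, rng)
        results.append(fast_cut(sub_vertices, sub_edges, rng))
    return min(results)
```

Since every cut of a contracted multi-graph is also a cut of the original one, the value returned is always an upper bound on the min-cut size, and it equals the min-cut size whenever some min-cut survives along at least one branch of the recursion.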

Fix a min-cut [math]\displaystyle{ C }[/math] in the original multigraph [math]\displaystyle{ G }[/math]. By the same analysis as in the case of RandomContract, we have

- [math]\displaystyle{ \begin{align} &\Pr[C\text{ survives all contractions in }RandomContract(G,t)]\\ = &\prod_{i=1}^{n-t}\Pr[C\text{ survives the }i\text{-th contraction}\mid C\text{ survives the first }(i-1)\text{ contractions}]\\ \ge &\prod_{i=1}^{n-t}\left(1-\frac{2}{n-i+1}\right)\\ = &\prod_{k=t+1}^{n}\frac{k-2}{k}\\ = &\frac{t(t-1)}{n(n-1)}. \end{align} }[/math]

When [math]\displaystyle{ t=\left\lceil1+n/\sqrt{2}\right\rceil }[/math], this probability is at least [math]\displaystyle{ 1/2 }[/math]. The value of [math]\displaystyle{ t }[/math] is chosen precisely to make this probability at least [math]\displaystyle{ 1/2 }[/math], which is crucial in the following analysis of accuracy.
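As a short check of this claim, using only [math]\displaystyle{ t\ge 1+n/\sqrt{2} }[/math]:

- [math]\displaystyle{ \frac{t(t-1)}{n(n-1)} \ge \frac{\left(1+\frac{n}{\sqrt{2}}\right)\frac{n}{\sqrt{2}}}{n(n-1)} = \frac{\frac{n^2}{2}+\frac{n}{\sqrt{2}}}{n(n-1)} \ge \frac{n^2/2}{n(n-1)} \ge \frac{1}{2}. }[/math]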

We denote by [math]\displaystyle{ A }[/math] and [math]\displaystyle{ B }[/math] the following events:

- [math]\displaystyle{ \begin{align} A: &\quad C\text{ survives all contractions in }RandomContract(G,t);\\ B: &\quad\text{size of min-cut is unchanged after }RandomContract(G,t); \end{align} }[/math]

Clearly, [math]\displaystyle{ A }[/math] implies [math]\displaystyle{ B }[/math], and by the above analysis [math]\displaystyle{ \Pr[B]\ge\Pr[A]\ge\frac{1}{2} }[/math].

We denote by [math]\displaystyle{ p(n) }[/math] the lower bound on the probability that [math]\displaystyle{ FastCut(G) }[/math] succeeds for a multigraph of [math]\displaystyle{ n }[/math] vertices, that is

- [math]\displaystyle{ p(n) =\min_{G: |V|=n}\Pr[\,FastCut(G)\text{ returns a min-cut in }G\,]. }[/math]

Suppose that [math]\displaystyle{ G }[/math] is the multigraph that achieves the minimum in the above definition. The following recurrence holds for [math]\displaystyle{ p(n) }[/math].

- [math]\displaystyle{ \begin{align} p(n) &= \Pr[\,FastCut(G)\text{ returns a min-cut in }G\,]\\ &= \Pr[\,\text{ a min-cut of }G\text{ is returned by }FastCut(G_1)\text{ or }FastCut(G_2)\,]\\ &\ge 1-\left(1-\Pr[B\wedge FastCut(G_1)\text{ returns a min-cut in }G_1\,]\right)^2\\ &\ge 1-\left(1-\Pr[A\wedge FastCut(G_1)\text{ returns a min-cut in }G_1\,]\right)^2\\ &= 1-\left(1-\Pr[A]\Pr[ FastCut(G_1)\text{ returns a min-cut in }G_1\mid A]\right)^2\\ &\ge 1-\left(1-\frac{1}{2}p\left(\left\lceil1+n/\sqrt{2}\right\rceil\right)\right)^2, \end{align} }[/math]

where [math]\displaystyle{ A }[/math] and [math]\displaystyle{ B }[/math] are defined as above such that [math]\displaystyle{ \Pr[A]\ge\frac{1}{2} }[/math].

The base case is that [math]\displaystyle{ p(n)=1 }[/math] for [math]\displaystyle{ n\le 6 }[/math]. By induction it is easy to prove that

- [math]\displaystyle{ p(n)=\Omega\left(\frac{1}{\log n}\right). }[/math]
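One way to see this is to track the recurrence along the chain of subproblem sizes. If [math]\displaystyle{ q_k }[/math] denotes the bound after [math]\displaystyle{ k }[/math] levels of recursion, with [math]\displaystyle{ q_0=1 }[/math] and [math]\displaystyle{ q_{k+1}=1-(1-q_k/2)^2 }[/math], then a simple induction gives [math]\displaystyle{ q_k\ge\frac{1}{k+1} }[/math], and the recursion depth is [math]\displaystyle{ O(\log n) }[/math]. The sketch below evaluates the recurrence with equality and checks this invariant numerically. The function names `success_bound` and `depth` are introduced here for illustration.

```python
import math
from functools import lru_cache


@lru_cache(maxsize=None)
def success_bound(n):
    """Iterate p(n) = 1 - (1 - p(t)/2)^2 with p(n) = 1 for n <= 6."""
    if n <= 6:
        return 1.0
    t = math.ceil(1 + n / math.sqrt(2))
    return 1 - (1 - success_bound(t) / 2) ** 2


@lru_cache(maxsize=None)
def depth(n):
    """Number of recursive levels before the brute-force base case."""
    if n <= 6:
        return 0
    return 1 + depth(math.ceil(1 + n / math.sqrt(2)))


for n in (10, 100, 10_000, 1_000_000):
    # The invariant q_k >= 1/(k+1), with k the recursion depth for this n.
    assert success_bound(n) >= 1 / (depth(n) + 1)
    print(n, depth(n), round(success_bound(n), 4))
```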

Recall that we can implement an edge contraction in [math]\displaystyle{ O(n) }[/math] time, thus it is easy to verify the following recursion of time complexity:

- [math]\displaystyle{ T(n)=2T\left(\left\lceil1+n/\sqrt{2}\right\rceil\right)+O(n^2), }[/math]

where [math]\displaystyle{ T(n) }[/math] denotes the running time of [math]\displaystyle{ FastCut(G) }[/math] on a multigraph [math]\displaystyle{ G }[/math] of [math]\displaystyle{ n }[/math] vertices.

By induction with the base case [math]\displaystyle{ T(n)=O(1) }[/math] for [math]\displaystyle{ n\le 6 }[/math], it is easy to verify that [math]\displaystyle{ T(n)=O(n^2\log n) }[/math].
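One way to verify this bound is to unroll the recursion level by level. The following is a sketch that ignores the ceiling and the additive 1 in the subproblem size (a simplifying assumption). At depth [math]\displaystyle{ k }[/math] there are [math]\displaystyle{ 2^k }[/math] subproblems, each on about [math]\displaystyle{ n/2^{k/2} }[/math] vertices, so the total non-recursive work at depth [math]\displaystyle{ k }[/math] is

- [math]\displaystyle{ 2^k\cdot O\left(\left(\frac{n}{2^{k/2}}\right)^2\right)=O(n^2). }[/math]

The subproblem size drops to a constant after about [math]\displaystyle{ 2\log_2 n }[/math] levels, which gives

- [math]\displaystyle{ T(n)=\sum_{k=0}^{O(\log n)}O(n^2)=O(n^2\log n). }[/math]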

Theorem - For any multigraph with [math]\displaystyle{ n }[/math] vertices, the FastCut algorithm returns a minimum cut with probability [math]\displaystyle{ \Omega\left(\frac{1}{\log n}\right) }[/math] in time [math]\displaystyle{ O(n^2\log n) }[/math].

At this point, the name FastCut seems misleading, because a single run is actually slower than a single run of the original RandomContract algorithm; what improves is the chance of successfully finding a min-cut (from an [math]\displaystyle{ \Omega(1/n^2) }[/math] to an [math]\displaystyle{ \Omega(1/\log n) }[/math]).

Given any input multi-graph, running the FastCut algorithm independently [math]\displaystyle{ O((\log n)^2) }[/math] times and returning the smallest cut found gives an algorithm that runs in time [math]\displaystyle{ O(n^2\log^3n) }[/math] and returns a min-cut with probability [math]\displaystyle{ 1-O(1/n) }[/math], i.e. with high probability.

Recall that the running time of the best known deterministic algorithm for min-cut on multi-graphs is [math]\displaystyle{ O(mn+n^2\log n) }[/math]. On dense graphs, the randomized algorithm outperforms the best known deterministic algorithm.

Finally, Karger further improves this and obtains a near-linear (in the number of edges) time randomized algorithm for minimum cut in multi-graphs.

Max-Cut

The maximum cut problem, in short the max-cut problem, is defined as follows.

Max-cut problem - Input: an undirected graph [math]\displaystyle{ G(V,E) }[/math];

- Output: a bipartition of [math]\displaystyle{ V }[/math] into disjoint subsets [math]\displaystyle{ S }[/math] and [math]\displaystyle{ T }[/math] that maximizes [math]\displaystyle{ |E(S,T)| }[/math].

The problem is a typical MAX-CSP, an optimization version of the constraint satisfaction problem. An instance of CSP consists of:

- a set of variables [math]\displaystyle{ x_1,x_2,\ldots,x_n }[/math] usually taking values from some finite domain;