随机算法 (Fall 2011)/Randomized Algorithms: an Introduction: Difference between revisions

imported>WikiSysop No edit summary |

imported>WikiSysop |

||

| Line 163: | Line 163: | ||

= Graph Coloring = | = Graph Coloring = | ||

A '''coloring''' of a graph <math>G(V,E)</math> is a mapping <math> | A '''coloring''' of a graph <math>G(V,E)</math> is a mapping <math>\sigma:V\rightarrow[q]</math> for some integer <math>q</math>, satisfying that <math>\sigma(u)\neq \sigma(v)</math> for all <math>uv\in E</math>. | ||

The problem of deciding whether an input graph is colorable by some fixed number of colors is a classic problem in Computer Science. Denote by <math>\Delta</math> the maximum degree of <math>G</math>. | The problem of deciding whether an input graph is colorable by some fixed number of colors is a classic problem in Computer Science. Denote by <math>\Delta</math> the maximum degree of <math>G</math>. | ||

Latest revision as of 01:21, 29 August 2011

Randomized Quicksort

Given as input a permutation [math]\displaystyle{ \pi }[/math] of [math]\displaystyle{ n }[/math] numbers, we want to reorder the numbers in [math]\displaystyle{ \pi }[/math] in increasing order. This is the problem of sorting, one of the most classic problem in Computer Science. The famous Quicksort algorithm has very good performance in practice.

Quicksort if the length of [math]\displaystyle{ \pi }[/math] is greater than 1 do:

- pick an [math]\displaystyle{ i\in[n] }[/math] and choose [math]\displaystyle{ \pi_i }[/math] as the pivot;

- partition [math]\displaystyle{ \pi }[/math] into three parts: [math]\displaystyle{ \pi' }[/math], [math]\displaystyle{ \pi_i }[/math], and [math]\displaystyle{ \pi'' }[/math], where all numbers in [math]\displaystyle{ \pi' }[/math] are smaller than [math]\displaystyle{ \pi_i }[/math] and all numbers in [math]\displaystyle{ \pi'' }[/math] are larger than [math]\displaystyle{ \pi_i }[/math];

- recursively sort [math]\displaystyle{ \pi' }[/math] and [math]\displaystyle{ \pi'' }[/math];

The time complexity of this sorting algorithm is measured by the number of comparisons.

For the deterministic quicksort algorithm, the pivot is picked from a fixed position [math]\displaystyle{ i }[/math] (e.g. the first element [math]\displaystyle{ \pi_0 }[/math]). The worst-case time complexity in terms of number of comparisons is [math]\displaystyle{ \Theta(n^2) }[/math], though the average-case complexity is [math]\displaystyle{ O(n\log n) }[/math], matching the lower bound for comparison-based sorting.

We consider the following randomized version of the quicksort.

Randomized Quicksort if the length of [math]\displaystyle{ \pi }[/math] is greater than 1 do:

- pick an [math]\displaystyle{ i\in[n] }[/math] uniformly at random and choose [math]\displaystyle{ \pi_i }[/math] as the pivot;

- partition [math]\displaystyle{ \pi }[/math] into three parts: [math]\displaystyle{ \pi' }[/math], [math]\displaystyle{ \pi_i }[/math], and [math]\displaystyle{ \pi'' }[/math], where all numbers in [math]\displaystyle{ \pi' }[/math] are smaller than [math]\displaystyle{ \pi_i }[/math] and all numbers in [math]\displaystyle{ \pi'' }[/math] are larger than [math]\displaystyle{ \pi_i }[/math];

- recursively sort [math]\displaystyle{ \pi' }[/math] and [math]\displaystyle{ \pi'' }[/math];

We assume that the complexity is measured in terms of number of comparisons, and the deterministic quicksort chooses the first element in the permutation as the pivot.

Average-case vs. Randomization

We show that the expected running time of randomized quicksort on any input is equal to the average-case complexity of deterministic quicksort. Formally, we have the following theorem.

Theorem - For any permutation [math]\displaystyle{ \pi }[/math] of [math]\displaystyle{ n }[/math] numbers, let [math]\displaystyle{ t(\pi) }[/math] be the complexity of the deterministic quicksort algorithm on input [math]\displaystyle{ \pi }[/math], and let [math]\displaystyle{ T(\pi) }[/math] be the random variable representing the complexity of the randomized quicksort on input [math]\displaystyle{ \pi }[/math].

- Let [math]\displaystyle{ \Pi }[/math] be a uniformly random permutation of [math]\displaystyle{ n }[/math] numbers.

- [math]\displaystyle{ \mathrm{E}[T(\pi)]=\mathrm{E}[t(\Pi)] }[/math]

- where the first expectation is taken over the randomness of the algorithm, and the second expectation is taken over the random permutation [math]\displaystyle{ \Pi }[/math].

We know that the average-case complexity of deterministic quicksort is [math]\displaystyle{ O(n\log n) }[/math], i.e. [math]\displaystyle{ \mathrm{E}[t(\Pi)]=O(n\log n) }[/math]. Then the above theorem implies that the expected running time of the randomized quicksort is [math]\displaystyle{ \mathrm{E}[T(\pi)]=O(n\log n) }[/math] on any input [math]\displaystyle{ \pi }[/math].

For the deterministic quicksort, there exists some bad input [math]\displaystyle{ \pi }[/math] such that the running time of the algorithm is as bad as [math]\displaystyle{ \Omega(n^2) }[/math], each time we run the algorithm on that input. However, for the randomized quicksort, for any input [math]\displaystyle{ \pi }[/math], the running time of the algorithm is random and exhibits a well-behaved distribution.

We now prove the theorem.

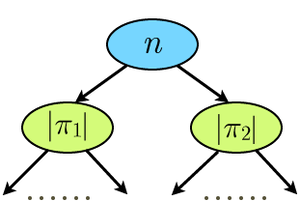

Each running instance of the quicksort can be represented by a binary recursion tree. Each node corresponds to a recursive call of the quciksort algorithm, and the node is labeled with the size of the current input permutation [math]\displaystyle{ \pi }[/math]. The running time of the algorithm is the sum of the labels of the nodes.

We can show that the distribution of the labels of the tree defined by the deterministic quicksort on a random permutation [math]\displaystyle{ \Pi }[/math] is equivalent to the distribution of the labels of the tree defined by the randomized quicksort on any fixed permutation [math]\displaystyle{ \pi }[/math]. The argument uses an important principle for the probabilistic analysis of algorithms: the principle of deferred decisions.

Principle of deferred decisions

For the average-case analysis, the input is a uniformly random permutation which is generated before the running of the algorithm. We can defer the decision of the random choices to the time when they are actually used by the algorithm.

For the deterministic quicksort, each time we choose the first element in the current permutation as the pivot. For a uniformly random inout permutation [math]\displaystyle{ \Pi }[/math]. Once the random choice of [math]\displaystyle{ \Pi }[/math] is determined, all the pivots and the labels of the recursion tree are accordingly fixed. We defer the random choice of the uniform input permutation [math]\displaystyle{ \Pi }[/math] to the time when the pivots are determined. At each step, the pivot is uniformly sampled from the current permutation. This deferring of random choices does not change the distribution of the pivots since the element in a fixed position of a random permutation is equally distributed as the element in a random position of a fixed permutation.

Observe that the resulting process (each time the pivot is uniformly sampled from the current permutation) has exactly the same way of sampling pivots as the randomized quicksort. Thus, the expected complexity of randomized quicksort on any fixed input is equal to the expected complexity of deterministic quicksort on a random input. The theorem is proved.

Primality Test

A primality test is an algorithm that given as input a number [math]\displaystyle{ n }[/math] determines whether [math]\displaystyle{ n }[/math] is prime.

Fermat Test

Recall the Fermat's little theorem.

Fermat's little theorem - If [math]\displaystyle{ n\gt 2 }[/math] is prime, then [math]\displaystyle{ a^{n-1}\equiv 1\pmod n }[/math] for every [math]\displaystyle{ a\in\{1,2,\ldots,n-1\} }[/math].

There are several proofs for this famous theorem. We will not prove the theorem but will only use it here.

If we can find an [math]\displaystyle{ a\in\{1,2,\ldots,n-1\} }[/math] such that [math]\displaystyle{ a^{n-1}\not\equiv 1\pmod n }[/math], it will prove that [math]\displaystyle{ n }[/math] is composite. This inspires the following "primality testing" algorithm.

Fermat test - Choose a uniformly random [math]\displaystyle{ a\in\{1,2,\ldots,n-1\} }[/math].

- If [math]\displaystyle{ a^{n-1}\not\equiv 1\pmod n }[/math], then return "composite".

- Else return "probably prime".

Complexity of Fermat test

The time complexity of this algorithm depends on the computational cost [math]\displaystyle{ a^{n-1} \bmod n }[/math], whose straightforward computing takes [math]\displaystyle{ n-2 }[/math] multiplications, which is too expensive. We describe an efficient way of computing the modular exponent [math]\displaystyle{ a^x\bmod n\, }[/math] where [math]\displaystyle{ x\in[n] }[/math].

We first make the following observations regarding the modular exponentiations:

- If the values of [math]\displaystyle{ a^x\bmod n }[/math] and [math]\displaystyle{ a^y\bmod n }[/math] are both known, then [math]\displaystyle{ a^{x+y}\bmod n }[/math] can be computed by multiplying (modulo [math]\displaystyle{ n }[/math]) them.

- [math]\displaystyle{ a^{2^i} }[/math] can be computed by letting [math]\displaystyle{ a_0=a }[/math] and [math]\displaystyle{ a_{j}=a_{j-1}^2 \pmod n }[/math] for [math]\displaystyle{ j=1,2,\ldots, i }[/math], which takes only [math]\displaystyle{ i }[/math] modular multiplications.

Let [math]\displaystyle{ \ell=\lceil\log_2 n\rceil }[/math]. A number [math]\displaystyle{ x\in[n] }[/math] can be represented in its binary form: [math]\displaystyle{ x_\ell x_{\ell-1}\cdots x_1x_0 }[/math], where each [math]\displaystyle{ x_i\in\{0,1\} }[/math], so that [math]\displaystyle{ x=\sum_{i=0}^{\ell}x_i\cdot2^i }[/math].

Combining the above two observations, all [math]\displaystyle{ a^{x_i2^i}\bmod n }[/math] can be computed in [math]\displaystyle{ O(\log n) }[/math] many multiplications, and [math]\displaystyle{ a^x\bmod n }[/math] can be computed by multiplying (modulo [math]\displaystyle{ n }[/math]) them together.

The time complexity of Fermat test thus can be made in polynomial of [math]\displaystyle{ \log n }[/math].

Accuracy of Fermat test

If the output is "composite", then [math]\displaystyle{ a^{n-1}\not\equiv 1\pmod n }[/math] for some [math]\displaystyle{ a\in\{1,2,\ldots,n-1\} }[/math]. By the Fermat's little theorem, [math]\displaystyle{ n }[/math] must be composite. Therefore, for any prime [math]\displaystyle{ n }[/math], the output is always "probably prime".

For composite [math]\displaystyle{ n }[/math], it is possible that the algorithm picks an [math]\displaystyle{ a }[/math] such that [math]\displaystyle{ a^{n-1}\equiv 1\pmod n }[/math] and outputs "probably prime". But if the fraction of such bad [math]\displaystyle{ a }[/math] in [math]\displaystyle{ \{1,2,\ldots,n-1\} }[/math] is small enough, then the testing algorithm may still correctly output "composite" with a good chance. However, there exist (though very rare) such composites, called Carmichael numbers, that may fool the Fermat test.

Definition (Carmichael number) - A composite number [math]\displaystyle{ n }[/math] is a Carmichael number if [math]\displaystyle{ a^{n-1}\equiv 1\pmod n }[/math] for all [math]\displaystyle{ a\in\mathbb{Z}_n^* }[/math].

Here [math]\displaystyle{ \mathbb{Z}_n^* }[/math] is the multiplicative group modulo [math]\displaystyle{ n }[/math], defined as [math]\displaystyle{ \mathbb{Z}_n^*=\{a\mid 1\le a\le n-1\wedge \mathrm{gcd}(a,n)=1\} }[/math].

For non-Carmichael composites, the Fermat test may detect the compositeness with a fairly good chance. Let [math]\displaystyle{ B=\{a\in\mathbb{Z}_n^*\mid a^{n-1}\equiv 1\pmod n\} }[/math]. Note that [math]\displaystyle{ B }[/math] is closed under multiplication (modulo [math]\displaystyle{ n }[/math]), thus [math]\displaystyle{ B }[/math] is a subgroup of [math]\displaystyle{ \mathbb{Z}_n^* }[/math]. Therefore, [math]\displaystyle{ |\mathbb{Z}_n^*| }[/math] is divisible by [math]\displaystyle{ |B| }[/math].

If [math]\displaystyle{ n }[/math] is neither prime nor Carmichael, then [math]\displaystyle{ \mathbb{Z}_n^*\setminus B }[/math] is nonempty, i.e. [math]\displaystyle{ B }[/math] is a proper subgroup of [math]\displaystyle{ \mathbb{Z}_n^* }[/math], thus [math]\displaystyle{ |\mathbb{Z}_n^*|/|B| }[/math] is at least 2 and there are at least half [math]\displaystyle{ a\in\{1,2,\ldots,n-1\} }[/math] satisfying [math]\displaystyle{ a^{n-1}\not\equiv 1 }[/math].

In conclusion,

- if [math]\displaystyle{ n }[/math] is prime, then the Fermat test returns "probably prime" with probability 1;

- if [math]\displaystyle{ n }[/math] is non-Carmichael composite, then the Fermat test returns "composite" with probability at least [math]\displaystyle{ 1/2 }[/math];

- if [math]\displaystyle{ n }[/math] is a Carmichael number, the Fermat test breaks down.

As long as the input is not a Carmichael number, we can repeat the Fermat test independently for [math]\displaystyle{ k }[/math] times and reduce the error probability to [math]\displaystyle{ 2^{-k} }[/math].

The Carmichael numbers are very rare. Let [math]\displaystyle{ c(n) }[/math] be the "Carmichael density" that

- [math]\displaystyle{ c(n)=\frac{\text{number of Carmichael numbers }\le n}{n} }[/math].

In 1956, Erdős proved that

- [math]\displaystyle{ c(n)\le\exp\left(-\Omega\left(\frac{\log n\log\log\log n}{\log \log n}\right)\right)=n^{-\Omega\left(\frac{\log\log\log n}{\log\log n}\right)} }[/math].

If one only needs to generates a prime number instead of testing the primality of a given number, then we can generates a random number, and apply the Fermat test. Due to the prime number theorem, the number of prime numbers less than or equal to [math]\displaystyle{ n }[/math] is [math]\displaystyle{ \pi(n)\sim\frac{n}{\ln n} }[/math]. This scheme will generates a prime number in a reasonable number of independent trials with a good chance.

Miller-Rabin Test

The Fermat test is based on the following way to prove that a number [math]\displaystyle{ n }[/math] is composite:

- there exists a number [math]\displaystyle{ a }[/math] such that [math]\displaystyle{ a^{n-1}\not\equiv 1\pmod n }[/math].

The Miller-Rabin primality test is based on an additional way to prove that a number [math]\displaystyle{ n }[/math] is composite:

- 1 has a nontrivial square root, that is, a number [math]\displaystyle{ a }[/math] satisfying that [math]\displaystyle{ a^2\equiv 1\pmod n }[/math] but [math]\displaystyle{ a\not\equiv \pm 1\pmod n }[/math].

The following theorem states that the existence of nontrivial square root of 1 is a valid proof of compositeness of [math]\displaystyle{ n }[/math].

Theorem - If [math]\displaystyle{ n\gt 2 }[/math] is prime, then [math]\displaystyle{ 1 }[/math] does not have a nontrivial square root.

Proof. Suppose [math]\displaystyle{ a }[/math] is a square root of 1, that is, [math]\displaystyle{ a^2\equiv1\pmod n }[/math]. Therefore,

- [math]\displaystyle{ (a-1)(a+1)=a^2-1\equiv 0\pmod n }[/math],

which means that [math]\displaystyle{ (a-1)(a+1)|n\, }[/math].

If [math]\displaystyle{ a\not\equiv \pm1\pmod n }[/math], then [math]\displaystyle{ n }[/math] divides neither [math]\displaystyle{ (a-1) }[/math] nor [math]\displaystyle{ (a+1) }[/math], which contradicts that [math]\displaystyle{ n }[/math] is prime and divides [math]\displaystyle{ (a-1)(a+1) }[/math].

- [math]\displaystyle{ \square }[/math]

The idea of Miller-Rabin test is to find either a Fermat proof of compositeness, or a nontrivial square root of 1.

Miller-Rabin Primality Test - Choose a uniformly random [math]\displaystyle{ a\in\{1,2,\ldots,n-1\} }[/math].

- Let [math]\displaystyle{ t }[/math] and [math]\displaystyle{ m }[/math] be such that [math]\displaystyle{ t\ge 1 }[/math], [math]\displaystyle{ m }[/math] is odd, and [math]\displaystyle{ n-1=2^tm }[/math].

- Let [math]\displaystyle{ a_0=a^m\bmod\, n\, }[/math]. For [math]\displaystyle{ i=1 }[/math] to [math]\displaystyle{ t }[/math], let [math]\displaystyle{ a_i=a_{i-1}^2 \bmod\, n }[/math].

- If [math]\displaystyle{ a_t\not\equiv 1\pmod n }[/math], then return "composite".

- If there is an [math]\displaystyle{ i }[/math], [math]\displaystyle{ 1\le i\le t }[/math], such that [math]\displaystyle{ a_i\equiv 1\pmod n }[/math] but [math]\displaystyle{ a_{i-1}\not\equiv \pm 1\pmod n }[/math], then return "composite".

- Else return "probably prime".

An easy inductive proof shows that [math]\displaystyle{ a_i=a^{2^im}\bmod\, n }[/math] for all [math]\displaystyle{ i }[/math], [math]\displaystyle{ 0\le i\le t }[/math]. In particular, [math]\displaystyle{ a_t\equiv a^{2^tm}=a^{n-1}\pmod n }[/math].

The original algorithm due to Miller is deterministic, which test all small [math]\displaystyle{ a }[/math] up to an [math]\displaystyle{ O(\log n) }[/math] order. The correctness of this deterministic algorithm relies on the unproven conjecture of Generalized Riemann hypothesis. It was observed by Rabin that the deterministic searching can be replaced by random sampling.

Line 4 of the algorithm is equivalent to that [math]\displaystyle{ a^{n-1}\not\equiv 1\pmod n }[/math], thus line 4 is just the Fermat test. If [math]\displaystyle{ n }[/math] passes the Fermat test, line 5 tries to find a nontrivial square root of 1 in form of [math]\displaystyle{ a^{2^im} }[/math].

If [math]\displaystyle{ n }[/math] is prime, then due to the Fermat little theorem and the fact that prime numbers do not have nontrivial square roots of 1, the conditions in line 4 and line 5 will never hold, thus the algorithm will return "probably prime". If [math]\displaystyle{ n }[/math] is a non-Carmichael composite, as in the Fermat test, line 4 returns "composite" with probability at least [math]\displaystyle{ 1/2 }[/math]. The only remaining case is when [math]\displaystyle{ n }[/math] is a Carmichael number.

We pick the largest [math]\displaystyle{ j }[/math] such that there is a [math]\displaystyle{ b\in\mathbb{Z}_{n}^* }[/math] satisfying [math]\displaystyle{ b^{2^jm}\equiv -1\pmod n }[/math], and define

- [math]\displaystyle{ B=\{a\in\mathbb{Z}_n^*\mid a^{2^jm}\equiv \pm 1\pmod n\} }[/math].

Theorem - If [math]\displaystyle{ n }[/math] is a Carmichael number, then the [math]\displaystyle{ B }[/math] defined as above is a proper subgroup of [math]\displaystyle{ \mathbb{Z}_n^* }[/math].

Since [math]\displaystyle{ j }[/math] is fixed, it is easy to verify that [math]\displaystyle{ B }[/math] is closed under multiplication, thus [math]\displaystyle{ B }[/math] is a subgroup of [math]\displaystyle{ \mathbb{Z}_n^* }[/math]. It is a bit complicated to show that [math]\displaystyle{ \mathbb{Z}_n^*\setminus B }[/math] is nonempty and we will not give the full proof here.

The accuracy of Miller-Rabin test on Carmichael numbers is implied by this theorem. Suppose [math]\displaystyle{ n }[/math] is a Carmichael number. We call an [math]\displaystyle{ a\in\{1,2,\ldots, n-1\} }[/math] a liar if it fools the test in line 5, i.e. there is no such [math]\displaystyle{ i }[/math] that [math]\displaystyle{ a^{2^im}\equiv 1\pmod n }[/math] but [math]\displaystyle{ a^{2^{i-1}m}\not\equiv \pm 1\pmod n }[/math].

We claim that all liars belong to [math]\displaystyle{ B }[/math]. Due to the maximality of [math]\displaystyle{ j }[/math], [math]\displaystyle{ a^{2^im}\not\equiv -1 }[/math] for all [math]\displaystyle{ i\gt j }[/math]. Since [math]\displaystyle{ n }[/math] is a Carmichael number, [math]\displaystyle{ a^{n-1}\equiv 1\pmod n }[/math], if [math]\displaystyle{ a }[/math] is a liar then it mus hold that [math]\displaystyle{ a^{2^im}\equiv 1\pmod n }[/math] for all [math]\displaystyle{ i\gt j }[/math] or otherwise [math]\displaystyle{ a }[/math] cannot be a liar. In particular, [math]\displaystyle{ a^{2^{j+1}m}\equiv 1\pmod n }[/math]. Again, since [math]\displaystyle{ a }[/math] is a liar, [math]\displaystyle{ a^{2^jm}\equiv \pm1\pmod n }[/math], therefore [math]\displaystyle{ a\in B }[/math].

We show that when [math]\displaystyle{ n }[/math] is a Carmichael number, all numbers [math]\displaystyle{ a }[/math] that fools the Miller-Rabin test belongs to a proper subgroup of [math]\displaystyle{ \mathbb{Z}_n^* }[/math], therefore the Miller-Rabin test returns a "composite" with probability [math]\displaystyle{ 1/2 }[/math].

In conclusion,

- if [math]\displaystyle{ n }[/math] is prime, the algorithm returns "probably prime";

- if [math]\displaystyle{ n }[/math] is a non-Carmichael composite, the algorithm returns "composite" in line 4 with probability at least [math]\displaystyle{ 1/2 }[/math];

- if [math]\displaystyle{ n }[/math] is a Carmichael number, the algorithm returns "composite" in line 5 with probability at least [math]\displaystyle{ 1/2 }[/math].

Graph Coloring

A coloring of a graph [math]\displaystyle{ G(V,E) }[/math] is a mapping [math]\displaystyle{ \sigma:V\rightarrow[q] }[/math] for some integer [math]\displaystyle{ q }[/math], satisfying that [math]\displaystyle{ \sigma(u)\neq \sigma(v) }[/math] for all [math]\displaystyle{ uv\in E }[/math].

The problem of deciding whether an input graph is colorable by some fixed number of colors is a classic problem in Computer Science. Denote by [math]\displaystyle{ \Delta }[/math] the maximum degree of [math]\displaystyle{ G }[/math].

- If [math]\displaystyle{ q\ge \Delta+1 }[/math], there always exists a coloring. Moreover, the coloring can be found by a simple greedy algorithm.

- If [math]\displaystyle{ q=\Delta }[/math], [math]\displaystyle{ G }[/math] has a coloring unless it contains a [math]\displaystyle{ (\Delta+1) }[/math]-clique or it is an odd cycle. (Brooks Theorem)

- If [math]\displaystyle{ q\lt \Delta }[/math], the problem of deciding whether [math]\displaystyle{ G }[/math] is [math]\displaystyle{ q }[/math]-colorable is NP-hard.

Sampling a graph coloring

We consider the problem of sampling a uniformly random coloring of a given graph.

Sampling a random coloring is at least as hard as deciding its existence, so we don't expect to solve the sampling problem when [math]\displaystyle{ q\lt \Delta }[/math]. The decision problem for the case [math]\displaystyle{ q=\Delta }[/math] is also nontrivial. Thus people are interested only in the case when [math]\displaystyle{ q\ge \Delta+1 }[/math].

Unlike sampling a number from [math]\displaystyle{ [n] }[/math] uniformly at random, which can be done by flipping a fair coin for [math]\displaystyle{ O(\log n) }[/math] times, sampling a coloring cannot be easily done, because of the constraint that any two adjacent vertices cannot have the same color. Such constraints (also called "structures") tremendously increase the difficulty of random sampling a combinatorial object as well as counting the number of combinatorial objects.

The following is a simple randomized algorithm for sampling colorings.

Sampling Graph Coloring - Start with an arbitrary coloring of [math]\displaystyle{ G(V,E) }[/math]. At each step:

- Pick a vertex [math]\displaystyle{ v\in V }[/math] and a color [math]\displaystyle{ c\in[q] }[/math] uniformly at random.

- Change the color of [math]\displaystyle{ v }[/math] to [math]\displaystyle{ c }[/math] if it results a valid coloring; do nothing if otherwise.

- Repeat this process for sufficiently many steps.

This very simple algorithm uses an important idea called random walks. There is a huge class of randomized algorithms for random sampling based on this principle.

The algorithm start with a fixed coloring, and "walks" to more and more random colorings as it runs. In finite steps, it will get "close" enough to a uniform random coloring. There are two issues:

- How do we measure the randomness of the coloring, or how doe we measure how close the current random coloring to the uniform random coloring?

- How many steps it takes to get close enough to a random coloring?

We will introduce the formal concepts addressing these issues in future lectures. We will introduce a beautiful theory of random walks, which is fundamental to the analysis of randomized algorithms.

The followings are the two most important conjectures regarding the graph coloring problem.

Conjecture - The above simple algorithm returns a nearly uniform random coloring in [math]\displaystyle{ O(n\ln n) }[/math] steps whenever [math]\displaystyle{ q\ge\Delta+2 }[/math].

- Random sampling a nearly uniform graph colorings can be done in polynomial time whenever [math]\displaystyle{ q\ge\Delta+1 }[/math].

These two conjectures are still open. We will not solve them in this class, but we will approach them by some important techniques for analyzing random walks.

Counting by sampling

Given a graph [math]\displaystyle{ G(V,E) }[/math] of [math]\displaystyle{ n }[/math] vertices, we want to compute the number of different colorings of graph [math]\displaystyle{ G }[/math]. This is a well-defined computational problem.

Enumerating all colorings will take exponential time in the worst case. Since we only need a number instead of a list of all colorings, we expect cleverer ways of computing this number than the brute force enumeration.

The problem of counting graph colorings has the following formulation. Let [math]\displaystyle{ A }[/math] be a [math]\displaystyle{ q\times q }[/math] matrix defined as

- [math]\displaystyle{ A=\begin{bmatrix} A_{00} & A_{01} & \cdots & A_{0,q-1}\\ A_{10} & A_{11} & \cdots & A_{1,q-1}\\ \vdots & \vdots & \ddots & \vdots\\ A_{q-1, 0} & A_{q-1, 1} & \cdots & A_{q-1,q-1}\\ \end{bmatrix} }[/math], where [math]\displaystyle{ A_{ij}=\begin{cases}0 & i=j \\ 1 & i\neq j \end{cases} }[/math].

The number of colorings for a given graph [math]\displaystyle{ G }[/math] is described by the following function:

- [math]\displaystyle{ Z_A(G)=\sum_{\sigma:V\rightarrow[q]}\prod_{(u,v)\in E}A_{\sigma(u),\sigma(v)} }[/math].

The sum enumerates all possible colorings, valid or invalid, and the product determines whether the present coloring is valid.

We can replace [math]\displaystyle{ A }[/math] with any [math]\displaystyle{ q\times q }[/math] matrix and talk about the computation of [math]\displaystyle{ Z_A(G) }[/math]. This gives us the Potts model in statistical physics. When [math]\displaystyle{ q=2 }[/math], it becomes the famous Ising model.

The function [math]\displaystyle{ Z_A(G) }[/math] is called the partition function in statistical physics. Virtually all interesting information about a statistical physics system can be deduced from its partition function value.

The partition function can also be used to model many natural counting problems. For example, when [math]\displaystyle{ q=2 }[/math] and

- [math]\displaystyle{ A=\begin{bmatrix} A_{00} & A_{01} \\ A_{10} & A_{11} \\ \end{bmatrix}= \begin{bmatrix} 1 & 1\\ 1 & 0 \\ \end{bmatrix} }[/math]

The partition function [math]\displaystyle{ Z_A(G) }[/math] is the total number of independent sets of graph [math]\displaystyle{ G }[/math].

There is a complexity class for counting problems, named #P. The NP class is for decision problems. The #P class is the analog of the NP class for counting problems. Many counting problems are known to be #P-hard, including counting graph colorings or independent sets.

It is believed that #P-hard problems do not have polynomial time algorithms. Actually it is believed that #P-hard problems are harder than NP-hard problems, partly because the existence of polynomial time algorithm for any #P-hard problem implies that P=NP, but not vice versa.

Later in this class, we will show that although the exact counting is computationally hard, for #P-hard problems such as counting graph colorings, there exist randomized algorithms which can approximately compute the problems. These algorithms are based on random samplings obtained from simulating a random walk. This powerful technique is called the Monte Carlo Markov Chain (MCMC) method.

Identity Checking

Consider the following scenario: Two datacenters located in two far apart cities (Beijing and Nanjing), each holds a copy of a very large database. We want to check whether the two copies are identical with minimum communication between two datacenters. The cost of local computation is ignored because it is incomparable to the cost caused by remote communication.

Communication Complexity

In 1979, Andrew Yao introduced the communication complexity to model the problems of this kind. The communication complexity is much more general, which does not just consider the problem of checking identity but any problems that can be computed by communication.

Alice and Bob are two entities. Alice has a private input [math]\displaystyle{ x }[/math] and Bob has a private input [math]\displaystyle{ y }[/math]. Together they want to compute a function [math]\displaystyle{ f(x,y) }[/math] by communicating with each other. The communication follows a predefined communication protocol (the "algorithm" in this model) which depends only on the problem [math]\displaystyle{ f }[/math] but not on the inputs. The complexity of a communication protocol is measured by the number of bits communicated between Alice and Bob in the worst case.

The problem of checking identity is formally defined by the function EQ as follows: [math]\displaystyle{ \mathrm{EQ}:\{0,1\}^n\times\{0,1\}^n\rightarrow\{0,1\} }[/math] and for any [math]\displaystyle{ x,y\in\{0,1\}^n }[/math],

- [math]\displaystyle{ \mathrm{EQ}(x,y)= \begin{cases} 1& \mbox{if } x=y,\\ 0& \mbox{otherwise.} \end{cases} }[/math]

A trivial way to solve EQ is to let Bob send [math]\displaystyle{ y }[/math] to Alice. Supposed that [math]\displaystyle{ x,y\in\{0,1\}^n }[/math], this costs [math]\displaystyle{ n }[/math] bits of communications.

It is known that for deterministic communication protocols, this is the best we can get for computing EQ.

Theorem (Yao 1979) - Any deterministic communication protocol computing EQ on two [math]\displaystyle{ n }[/math]-bit strings costs [math]\displaystyle{ n }[/math] bits of communication in the worst-case.

This theorem is much more nontrivial to prove than it looks, because Alice and Bob are allowed to interact with each other in arbitrary ways. The proof of this theorem in Yao's 1979 paper initiates the field of communication complexity.

Randomized communication protocols

If the randomness is allowed, we can solve this problem up to a tolerable probabilistic error with significantly less communications. We treat the inputs [math]\displaystyle{ x,y\in\{0,1\}^n }[/math] as two binary numbers [math]\displaystyle{ x,y\in[2^n] }[/math]. The randomized communication protocol is as follows:

A randomized protocol for EQ Alice does:

- for some parameter [math]\displaystyle{ k }[/math] (to be specified),

- choose uniformly at random a prime [math]\displaystyle{ p\in[k] }[/math];

- send [math]\displaystyle{ p }[/math] and [math]\displaystyle{ x\bmod p }[/math] to Bob;

Upon receiving [math]\displaystyle{ p }[/math] and [math]\displaystyle{ x\bmod p }[/math], Bob does:

- If [math]\displaystyle{ x\bmod p=y\bmod p }[/math] return "probably identical"; else return "distinct".

- for some parameter [math]\displaystyle{ k }[/math] (to be specified),

The number of bits to be communicated is [math]\displaystyle{ O(\log k) }[/math].

This technique is called fingerprinting. The random prime [math]\displaystyle{ p }[/math] induces a random fingerprinting function [math]\displaystyle{ \bmod p }[/math], so that the [math]\displaystyle{ x\bmod p }[/math] and [math]\displaystyle{ y\bmod p }[/math] are like the "fingerprints" of [math]\displaystyle{ x }[/math] and [math]\displaystyle{ y }[/math]. Like the fingerprints of people, the same input must have the same fingerprints and the chance that two distinct inputs have the same fingerprint is small.

If [math]\displaystyle{ x=y }[/math], then it is trivial to see that the output is "probably identical". For the case that [math]\displaystyle{ x\neq y }[/math], it is probable that Alice pick a wrong prime [math]\displaystyle{ p }[/math] so that [math]\displaystyle{ x\equiv y\pmod p }[/math] and the protocol incorrectly outputs "probably identical", however, it can be proved that this probability of error is polynomially small [math]\displaystyle{ O(\frac{1}{\mathrm{poly}(n)}) }[/math] for some polynomially large [math]\displaystyle{ k=\mathrm{poly}(n) }[/math].

Therefore, this randomized protocol solves the problem up to a one-sided error [math]\displaystyle{ O(\frac{1}{\mathrm{poly}(n)}) }[/math] with communication cost [math]\displaystyle{ O(\log k)=O(\log n) }[/math].

In the above algorithm, the random coin flips made by Alice are private to herself but not available to Bob.

We may assume that both Alice and Bob observe the same random coin flips with no additional costs. This model is called public coins (so the previous algorithm is actually communication with private coins). With this stronger assumption, we can have new randomized protocol.

We treat the inputs [math]\displaystyle{ x,y\in\{0,1\}^n }[/math] as vectors of [math]\displaystyle{ n }[/math] Boolean entries. We define the inner product [math]\displaystyle{ \langle x,y\rangle }[/math] for Boolean vectors [math]\displaystyle{ x,y\in\{0,1\}^n }[/math] as [math]\displaystyle{ \langle x,y\rangle=\left(\sum_{i=1}^nx_iy_i\right)\bmod 2 }[/math], which has an equivalent definition [math]\displaystyle{ \langle x,y\rangle=\bigoplus_{i=1}^n(x_i\wedge y_i) }[/math], where [math]\displaystyle{ \oplus }[/math] is the Boolean operator XOR.

A randomized (public coin) protocol for EQ - Suppose that a uniformly random Boolean vector [math]\displaystyle{ r\in\{0,1\}^n }[/math] is known to both Alice and Bob.

Alice does:

- send [math]\displaystyle{ \langle x, r\rangle }[/math] to Bob;

Upon receiving [math]\displaystyle{ \langle x, r\rangle }[/math], Bob does:

- If [math]\displaystyle{ \langle x, r\rangle=\langle y, r\rangle }[/math] return "probably identical"; else return "distinct".

Same as before, if [math]\displaystyle{ x=y }[/math], the output is always correct. If [math]\displaystyle{ x\neq y }[/math], it is easy to check that the protocol gives a wrong anser with probability at most [math]\displaystyle{ 1/2 }[/math]. By repeatedly running the protocol for a number of times (with independent public coins [math]\displaystyle{ r }[/math]), the error probability can be reduced significantly.