Advanced Algorithms (Fall 2019)/Concentration of measure: Difference between revisions

imported>Etone |


Applying Azuma's inequality to the martingale <math>Y_0,\ldots,Y_n</math> with respect to <math>X_1,\ldots, X_n</math> proves Hoeffding's inequality.
}}

==The Bounded Difference Method==
Combining Azuma's inequality with the construction of Doob martingales, we obtain the powerful ''Bounded Difference Method'' for concentration of measure.

=== For arbitrary random variables ===
Given a sequence of random variables <math>X_1,\ldots,X_n</math> and a function <math>f</math>, the Doob sequence constructs a martingale from them. Combining this construction with Azuma's inequality, we get a very powerful theorem called "the method of averaged bounded differences", which bounds the concentration of an arbitrary function of arbitrary random variables (not necessarily a martingale).

{{Theorem
|Theorem (Method of averaged bounded differences)|
:Let <math>\boldsymbol{X}=(X_1,\ldots, X_n)</math> be arbitrary random variables and let <math>f</math> be a function of <math>X_1,\ldots, X_n</math> satisfying that, for all <math>1\le i\le n</math>,
::<math>
|\mathbf{E}[f(\boldsymbol{X})\mid X_1,\ldots,X_i]-\mathbf{E}[f(\boldsymbol{X})\mid X_1,\ldots,X_{i-1}]|\le c_i.
</math>
:Then
::<math>\begin{align}
\Pr\left[|f(\boldsymbol{X})-\mathbf{E}[f(\boldsymbol{X})]|\ge t\right]\le 2\exp\left(-\frac{t^2}{2\sum_{i=1}^nc_i^2}\right).
\end{align}</math>
}}

{{Proof| Define the Doob martingale sequence <math>Y_0,Y_1,\ldots,Y_n</math> by setting <math>Y_0=\mathbf{E}[f(X_1,\ldots,X_n)]</math> and, for <math>1\le i\le n</math>, <math>Y_i=\mathbf{E}[f(X_1,\ldots,X_n)\mid X_1,\ldots,X_i]</math>. Then the above theorem is a restatement of Azuma's inequality for <math>Y_0,Y_1,\ldots,Y_n</math>.
}}

=== For independent random variables ===
The condition of bounded averaged differences is usually hard to check. This severely limits the usefulness of the method. To overcome this, we introduce a property which is much easier to check, called the Lipschitz condition.

{{Theorem
|Definition (Lipschitz condition)|
:A function <math>f(x_1,\ldots,x_n)</math> satisfies the Lipschitz condition, if for every <math>1\le i\le n</math>, any <math>x_1,\ldots,x_n</math> and any <math>y_i</math>,
::<math>\begin{align}
|f(x_1,\ldots,x_{i-1},x_i,x_{i+1},\ldots,x_n)-f(x_1,\ldots,x_{i-1},y_i,x_{i+1},\ldots,x_n)|\le 1.
\end{align}</math>
}}

In other words, the function satisfies the Lipschitz condition if an arbitrary change in the value of any one argument does not change the value of the function by more than 1.

The difference of 1 can be replaced by arbitrary constants, which gives a generalized version of the Lipschitz condition.

{{Theorem
|Definition (Lipschitz condition, general version)|
:A function <math>f(x_1,\ldots,x_n)</math> satisfies the Lipschitz condition with constants <math>c_i</math>, <math>1\le i\le n</math>, if for any <math>x_1,\ldots,x_n</math> and any <math>y_i</math>,
::<math>\begin{align}
|f(x_1,\ldots,x_{i-1},x_i,x_{i+1},\ldots,x_n)-f(x_1,\ldots,x_{i-1},y_i,x_{i+1},\ldots,x_n)|\le c_i.
\end{align}</math>
}}
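For a function over small finite domains, the Lipschitz constants in the definition above can be found by exhaustive search. The following is only an illustrative sketch: the helper `lipschitz_constants` and the example function `count_distinct` are hypothetical names that do not appear in the notes.

```python
from itertools import product


def lipschitz_constants(f, domains):
    """Return c, where c[i] is the largest change in f caused by altering only argument i.

    `domains` is a list of finite sequences, one per argument of f
    (a hypothetical helper for illustration, not part of the notes).
    """
    n = len(domains)
    c = [0] * n
    for x in product(*domains):
        fx = f(*x)
        for i in range(n):
            for y in domains[i]:
                # Replace only the i-th coordinate by y.
                changed = x[:i] + (y,) + x[i + 1:]
                c[i] = max(c[i], abs(fx - f(*changed)))
    return c


def count_distinct(*xs):
    """Example function: the number of distinct values among the arguments."""
    return len(set(xs))


print(lipschitz_constants(count_distinct, [range(3)] * 4))
```

The search takes time exponential in <math>n</math>, so it is only a sanity check for tiny instances; in practice the constants are obtained by a direct argument, as in the applications of this section.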

The following "method of bounded differences" can be developed for functions satisfying the Lipschitz condition. Unfortunately, in order to derive the condition of averaged bounded differences from the Lipschitz condition, we have to restrict the method to independent random variables.

{{Theorem
|Corollary (Method of bounded differences)|
:Let <math>\boldsymbol{X}=(X_1,\ldots, X_n)</math> be <math>n</math> '''independent''' random variables and let <math>f</math> be a function satisfying the Lipschitz condition with constants <math>c_i</math>, <math>1\le i\le n</math>. Then
::<math>\begin{align}
\Pr\left[|f(\boldsymbol{X})-\mathbf{E}[f(\boldsymbol{X})]|\ge t\right]\le 2\exp\left(-\frac{t^2}{2\sum_{i=1}^nc_i^2}\right).
\end{align}</math>
}}

{{Proof| For convenience, we denote <math>\boldsymbol{X}_{[i,j]}=(X_i,X_{i+1},\ldots, X_j)</math> for any <math>1\le i\le j\le n</math>.

We first show that the Lipschitz condition with constants <math>c_i</math>, <math>1\le i\le n</math>, implies another condition called the averaged Lipschitz condition (ALC): for any <math>a_i,b_i</math>, <math>1\le i\le n</math>,
:<math>
\left|\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]},X_i=a_i\right]-\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]},X_i=b_i\right]\right|\le c_i.
</math>

This condition in turn implies the averaged bounded difference condition: for all <math>1\le i\le n</math>,
::<math>
\left|\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i]}\right]-\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]}\right]\right|\le c_i.
</math>

The corollary then follows by applying the method of averaged bounded differences.

For any <math>a</math>, by the law of total expectation,
:<math>
\begin{align}
&\quad\, \mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]},X_i=a\right]\\
&=\sum_{a_{i+1},\ldots,a_n}\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]},X_i=a, \boldsymbol{X}_{[i+1,n]}=\boldsymbol{a}_{[i+1,n]}\right]\cdot\Pr\left[\boldsymbol{X}_{[i+1,n]}=\boldsymbol{a}_{[i+1,n]}\mid \boldsymbol{X}_{[1,i-1]},X_i=a\right]\\
&=\sum_{a_{i+1},\ldots,a_n}\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]},X_i=a, \boldsymbol{X}_{[i+1,n]}=\boldsymbol{a}_{[i+1,n]}\right]\cdot\Pr\left[\boldsymbol{X}_{[i+1,n]}=\boldsymbol{a}_{[i+1,n]}\right] \qquad (\mbox{independence})\\
&= \sum_{a_{i+1},\ldots,a_n} f(\boldsymbol{X}_{[1,i-1]},a,\boldsymbol{a}_{[i+1,n]})\cdot\Pr\left[\boldsymbol{X}_{[i+1,n]}=\boldsymbol{a}_{[i+1,n]}\right].
\end{align}
</math>

Setting <math>a=a_i</math> and <math>a=b_i</math> in turn and taking the difference, we obtain
:<math>
\begin{align}
&\quad\, \left|\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]},X_i=a_i\right]-\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]},X_i=b_i\right]\right|\\
&=\left|\sum_{a_{i+1},\ldots,a_n}\left(f(\boldsymbol{X}_{[1,i-1]},a_i,\boldsymbol{a}_{[i+1,n]})-f(\boldsymbol{X}_{[1,i-1]},b_i,\boldsymbol{a}_{[i+1,n]})\right)\Pr\left[\boldsymbol{X}_{[i+1,n]}=\boldsymbol{a}_{[i+1,n]}\right]\right|\\
&\le \sum_{a_{i+1},\ldots,a_n}\left|f(\boldsymbol{X}_{[1,i-1]},a_i,\boldsymbol{a}_{[i+1,n]})-f(\boldsymbol{X}_{[1,i-1]},b_i,\boldsymbol{a}_{[i+1,n]})\right|\Pr\left[\boldsymbol{X}_{[i+1,n]}=\boldsymbol{a}_{[i+1,n]}\right]\\
&\le \sum_{a_{i+1},\ldots,a_n}c_i\Pr\left[\boldsymbol{X}_{[i+1,n]}=\boldsymbol{a}_{[i+1,n]}\right] \qquad (\mbox{Lipschitz condition})\\
&=c_i.
\end{align}
</math>

Thus, the Lipschitz condition implies the ALC. We then deduce the averaged bounded difference condition from the ALC.

By the law of total expectation,
:<math>
\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]}\right]=\sum_{a}\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]},X_i=a\right]\cdot\Pr[X_i=a\mid \boldsymbol{X}_{[1,i-1]}].
</math>

Since the conditional probabilities <math>\Pr\left[X_i=a\mid \boldsymbol{X}_{[1,i-1]}\right]</math> sum to 1 over all <math>a</math>, we can trivially write <math>\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i]}\right]</math> as
:<math>
\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i]}\right]=\sum_{a}\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i]}\right]\cdot\Pr\left[X_i=a\mid \boldsymbol{X}_{[1,i-1]}\right].
</math>

Hence, the difference is
:<math>
\begin{align}
&\quad \left|\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i]}\right]-\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]}\right]\right|\\
&=\left|\sum_{a}\left(\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i]}\right]-\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]},X_i=a\right]\right)\cdot\Pr\left[X_i=a\mid \boldsymbol{X}_{[1,i-1]}\right]\right| \\
&\le \sum_{a}\left|\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i]}\right]-\mathbf{E}\left[f(\boldsymbol{X})\mid \boldsymbol{X}_{[1,i-1]},X_i=a\right]\right|\cdot\Pr\left[X_i=a\mid \boldsymbol{X}_{[1,i-1]}\right] \\
&\le \sum_a c_i\Pr\left[X_i=a\mid \boldsymbol{X}_{[1,i-1]}\right] \qquad (\mbox{due to ALC})\\
&=c_i.
\end{align}
</math>

The averaged bounded difference condition is implied. Applying the method of averaged bounded differences, the corollary follows.
}}
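The implication established in the proof can be checked numerically on a tiny instance by computing the Doob martingale exactly. The sketch below assumes, purely for illustration, that each <math>X_j</math> is independent and uniform over a small finite domain; the names `doob_value`, `max_averaged_differences` and `count_distinct` are hypothetical and not taken from the notes.

```python
from fractions import Fraction
from itertools import product


def doob_value(f, domains, prefix):
    """E[f(X) | X_1..X_i = prefix], assuming independent X_j uniform on domains[j]."""
    rest = domains[len(prefix):]
    total = Fraction(0)
    count = 0
    # Average f over all equally likely completions of the prefix.
    for suffix in product(*rest):
        total += f(*(prefix + suffix))
        count += 1
    return total / count


def max_averaged_differences(f, domains):
    """For each i, the largest |Y_i - Y_{i-1}| over all outcomes of X_1..X_i."""
    n = len(domains)
    worst = [Fraction(0)] * n
    for i in range(1, n + 1):
        for prefix in product(*domains[:i]):
            diff = abs(doob_value(f, domains, prefix)
                       - doob_value(f, domains, prefix[:-1]))
            worst[i - 1] = max(worst[i - 1], diff)
    return worst


def count_distinct(*xs):
    """Example function with Lipschitz constants c_i = 1."""
    return len(set(xs))


domains = [range(3)] * 3
print(max_averaged_differences(count_distinct, domains))
```

Because `count_distinct` satisfies the Lipschitz condition with all <math>c_i=1</math> and the variables are independent, every entry of the returned list is at most 1, in agreement with the averaged bounded difference condition.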

=== Applications ===
==== Occupancy problem ====
Throwing <math>m</math> balls uniformly and independently at random into <math>n</math> bins, we ask for the occupancies of bins by the balls. In particular, we are interested in the number of empty bins.

This problem can be described equivalently as follows. Let <math>f:[m]\rightarrow[n]</math> be a uniform random function from <math>[m]</math> to <math>[n]</math>. We ask for the number of <math>i\in[n]</math> such that <math>f^{-1}(i)</math> is empty.

For any <math>i\in[n]</math>, let <math>X_i</math> indicate the emptiness of bin <math>i</math>. Let <math>X=\sum_{i=1}^nX_i</math> be the number of empty bins. Then
:<math>
\mathbf{E}[X_i]=\Pr[\mbox{bin }i\mbox{ is empty}]=\left(1-\frac{1}{n}\right)^m.
</math>
By the linearity of expectation,
:<math>
\mathbf{E}[X]=\sum_{i=1}^n\mathbf{E}[X_i]=n\left(1-\frac{1}{n}\right)^m.
</math>

We want to know how <math>X</math> deviates from this expectation. The complication here is that the <math>X_i</math> are not independent. So we alternatively look at a sequence of independent random variables <math>Y_1,\ldots, Y_m</math>, where <math>Y_j\in[n]</math> represents the bin into which the <math>j</math>th ball falls. Clearly <math>X</math> is a function of <math>Y_1,\ldots, Y_m</math>.

We then observe that changing the value of any <math>Y_j</math> can change the value of <math>X</math> by at most 1, because moving one ball can empty at most one bin and fill at most one bin.

Thus, as a function of the independent random variables <math>Y_1,\ldots, Y_m</math>, <math>X</math> satisfies the Lipschitz condition. Applying the method of bounded differences, it holds that
:<math>
\Pr\left[\left|X-n\left(1-\frac{1}{n}\right)^m\right|\ge t\sqrt{m}\right]=\Pr[|X-\mathbf{E}[X]|\ge t\sqrt{m}]\le 2e^{-t^2/2}.
</math>

Thus, for sufficiently large <math>n</math> and <math>m</math>, the number of empty bins is tightly concentrated around <math>n\left(1-\frac{1}{n}\right)^m\approx \frac{n}{e^{m/n}}</math>.
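The bound can be compared against a simulation. The following is a minimal sketch, not part of the notes: the function names, the seed and the trial count are arbitrary choices for illustration, and bins are numbered from 0 for convenience.

```python
import math
import random


def empty_bins(assignment, n):
    """Number of bins in range(n) that receive no ball; assignment[j] is the bin of ball j."""
    return n - len(set(assignment))


def expected_empty_bins(m, n):
    """E[X] = n * (1 - 1/n)^m."""
    return n * (1 - 1 / n) ** m


def tail_bound(t):
    """Right-hand side 2 * exp(-t^2 / 2) of the bound for a deviation of t * sqrt(m)."""
    return 2 * math.exp(-t * t / 2)


def empirical_tail(m, n, t, trials, seed=0):
    """Fraction of trials in which |X - E[X]| >= t * sqrt(m)."""
    rng = random.Random(seed)
    mean = expected_empty_bins(m, n)
    threshold = t * math.sqrt(m)
    hits = 0
    for _ in range(trials):
        balls = [rng.randrange(n) for _ in range(m)]
        if abs(empty_bins(balls, n) - mean) >= threshold:
            hits += 1
    return hits / trials


print(empirical_tail(50, 50, 2.0, 200), "<=", tail_bound(2.0))
```

In such experiments the observed tail frequency is typically far below <math>2e^{-t^2/2}</math>, which reflects that the method of bounded differences uses only the Lipschitz constants and no finer information about <math>X</math>.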

==== Pattern Matching ====
Let <math>\boldsymbol{X}=(X_1,\ldots,X_n)</math> be a sequence of characters chosen independently and uniformly at random from an alphabet <math>\Sigma</math>, where <math>m=|\Sigma|</math>. Let <math>\pi\in\Sigma^k</math> be an arbitrarily fixed string of <math>k</math> characters from <math>\Sigma</math>, called a ''pattern''. Let <math>Y</math> be the number of occurrences of the pattern <math>\pi</math> as a substring of the random string <math>\boldsymbol{X}</math>.

By the linearity of expectation, it is obvious that
:<math>
\mathbf{E}[Y]=(n-k+1)\left(\frac{1}{m}\right)^k.
</math>

We now look at the concentration of <math>Y</math>. The complication again lies in the dependencies between the matches. Yet we will see that <math>Y</math> is tightly concentrated around its expectation if <math>k</math> is relatively small compared to <math>n</math>.

For a fixed pattern <math>\pi</math>, the random variable <math>Y</math> is a function of the independent random variables <math>(X_1,\ldots,X_n)</math>. Any character <math>X_i</math> participates in no more than <math>k</math> matches, thus changing the value of any <math>X_i</math> can affect the value of <math>Y</math> by at most <math>k</math>. Hence <math>Y</math> satisfies the Lipschitz condition with constant <math>k</math>. Applying the method of bounded differences,
:<math>
\Pr\left[\left|Y-\frac{n-k+1}{m^k}\right|\ge tk\sqrt{n}\right]=\Pr\left[\left|Y-\mathbf{E}[Y]\right|\ge tk\sqrt{n}\right]\le 2e^{-t^2/2}.
</math>
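The Lipschitz constant <math>k</math> used above can be checked on small strings. The sketch below counts overlapping occurrences and measures the largest change caused by altering a single character; the function names are hypothetical and chosen only for this illustration.

```python
def count_occurrences(text, pattern):
    """Number of (possibly overlapping) occurrences of pattern as a substring of text."""
    k = len(pattern)
    return sum(1 for i in range(len(text) - k + 1) if text[i:i + k] == pattern)


def expected_occurrences(n, k, m):
    """E[Y] = (n - k + 1) / m^k for a uniform random string of length n over m symbols."""
    return (n - k + 1) / m ** k


def max_change_from_one_character(text, pattern, alphabet):
    """Largest change in the occurrence count when exactly one character of text is replaced."""
    base = count_occurrences(text, pattern)
    worst = 0
    for i in range(len(text)):
        for ch in alphabet:
            changed = text[:i] + ch + text[i + 1:]
            worst = max(worst, abs(count_occurrences(changed, pattern) - base))
    return worst


print(count_occurrences("aaaa", "aa"))
print(max_change_from_one_character("aaaa", "aa", "ab"))
```

For the text `aaaa` and the pattern `aa`, replacing the second character destroys two overlapping matches at once, so the constant <math>k=2</math> is attained in this instance.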

==== Combining unit vectors ====
Let <math>u_1,\ldots,u_n</math> be <math>n</math> unit vectors from some normed space. That is, <math>\|u_i\|=1</math> for any <math>1\le i\le n</math>, where <math>\|\cdot\|</math> denotes the vector norm (e.g. <math>\ell_1,\ell_2,\ell_\infty</math>) of the space.

Let <math>\epsilon_1,\ldots,\epsilon_n\in\{-1,+1\}</math> be independently chosen and <math>\Pr[\epsilon_i=-1]=\Pr[\epsilon_i=1]=1/2</math>.

Let
:<math>v=\epsilon_1u_1+\cdots+\epsilon_nu_n,
</math>
and
:<math>
X=\|v\|.
</math>

This kind of construction is very useful in combinatorial proofs of metric problems. We will show that by this construction, the random variable <math>X</math> is well concentrated around its mean.

<math>X</math> is a function of the independent random variables <math>\epsilon_1,\ldots,\epsilon_n</math>.
By the triangle inequality for norms, it is easy to verify that changing the sign of a unit vector <math>u_i</math> can change the value of <math>X</math> by at most 2, thus <math>X</math> satisfies the Lipschitz condition with constant 2. The concentration result follows by applying the method of bounded differences:
:<math>
\Pr[|X-\mathbf{E}[X]|\ge 2t\sqrt{n}]\le 2e^{-t^2/2}.
</math>
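The claim that one sign flip changes <math>X</math> by at most 2 can be checked directly for concrete vectors. The following sketch uses the <math>\ell_2</math> norm as an assumed default; the function names and the sample vectors are hypothetical and serve only as an illustration.

```python
import math


def l2_norm(v):
    """Euclidean norm of a sequence of numbers."""
    return math.sqrt(sum(x * x for x in v))


def signed_sum_norm(signs, vectors, norm=l2_norm):
    """X = || sum_i signs[i] * vectors[i] || for the given norm."""
    dim = len(vectors[0])
    v = [sum(s * u[d] for s, u in zip(signs, vectors)) for d in range(dim)]
    return norm(v)


def max_change_from_one_flip(signs, vectors, norm=l2_norm):
    """Largest change in X when exactly one sign is flipped."""
    base = signed_sum_norm(signs, vectors, norm)
    worst = 0.0
    for i in range(len(signs)):
        flipped = list(signs)
        flipped[i] = -flipped[i]
        worst = max(worst, abs(signed_sum_norm(flipped, vectors, norm) - base))
    return worst


unit_vectors = [(1.0, 0.0), (0.0, 1.0), (1.0, 0.0)]
print(max_change_from_one_flip([1, 1, 1], unit_vectors))
```

Any other norm can be passed through the `norm` parameter, provided the input vectors have norm 1 under it; the bound of 2 then follows from the triangle inequality exactly as in the text.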

Latest revision as of 06:10, 8 October 2019

Chernoff Bound

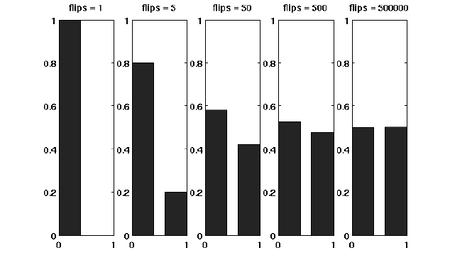

Suppose that we have a fair coin. If we toss it once, then the outcome is completely unpredictable. But if we toss it, say, 1000 times, then the number of HEADs is very likely to be around 500. This phenomenon, as illustrated in the following figure, is called the concentration of measure. The Chernoff bound is an inequality that characterizes the concentration phenomenon for the sum of independent trials.
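The coin-tossing claim can be made concrete with an exact computation. This is a small sketch, with the function name and the window of 450 to 550 heads chosen only as an illustration of "around 500".

```python
from math import comb


def prob_heads_between(n, lo, hi):
    """Exact Pr[lo <= number of heads <= hi] for n independent fair coin tosses."""
    return sum(comb(n, k) for k in range(lo, hi + 1)) / 2 ** n


# Probability that 1000 fair tosses give between 450 and 550 heads.
print(prob_heads_between(1000, 450, 550))
```

The printed probability exceeds 0.99, even though the window covers only about a tenth of the possible outcomes.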

Before formally stating the Chernoff bound, let's introduce the moment generating function.

Moment generating functions

The more we know about the moments of a random variable

Definition - The moment generating function of a random variable


By Taylor's expansion and the linearity of expectations,

The moment generating function

The Chernoff bound

The Chernoff bounds are exponentially sharp tail inequalities for the sum of independent trials.

The bounds are obtained by applying Markov's inequality to the moment generating function of the sum of independent trials, with some appropriate choice of the parameter

Chernoff bound (the upper tail) - Let

- Then for any

- Let

Proof. For any where the last step follows by Markov's inequality.

Computing the moment generating function

Let

We bound the moment generating function for each individual

where in the last step we apply the Taylor's expansion so that

Therefore,

Thus, we have shown that for any

For any

The idea of the proof is actually quite clear: we apply Markov's inequality to

We then proceed to the lower tail, the probability that the random variable deviates below the mean value:

Chernoff bound (the lower tail) - Let

- Then for any

- Let

Proof. For any For any

Useful forms of the Chernoff bounds

Some useful special forms of the bounds can be derived directly from the above general forms of the bounds. We now know better why we say that the bounds are exponentially sharp.

Useful forms of the Chernoff bound - Let

- 1. for

- 2. for

- Let

Proof. To obtain the bounds in (1), we need to show that for To obtain the bound in (2), let

Applications to balls-into-bins

Throwing

Now we give a more "advanced" analysis by using Chernoff bounds.

For any

Let

Then the expected load of bin

For the case

Note that

The

When

Let

Thus,

Applying the union bound, the probability that there exists a bin with load

Therefore, for

The

When

We can apply an easier form of the Chernoff bounds,

By the union bound, the probability that there exists a bin with load

Therefore, for

Martingales

"Martingale" originally refers to a betting strategy in which the gambler doubles his bet after every loss. Assuming unlimited wealth, this strategy is guaranteed to eventually have a positive net profit. For example, starting from an initial stake 1, after

which is a positive number.

However, the assumption of unlimited wealth is unrealistic. For limited wealth, with geometrically increasing bet, it is very likely to end up bankrupt. You should never try this strategy in real life.
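The effect of limited wealth can be illustrated by simulation. The sketch below assumes fair bets (each bet is won with probability 1/2 and pays even money) and a gambler who stops at the first win or when the next doubled bet is no longer affordable; these modelling choices and the name `play_doubling` are assumptions made for illustration.

```python
import random


def play_doubling(wealth, rng, max_rounds=1000):
    """Play the doubling strategy once and return the net profit.

    Assumes fair even-money bets; the gambler starts with a bet of 1, doubles
    after each loss, and stops at the first win or when the bet is unaffordable.
    """
    start = wealth
    bet = 1
    for _ in range(max_rounds):
        if bet > wealth:
            break  # cannot afford the next doubled bet
        if rng.random() < 0.5:
            wealth += bet
            break  # first win: net profit is exactly 1
        wealth -= bet
        bet *= 2
    return wealth - start


rng = random.Random(0)
outcomes = [play_doubling(7, rng) for _ in range(2000)]
print(sorted(set(outcomes)), sum(outcomes) / len(outcomes))
```

With an initial wealth of 7, the gambler can afford bets of 1, 2 and 4, so each run ends with either a profit of 1 or a loss of the entire 7. Under the fair-bet assumption the expected profit is <math>\frac{7}{8}\cdot 1-\frac{1}{8}\cdot 7=0</math>: the frequent small gains are exactly offset by the rare total loss.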

Suppose that the gambler is allowed to use any strategy. His stake on the next bet is decided based on the results of all the bets so far. This gives us a highly dependent sequence of random variables

Definition (martingale) - A sequence of random variables


The martingale can be generalized to be with respect to another sequence of random variables.

Definition (martingale, general version) - A sequence of random variables


Therefore, a sequence

The purpose of this generalization is that we are usually more interested in a function of a sequence of random variables, rather than the sequence itself.

Azuma's Inequality

Azuma's inequality is a martingale tail inequality.

Azuma's Inequality - Let

- Then

- Let

Unlike the Chernoff bounds, there is no assumption of independence, which makes the martingale inequalities more useful.

The following bounded difference condition

says that the martingale

Azuma's inequality says that for any martingale satisfying the bounded difference condition, it is unlikely that the process wanders far from its starting point.

A special case is when the differences are bounded by a constant. The following corollary is directly implied by Azuma's inequality.

Corollary - Let

- Then

- Let

This corollary states that for any martingale sequence whose differences are bounded by a constant, the probability that it deviates

Generalization

Azuma's inequality can be generalized to a martingale with respect to another sequence.

Azuma's Inequality (general version) - Let

- Then

- Let

The Proof of Azuma's Inequality

We will only give the formal proof of the non-generalized version. The proof of the general version is almost identical, with the only difference that we work on random sequence

The proof of Azuma's inequality uses several ideas from the proof of the Chernoff bounds. We first observe that the total deviation of the martingale sequence can be represented as the sum of the differences in every step. Thus, as with the Chernoff bounds, we are looking for a bound on the deviation of a sum of random variables. The strategy of the proof is almost the same as for the Chernoff bounds: we first apply Markov's inequality to the moment generating function, then we bound the moment generating function, and at last we optimize the parameter of the moment generating function. However, unlike in the Chernoff bounds, the martingale differences are no longer independent. So we replace the use of independence in the Chernoff bound by the martingale property. The proof is detailed as follows.

In order to bound the probability of

Represent the deviation as the sum of differences

We define the martingale difference sequence: for

It holds that

The second to the last equation is due to the fact that

Let

The deviation

We then only need to upper bound the probability of the event

Apply Markov's inequality to the moment generating function

The event

This is exactly the same as what we did to prove the Chernoff bound. Next, we need to bound the moment generating function

Bound the moment generating functions

The moment generating function

The first and the last equations are due to the fundamental facts about conditional expectation which were proved in the first section.

We then upper bound the

Lemma - Let

- Let

Proof. Observe that for Since

By expanding both sides as Taylor's series, it can be verified that

Apply the above lemma to the random variable

We have already shown that its expectation

Back to our analysis of the expectation

Apply the same analysis to

Go back to the Markov's inequality,

We then only need to choose a proper

Optimization

By choosing

Thus, the probability

The upper tail of Azuma's inequality is proved. By replacing

The Doob martingales

The following definition describes a very general approach for constructing an important type of martingales.

Definition (The Doob sequence) - The Doob sequence of a function

- In particular,

- The Doob sequence of a function

The Doob sequence of a function defines a martingale. That is

for any

To prove this claim, we recall the definition that

where the second equation is due to the fundamental fact about conditional expectation introduced in the first section.

The Doob martingale describes a very natural procedure to determine a function value of a sequence of random variables. Suppose that we want to predict the value of a function

The following two Doob martingales arise in evaluating the parameters of random graphs.

- edge exposure martingale

- Let

- Let

- The sequence

- vertex exposure martingale

- Instead of revealing edges one at a time, we could reveal the set of edges connected to a given vertex, one vertex at a time. Suppose that the vertex set is

- Let

- The sequence

Chromatic number

The random graph

Theorem [Shamir and Spencer (1987)] - Let

- Let

Proof. Consider the vertex exposure martingale where each

is satisfied. Now apply the Azuma's inequality for the martingale

For

Hoeffding's Inequality

The following theorem states the so-called Hoeffding's inequality. It is a generalized version of the Chernoff bounds. Recall that the Chernoff bounds hold for the sum of independent trials. When the random variables are not trials, Hoeffding's inequality is useful, since it holds for the sum of any independent random variables whose ranges are bounded.

Hoeffding's inequality - Let

- Let

Proof. Define the Doob martingale sequence Apply Azuma's inequality for the martingale
