Randomized Algorithms (Spring 2010)/Tail inequalities

imported>WikiSysop m Protected "Randomized Algorithms (Spring 2010)/Tail inequalities" ([edit=sysop] (indefinite) [move=sysop] (indefinite)) |


When applying probabilistic analysis, we often want a bound in the form of <math>\Pr[X\ge t]<\epsilon</math> for some random variable <math>X</math> (think of <math>X</math> as a cost, such as the running time of a randomized algorithm). We call this a '''tail bound''', or a '''tail inequality'''.

In principle, we can bound <math>\Pr[X\ge t]</math> by directly estimating the probability of the event that <math>X\ge t</math>. Besides this ''ad hoc'' approach, we want some general tools that estimate tail probabilities based on certain information about the random variables.

== The Moment Methods ==

=== Markov's inequality ===

The most natural piece of information about a random variable is its expectation, which is the first moment of the random variable. Markov's inequality derives a tail bound for a random variable from its expectation.

{{Theorem
|Theorem (Markov's Inequality)|
:Let <math>X</math> be a random variable assuming only nonnegative values. Then, for all <math>t>0</math>,
::<math>\begin{align}
\Pr[X\ge t]\le \frac{\mathbf{E}[X]}{t}.
\end{align}</math>
}}

{{Proof| Let <math>Y</math> be the indicator such that
:<math>\begin{align}
Y &=
\begin{cases}
1 & \mbox{if }X\ge t,\\
0 & \mbox{otherwise.}
\end{cases}
\end{align}</math>
It holds that <math>Y\le\frac{X}{t}</math>: if <math>X\ge t</math> then <math>Y=1\le\frac{X}{t}</math>, and otherwise <math>Y=0\le\frac{X}{t}</math> because <math>X</math> is nonnegative. Since <math>Y</math> is 0-1 valued, <math>\mathbf{E}[Y]=\Pr[Y=1]=\Pr[X\ge t]</math>. Therefore,
:<math>
\Pr[X\ge t]
=
\mathbf{E}[Y]
\le
\mathbf{E}\left[\frac{X}{t}\right]
=\frac{\mathbf{E}[X]}{t}.
</math>
}}
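As an informal sanity check (not part of the original notes), the sketch below samples a nonnegative random variable, the number of fair-coin tosses up to and including the first HEAD, whose expectation is 2, and compares empirical tail frequencies with the Markov bound <math>\mathbf{E}[X]/t</math>. The helper names are our own; the sample size and seed are arbitrary choices.

```python
import random

def markov_bound(mean: float, t: float) -> float:
    """Markov's inequality: Pr[X >= t] <= E[X] / t for nonnegative X and t > 0."""
    return mean / t

def empirical_tail(samples: list[int], t: float) -> float:
    """Fraction of samples whose value is at least t."""
    return sum(1 for x in samples if x >= t) / len(samples)

def tosses_until_head(rng: random.Random) -> int:
    """Number of fair-coin tosses up to and including the first HEAD (E[X] = 2)."""
    k = 1
    while rng.random() >= 0.5:  # TAIL with probability 1/2: toss again
        k += 1
    return k

rng = random.Random(2010)
samples = [tosses_until_head(rng) for _ in range(20_000)]
for t in (2, 4, 8):
    # The exact tail is 2^-(t-1); Markov only guarantees 2/t.
    print(t, empirical_tail(samples, t), markov_bound(2.0, t))
```

The gap between the two columns illustrates that Markov's inequality, which uses only the first moment, can be far from tight.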

;Example (from Las Vegas to Monte Carlo)

Let <math>A</math> be a Las Vegas randomized algorithm for a decision problem <math>f</math>, whose expected running time is at most <math>T(n)</math> on any input of size <math>n</math>. We transform <math>A</math> into a Monte Carlo randomized algorithm <math>B</math> with bounded one-sided error as follows:

:<math>B(x)</math>:
:*Run <math>A(x)</math> for <math>2T(n)</math> steps, where <math>n</math> is the size of <math>x</math>.
:*If <math>A(x)</math> returned within <math>2T(n)</math> time, then return what <math>A(x)</math> just returned, else return 1.

Since <math>A</math> is Las Vegas, its output is always correct, so <math>B(x)</math> can only err when it returns 1 after a timeout; thus the error is one-sided. The error probability is bounded by the probability that <math>A(x)</math> runs for at least <math>2T(n)</math> time. Since the expected running time of <math>A(x)</math> is at most <math>T(n)</math>, due to Markov's inequality,
:<math>
\Pr[\mbox{the running time of }A(x)\ge2T(n)]\le\frac{\mathbf{E}[\mbox{running time of }A(x)]}{2T(n)}\le\frac{1}{2},
</math>
thus the error probability is bounded by <math>1/2</math>.

Since this <math>B</math> may wrongly accept but never wrongly rejects, this easy reduction implies that '''ZPP'''<math>\subseteq</math>'''co-RP'''. Returning 0 instead of 1 on timeout gives an algorithm that may wrongly reject but never wrongly accepts, which implies that '''ZPP'''<math>\subseteq</math>'''RP'''.
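The reduction can be simulated under explicit assumptions. In this minimal sketch, running time is counted in abstract steps through a `tick()` callback (our own device, not part of the notes), and `toy_las_vegas_is_even` is a hypothetical Las Vegas algorithm: it always answers "is <math>x</math> even?" correctly but spends a random number of steps, with expected running time <math>T=2</math>. The `default` argument chooses the answer returned on timeout: `True` is the variant described above (return 1), `False` is the variant that only errs by rejecting.

```python
import random

class Timeout(Exception):
    """Raised when the step budget of the simulated run is exhausted."""

def run_with_budget(algo, x, budget, default, rng):
    """Run a Las Vegas algorithm for at most `budget` steps; return `default` on timeout."""
    steps = 0

    def tick():
        nonlocal steps
        steps += 1
        if steps > budget:
            raise Timeout

    try:
        return algo(x, rng, tick)
    except Timeout:
        return default

def toy_las_vegas_is_even(x, rng, tick):
    """Hypothetical Las Vegas algorithm: always correct, random running time.

    It flips a fair coin (one step per flip) until HEADS, then answers
    "is x even?", so its expected running time is T = 2 steps.
    """
    while True:
        tick()
        if rng.random() < 0.5:
            return x % 2 == 0

T = 2
rng = random.Random(1)

def B(x, default=True):
    # Budget 2T as in the reduction. Markov's inequality bounds the timeout
    # probability by 1/2; for this toy it is exactly Pr[more than 4 flips] = 1/16.
    return run_with_budget(toy_las_vegas_is_even, x, 2 * T, default, rng)
```

With `default=True`, yes-instances such as `B(4)` are always accepted while no-instances such as `B(3)` are occasionally accepted; with `default=False` the roles swap.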

==== Generalization ====

For any random variable <math>X</math> and any nonnegative real function <math>h</math>, <math>h(X)</math> is a nonnegative random variable. Applying Markov's inequality, we directly have that, for any <math>t>0</math>,
:<math>
\Pr[h(X)\ge t]\le\frac{\mathbf{E}[h(X)]}{t}.
</math>
This trivial application of Markov's inequality gives us a powerful tool for proving tail inequalities. By choosing a function <math>h</math> that extracts more information about the random variable, we can prove sharper tail inequalities.

=== Variance ===

{{Theorem
|Definition (variance)|
:The '''variance''' of a random variable <math>X</math> is defined as
::<math>\begin{align}
\mathbf{Var}[X]=\mathbf{E}\left[(X-\mathbf{E}[X])^2\right]=\mathbf{E}\left[X^2\right]-(\mathbf{E}[X])^2.
\end{align}</math>
:The '''standard deviation''' of a random variable <math>X</math> is
::<math>
\sigma[X]=\sqrt{\mathbf{Var}[X]}.
</math>
}}

We have seen that, due to the linearity of expectations, the expectation of a sum of random variables is the sum of their expectations. It is natural to ask whether the same holds for variances. It turns out that the variance of a sum has an extra term called the covariance.

{{Theorem
|Definition (covariance)|
:The '''covariance''' of two random variables <math>X</math> and <math>Y</math> is
::<math>\begin{align}
\mathbf{Cov}(X,Y)=\mathbf{E}\left[(X-\mathbf{E}[X])(Y-\mathbf{E}[Y])\right].
\end{align}</math>
}}

We have the following theorem for the variance of a sum.

{{Theorem
|Theorem|
:For any two random variables <math>X</math> and <math>Y</math>,
::<math>\begin{align}
\mathbf{Var}[X+Y]=\mathbf{Var}[X]+\mathbf{Var}[Y]+2\mathbf{Cov}(X,Y).
\end{align}</math>
:Generally, for any random variables <math>X_1,X_2,\ldots,X_n</math>,
::<math>\begin{align}
\mathbf{Var}\left[\sum_{i=1}^n X_i\right]=\sum_{i=1}^n\mathbf{Var}[X_i]+\sum_{i\neq j}\mathbf{Cov}(X_i,X_j).
\end{align}</math>
}}

{{Proof| The equation for two variables follows directly from the definitions of variance and covariance: expand <math>(X+Y-\mathbf{E}[X+Y])^2=\left((X-\mathbf{E}[X])+(Y-\mathbf{E}[Y])\right)^2</math> and take expectations. The equation for <math>n</math> variables can be deduced from the equation for two variables by induction on <math>n</math>.
}}
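The covariance term can be checked exactly on a small example. This sketch, with helper names of our own choosing, uses exact rational arithmetic on one fair die roll <math>D</math>, taking <math>X=D</math> and <math>Y=[D\mbox{ is even}]</math>, which are dependent, and confirms <math>\mathbf{Var}[X+Y]=\mathbf{Var}[X]+\mathbf{Var}[Y]+2\mathbf{Cov}(X,Y)</math>.

```python
from fractions import Fraction

def expectation(pmf, f):
    """E[f(w)] for a finite distribution given as {outcome w: probability}."""
    return sum(p * f(w) for w, p in pmf.items())

def variance(pmf, f):
    mean = expectation(pmf, f)
    return expectation(pmf, lambda w: (f(w) - mean) ** 2)

def covariance(pmf, f, g):
    mf, mg = expectation(pmf, f), expectation(pmf, g)
    return expectation(pmf, lambda w: (f(w) - mf) * (g(w) - mg))

# One fair die roll D; X = D and Y = [D is even] are dependent random variables.
die = {d: Fraction(1, 6) for d in range(1, 7)}

def X(d):
    return d

def Y(d):
    return 1 if d % 2 == 0 else 0

lhs = variance(die, lambda d: X(d) + Y(d))
rhs = variance(die, X) + variance(die, Y) + 2 * covariance(die, X, Y)
print(lhs, rhs, covariance(die, X, Y))  # 11/3 11/3 1/4
```

Dropping the covariance term would give <math>35/12+1/4=19/6</math>, which is wrong here because <math>X</math> and <math>Y</math> are positively correlated.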

We will see that when random variables are independent, the variance of their sum equals the sum of their variances. To prove this, we first establish a very useful result about the expectation of a product.

{{Theorem
|Theorem|
:For any two independent random variables <math>X</math> and <math>Y</math>,
::<math>\begin{align}
\mathbf{E}[X\cdot Y]=\mathbf{E}[X]\cdot\mathbf{E}[Y].
\end{align}</math>
}}

{{Proof|
:<math>
\begin{align}
\mathbf{E}[X\cdot Y]
&=
\sum_{x,y}xy\Pr[X=x\wedge Y=y]\\
&=
\sum_{x,y}xy\Pr[X=x]\Pr[Y=y]\\
&=
\sum_{x}x\Pr[X=x]\sum_{y}y\Pr[Y=y]\\
&=
\mathbf{E}[X]\cdot\mathbf{E}[Y].
\end{align}
</math>
}}

With the above theorem, we can show that the covariance of two independent random variables is always zero.

{{Theorem
|Theorem|
:For any two independent random variables <math>X</math> and <math>Y</math>,
::<math>\begin{align}
\mathbf{Cov}(X,Y)=0.
\end{align}</math>
}}

{{Proof|
:<math>\begin{align}
\mathbf{Cov}(X,Y)
&=\mathbf{E}\left[(X-\mathbf{E}[X])(Y-\mathbf{E}[Y])\right]\\
&= \mathbf{E}\left[X-\mathbf{E}[X]\right]\mathbf{E}\left[Y-\mathbf{E}[Y]\right] &\qquad(\mbox{Independence})\\
&=0.
\end{align}</math>
}}

We then have the following theorem for the variance of the sum of pairwise independent random variables.

{{Theorem
|Theorem|
:For '''pairwise''' independent random variables <math>X_1,X_2,\ldots,X_n</math>,
::<math>\begin{align}
\mathbf{Var}\left[\sum_{i=1}^n X_i\right]=\sum_{i=1}^n\mathbf{Var}[X_i].
\end{align}</math>
}}

;Remark
:The theorem holds for '''pairwise''' independent random variables, a much weaker independence requirement than '''mutual''' independence, because each covariance term <math>\mathbf{Cov}(X_i,X_j)</math> involves only two variables at a time. This makes variance-based probability tools work even in settings with limited randomness. We will see what this means precisely in later lectures.
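A standard small example shows the gap between the two notions. In this sketch (names are our own), two independent fair bits <math>X_1,X_2</math> and <math>X_3=X_1\oplus X_2</math> are pairwise independent but not mutually independent, yet the variance of their sum still equals the sum of their variances; everything is computed exactly by enumerating the four equally likely outcomes.

```python
from fractions import Fraction
from itertools import product

# Sample space: two independent fair bits w = (b1, b2), each outcome with probability 1/4.
omega = {w: Fraction(1, 4) for w in product((0, 1), repeat=2)}
X = [lambda w: w[0], lambda w: w[1], lambda w: w[0] ^ w[1]]  # X3 = X1 XOR X2

def E(f):
    return sum(p * f(w) for w, p in omega.items())

def Var(f):
    m = E(f)
    return E(lambda w: (f(w) - m) ** 2)

def prob(event):
    return sum(p for w, p in omega.items() if event(w))

# Every pair (X_i, X_j) is independent ...
pairwise = all(
    prob(lambda w: X[i](w) == a and X[j](w) == b)
    == prob(lambda w: X[i](w) == a) * prob(lambda w: X[j](w) == b)
    for i in range(3) for j in range(i + 1, 3) for a in (0, 1) for b in (0, 1)
)
# ... but the three are not mutually independent: X1 = X2 = X3 = 1 is impossible.
mutually_dependent = prob(lambda w: X[0](w) == X[1](w) == X[2](w) == 1) != Fraction(1, 8)

def total(w):
    return sum(Xi(w) for Xi in X)

print(pairwise, mutually_dependent, Var(total), sum(Var(Xi) for Xi in X))  # True True 3/4 3/4
```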

==== Variance of binomial distribution ====

Consider a Bernoulli trial with parameter <math>p</math>:
:<math>
X=\begin{cases}
1& \mbox{with probability }p\\
0& \mbox{with probability }1-p
\end{cases}
</math>
Since <math>X^2=X</math> for a 0-1 valued <math>X</math>, its variance is
:<math>
\mathbf{Var}[X]=\mathbf{E}[X^2]-(\mathbf{E}[X])^2=\mathbf{E}[X]-(\mathbf{E}[X])^2=p-p^2=p(1-p).
</math>
Let <math>Y</math> be a binomial random variable with parameters <math>n</math> and <math>p</math>, i.e. <math>Y=\sum_{i=1}^nY_i</math>, where the <math>Y_i</math>'s are i.i.d. Bernoulli trials with parameter <math>p</math>. The variance is
:<math>
\begin{align}
\mathbf{Var}[Y]
&=
\mathbf{Var}\left[\sum_{i=1}^nY_i\right]\\
&=
\sum_{i=1}^n\mathbf{Var}\left[Y_i\right] &\qquad (\mbox{Independence})\\
&=
\sum_{i=1}^np(1-p) &\qquad (\mbox{Bernoulli})\\
&=
p(1-p)n.
\end{align}
</math>
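The formula <math>\mathbf{Var}[Y]=p(1-p)n</math> can be confirmed exactly from the binomial probability mass function. This sketch uses exact fractions; the parameters <math>n=10</math>, <math>p=3/10</math> are arbitrary illustrative choices.

```python
from fractions import Fraction
from math import comb

def binomial_pmf(n, p):
    """Exact pmf of Binomial(n, p) as a list indexed by k = 0..n."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]

def variance_from_pmf(pmf):
    mean = sum(k * q for k, q in enumerate(pmf))
    return sum((k - mean) ** 2 * q for k, q in enumerate(pmf))

n, p = 10, Fraction(3, 10)
print(variance_from_pmf(binomial_pmf(n, p)), p * (1 - p) * n)  # 21/10 21/10
```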

=== Chebyshev's inequality ===

With the information of both the expectation and the variance of a random variable, one can derive a tail bound on the deviation from the mean, known as Chebyshev's inequality, which is often much sharper than Markov's inequality.

{{Theorem
|Theorem (Chebyshev's Inequality)|
:For any random variable <math>X</math> and any <math>t>0</math>,
::<math>\begin{align}
\Pr\left[|X-\mathbf{E}[X]| \ge t\right] \le \frac{\mathbf{Var}[X]}{t^2}.
\end{align}</math>
}}

{{Proof| Observe that
:<math>\Pr[|X-\mathbf{E}[X]| \ge t] = \Pr[(X-\mathbf{E}[X])^2 \ge t^2].</math>
Since <math>(X-\mathbf{E}[X])^2</math> is a nonnegative random variable, we can apply Markov's inequality, such that
:<math>
\Pr[(X-\mathbf{E}[X])^2 \ge t^2] \le
\frac{\mathbf{E}[(X-\mathbf{E}[X])^2]}{t^2}
=\frac{\mathbf{Var}[X]}{t^2}.
</math>
}}
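To see how Chebyshev's inequality compares with the truth, this sketch computes the exact two-sided tail of a binomial random variable with <math>n=100</math>, <math>p=1/2</math> (so <math>\mathbf{Var}[X]=25</math>) at <math>t=10</math> and compares it with <math>\mathbf{Var}[X]/t^2=1/4</math>. The specific parameters are illustrative assumptions.

```python
from fractions import Fraction
from math import comb

def binomial_two_sided_tail(n, t):
    """Exact Pr[|X - n/2| >= t] for X ~ Binomial(n, 1/2)."""
    hits = sum(comb(n, k) for k in range(n + 1) if abs(2 * k - n) >= 2 * t)
    return Fraction(hits, 2**n)

def chebyshev_bound(var, t):
    return Fraction(var) / t**2

n, t = 100, 10
var = Fraction(n, 4)  # p(1-p)n with p = 1/2
exact = binomial_two_sided_tail(n, t)
print(float(exact), float(chebyshev_bound(var, t)))
```

The bound holds but is several times larger than the exact probability, which motivates the higher-moment and Chernoff techniques below.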

=== Higher moments ===

The above two inequalities can be put into a general framework regarding the [http://en.wikipedia.org/wiki/Moment_(mathematics) '''moments'''] of random variables.

{{Theorem
|Definition (moments)|
:The <math>k</math>th moment of a random variable <math>X</math> is <math>\mathbf{E}[X^k]</math>.
}}

The more we know about the moments, the more information we have about the distribution; hence, in principle, we can get tighter tail bounds. This technique is called the <math>k</math>th moment method.

More generally, the <math>k</math>th moment about <math>c</math> is <math>\mathbf{E}[(X-c)^k]</math>. The <math>k</math>th [http://en.wikipedia.org/wiki/Central_moment central moment] of <math>X</math>, denoted <math>\mu_k[X]</math>, is defined as <math>\mu_k[X]=\mathbf{E}[(X-\mathbf{E}[X])^k]</math>. So the variance is just the second central moment <math>\mu_2[X]</math>.

The <math>k</math>th moment method is stated by the following theorem.

{{Theorem
|Theorem (the <math>k</math>th moment method)|
:For even <math>k>0</math>, and any <math>t>0</math>,
::<math>\begin{align}
\Pr\left[|X-\mathbf{E}[X]| \ge t\right] \le \frac{\mu_k[X]}{t^k}.
\end{align}</math>
}}

{{Proof| Since <math>k</math> is even, <math>(X-\mathbf{E}[X])^k</math> is nonnegative, and <math>|X-\mathbf{E}[X]|\ge t</math> if and only if <math>(X-\mathbf{E}[X])^k\ge t^k</math>. Apply Markov's inequality to <math>(X-\mathbf{E}[X])^k</math>.
}}
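A small numerical illustration, under the assumption <math>X\sim\mathrm{Binomial}(100,1/2)</math> and <math>t=20</math>: the fourth central moment is <math>\mu_4[X]=3725/2</math>, and the <math>k=4</math> bound <math>\mu_4[X]/t^4</math> is noticeably smaller than the Chebyshev (<math>k=2</math>) bound, while both stay above the exact tail. All quantities are computed exactly by enumeration.

```python
from fractions import Fraction
from math import comb

def central_moment(n, k):
    """Exact k-th central moment of X ~ Binomial(n, 1/2)."""
    mean = Fraction(n, 2)
    return sum(comb(n, j) * (j - mean) ** k for j in range(n + 1)) / Fraction(2**n)

def tail(n, t):
    """Exact Pr[|X - n/2| >= t] for X ~ Binomial(n, 1/2)."""
    return Fraction(sum(comb(n, j) for j in range(n + 1) if abs(2 * j - n) >= 2 * t), 2**n)

n, t = 100, 20
bounds = {k: central_moment(n, k) / t**k for k in (2, 4)}  # the k-th moment method for k = 2, 4
print(float(tail(n, t)), {k: float(b) for k, b in bounds.items()})
```

For smaller <math>t</math> the ordering can flip, since <math>\mu_4[X]/t^4</math> decays faster in <math>t</math> but starts from a larger constant.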

What about odd <math>k</math>? For odd <math>k</math>, <math>(X-\mathbf{E}[X])^k</math> can be negative, so we would have to apply Markov's inequality to <math>|X-\mathbf{E}[X]|^k</math> instead, but estimating expectations of absolute values can be hard.

== Select the Median ==

The [http://en.wikipedia.org/wiki/Selection_algorithm selection problem] is the problem of finding the <math>k</math>th smallest element in a set <math>S</math>. A typical case of the selection problem is finding the '''median'''.

{{Theorem
|Definition|
:The median of a set <math>S</math> of <math>n</math> elements is the <math>(\lceil n/2\rceil)</math>th element in the sorted order of <math>S</math>.
}}

The median can be found in <math>O(n\log n)</math> time by sorting. There is a linear-time deterministic algorithm, the [http://en.wikipedia.org/wiki/Selection_algorithm#Linear_general_selection_algorithm_-_.22Median_of_Medians_algorithm.22 "median of medians" algorithm], which is quite sophisticated. Here we introduce a much simpler randomized algorithm which also runs in linear time.

=== Randomized median selection algorithm ===

We introduce a randomized median selection algorithm called '''LazySelect''', which is a variant of a randomized algorithm due to [http://en.wikipedia.org/wiki/Robert_Floyd Floyd] and [http://en.wikipedia.org/wiki/Ron_Rivest Rivest].

The idea of this algorithm is random sampling. For a set <math>S</math>, let <math>m\in S</math> denote the median. We observe that if we can find two elements <math>d,u\in S</math> satisfying the following properties:
# The median is between <math>d</math> and <math>u</math> in the sorted order, i.e. <math>d\le m\le u</math>;

We choose the size of <math>R</math> as <math>n^{3/4}</math>, and choose <math>d</math> and <math>u</math> within a range of <math>\sqrt{n}</math> positions around the median of <math>R</math>.

{{Theorem
|LazySelect|
'''Input:''' a set <math>S</math> of <math>n</math> elements over a totally ordered domain.
# Pick a multi-set <math>R</math> of <math>\left\lceil n^{3/4}\right\rceil</math> elements in <math>S</math>, chosen independently and uniformly at random with replacement, and sort <math>R</math>.
# Let <math>d</math> be the <math>\left\lfloor\frac{1}{2}n^{3/4}-\sqrt{n}\right\rfloor</math>-th smallest element in <math>R</math>, and let <math>u</math> be the <math>\left\lceil\frac{1}{2}n^{3/4}+\sqrt{n}\right\rceil</math>-th smallest element in <math>R</math>.
# Construct <math>C=\{x\in S\mid d\le x\le u\}</math> and compute the ranks <math>r_d=|\{x\in S\mid x<d\}|</math> and <math>r_u=|\{x\in S\mid x<u\}|</math>.
# If <math>r_d>\frac{n}{2}</math> or <math>r_u<\frac{n}{2}</math> or <math>|C|>4n^{3/4}</math> then return FAIL.
# Sort <math>C</math> and return the <math>\left(\left\lfloor\frac{n}{2}\right\rfloor-r_d+1\right)</math>th element in the sorted order of <math>C</math>.
}}
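One run of LazySelect can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not a reference implementation: the elements of <math>S</math> are assumed distinct; the name `lazy_select_median` and the use of `None` for FAIL are our own choices; index clamping for small <math>n</math> is an added assumption. To match the definition of the median above, the sketch targets rank <math>k=\lceil n/2\rceil</math> directly, checking <math>d\le m\le u</math> as <math>r_d<k\le r_u+1</math> and returning the <math>(k-r_d)</math>th element of sorted <math>C</math>; for odd <math>n</math> this coincides with Steps 4 and 5 as written.

```python
import math
import random
from typing import Optional, Sequence

def lazy_select_median(S: Sequence[int], rng: random.Random) -> Optional[int]:
    """One run of LazySelect; returns the median of S, or None to signal FAIL.

    Assumes the elements of S are distinct, so an element x has rank
    |{y in S : y < x}| + 1.
    """
    n = len(S)
    k = math.ceil(n / 2)  # rank of the median
    size_R = math.ceil(n ** 0.75)

    # Step 1: sample |R| elements independently, uniformly, with replacement; sort.
    R = sorted(rng.choice(S) for _ in range(size_R))

    # Step 2: 1-indexed positions in R; clamping to [1, |R|] only matters for small n.
    i_d = math.floor(0.5 * n ** 0.75 - math.sqrt(n))
    i_u = math.ceil(0.5 * n ** 0.75 + math.sqrt(n))
    d = R[min(max(i_d, 1), size_R) - 1]
    u = R[min(max(i_u, 1), size_R) - 1]

    # Step 3: linear scans build C and the ranks r_d, r_u.
    C = [x for x in S if d <= x <= u]
    r_d = sum(1 for x in S if x < d)
    r_u = sum(1 for x in S if x < u)

    # Step 4: FAIL unless d <= m <= u (i.e. r_d < k <= r_u + 1) and C is small.
    if r_d >= k or r_u + 1 < k or len(C) > 4 * n ** 0.75:
        return None

    # Step 5: the j-th smallest element of C has rank r_d + j in S.
    return sorted(C)[k - r_d - 1]
```

Only <math>R</math> and <math>C</math> are sorted, and both have size <math>O(n^{3/4})</math>, so the work is dominated by the linear scans over <math>S</math>. On FAIL, a caller would simply rerun.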

"Sample with replacement" means that after sampling an element, we put the element back into the set. In this way, the sampled elements are independent and identically distributed (''i.i.d.''). In the above algorithm, sampling with replacement is used for the convenience of the analysis.

We now bound the probabilities of these events one by one.

{{Theorem
|Lemma 1|
:<math>\Pr[\mathcal{E}_1]\le \frac{1}{4}n^{-1/4}</math>.
}}

{{Proof| Let <math>X_i</math> be the <math>i</math>th sampled element in Step 1 of the algorithm. Let <math>Y_i</math> be an indicator random variable such that
:<math>
Y_i=
\end{align}
</math>
}}

By a similar analysis, we can obtain the following bound for the event <math>\mathcal{E}_2</math>.

{{Theorem
|Lemma 2|
:<math>\Pr[\mathcal{E}_2]\le \frac{1}{4}n^{-1/4}</math>.
}}

We now bound the probability of the event <math>\mathcal{E}_3</math>.

{{Theorem
|Lemma 3|
:<math>\Pr[\mathcal{E}_3]\le \frac{1}{2}n^{-1/4}</math>.
}}

{{Proof| The event <math>\mathcal{E}_3</math> is the event that <math>|C|>4 n^{3/4}</math>, which, by the pigeonhole principle, implies that at least one of the following must be true:
* <math>\mathcal{E}_3'</math>: at least <math>2n^{3/4}</math> elements of <math>C</math> are greater than <math>m</math>;
* <math>\mathcal{E}_3''</math>: at least <math>2n^{3/4}</math> elements of <math>C</math> are smaller than <math>m</math>.
:<math>\Pr[\mathcal{E}_3]\le \Pr[\mathcal{E}_3']+\Pr[\mathcal{E}_3'']\le\frac{1}{2}n^{-1/4}.
</math>
}}

== Chernoff Bound == | == Chernoff Bound == | ||

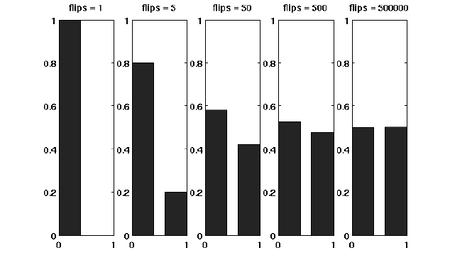

Suppose that we have a fair coin. If we toss it once, then the outcome is completely unpredictable. But if we toss it, say for 1000 times, then the number of HEADs is very likely to be around 500. This striking phenomenon is called the '''concentration'''. The Chernoff bound captures the concentration of independent trials. | Suppose that we have a fair coin. If we toss it once, then the outcome is completely unpredictable. But if we toss it, say for 1000 times, then the number of HEADs is very likely to be around 500. This striking phenomenon, illustrated in the right figure, is called the '''concentration'''. The Chernoff bound captures the concentration of independent trials. | ||

[[File:Coinflip.png|border|450px|right]] | |||

The Chernoff bound is also a tail bound for the sum of independent random variables which may give us ''exponentially'' sharp bounds. | The Chernoff bound is also a tail bound for the sum of independent random variables which may give us ''exponentially'' sharp bounds. | ||
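The coin-tossing intuition can be made quantitative with an exact computation. This sketch (helper name our own) computes the probability that 1000 fair tosses produce between 450 and 550 HEADs, i.e. within 50 of the mean, and contrasts it with a single toss.

```python
from fractions import Fraction
from math import comb

def prob_heads_in_range(n, lo, hi):
    """Exact Pr[lo <= #HEADs <= hi] for n tosses of a fair coin."""
    return Fraction(sum(comb(n, k) for k in range(lo, hi + 1)), 2**n)

one_toss = prob_heads_in_range(1, 1, 1)          # a single toss: HEADs with probability 1/2
thousand = prob_heads_in_range(1000, 450, 550)   # 1000 tosses: within 50 of the mean 500
print(one_toss, float(thousand))
```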

The more we know about the moments of a random variable <math>X</math>, the more information we have about <math>X</math>. The so-called '''moment generating function''' "packs" all the information about the moments of <math>X</math> into one function.

{{Theorem
|Definition|
:The moment generating function of a random variable <math>X</math> is defined as <math>\mathbf{E}\left[\mathrm{e}^{\lambda X}\right]</math> where <math>\lambda</math> is the parameter of the function.
}}
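For a single Bernoulli trial with parameter <math>p</math>, the moment generating function is <math>\mathbf{E}[e^{\lambda X}]=1-p+pe^{\lambda}</math>, and since <math>X^k=X</math> for <math>k\ge1</math>, every moment <math>\mathbf{E}[X^k]</math> equals <math>p</math>. This sketch checks numerically that summing the Taylor series <math>\sum_k \lambda^k\mathbf{E}[X^k]/k!</math> reproduces the closed form; the values <math>p=0.3</math>, <math>\lambda=0.7</math> and the truncation at 40 terms are arbitrary choices.

```python
import math

def mgf_bernoulli(p, lam):
    """E[e^{lam X}] for a Bernoulli(p) trial: (1 - p) * e^0 + p * e^lam."""
    return (1 - p) + p * math.exp(lam)

def mgf_from_moments(moment, lam, terms=40):
    """Truncated Taylor series: sum over k < terms of lam^k * E[X^k] / k!."""
    return sum(lam**k * moment(k) / math.factorial(k) for k in range(terms))

p, lam = 0.3, 0.7

def bernoulli_moment(k):
    # E[X^0] = 1; for k >= 1, X^k = X for a 0-1 valued X, so E[X^k] = p.
    return 1.0 if k == 0 else p

print(mgf_bernoulli(p, lam), mgf_from_moments(bernoulli_moment, lam))
```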

By Taylor's expansion and the linearity of expectations,

=== The Chernoff bound ===

The Chernoff bounds are exponentially sharp tail inequalities for the sum of independent trials. The bounds are obtained by applying Markov's inequality to the moment generating function of the sum of independent trials, with some appropriate choice of the parameter <math>\lambda</math>.

{{Theorem
|Chernoff bound (the upper tail)|
:Let <math>X=\sum_{i=1}^n X_i</math>, where <math>X_1, X_2, \ldots, X_n</math> are independent Poisson trials (independent 0-1 random variables with <math>\Pr[X_i=1]=p_i</math>). Let <math>\mu=\mathbf{E}[X]</math>.
:Then for any <math>\delta>0</math>,
::<math>\Pr[X\ge (1+\delta)\mu]\le\left(\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\right)^{\mu}.</math>
}}

{{Proof| For any <math>\lambda>0</math>, <math>X\ge (1+\delta)\mu</math> is equivalent to <math>e^{\lambda X}\ge e^{\lambda (1+\delta)\mu}</math>, thus
:<math>\begin{align}
\Pr[X\ge (1+\delta)\mu]
\mathbf{E}\left[e^{\lambda \sum_{i=1}^n X_i}\right]\\
&=
\mathbf{E}\left[\prod_{i=1}^n e^{\lambda X_i}\right]\\
&=
\prod_{i=1}^n \mathbf{E}\left[e^{\lambda X_i}\right]. & (\mbox{for independent random variables})
\end{align}</math>
For any <math>\delta>0</math>, we can let <math>\lambda=\ln(1+\delta)>0</math> to get
:<math>\Pr[X\ge (1+\delta)\mu]\le\left(\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\right)^{\mu}.</math>
}}

The idea of the proof is actually quite clear: we apply Markov's inequality to <math>e^{\lambda X}</math>, and for the rest we just estimate the moment generating function <math>\mathbf{E}[e^{\lambda X}]</math>. To make the bound as tight as possible, we minimize <math>\frac{e^{(e^\lambda-1)}}{e^{\lambda (1+\delta)}}</math> by setting <math>\lambda=\ln(1+\delta)</math>. This choice can be justified by taking derivatives: the derivative of the exponent <math>(e^\lambda-1)-\lambda(1+\delta)</math> is <math>e^\lambda-(1+\delta)</math>, which vanishes exactly at <math>\lambda=\ln(1+\delta)</math>.
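The following sketch compares the upper-tail Chernoff bound with the exact tail of <math>\mathrm{Binomial}(100,1/2)</math> at <math>\delta=0.25</math> (so <math>\mu=50</math> and the threshold is <math>62.5</math>), and confirms by a grid search over <math>\lambda>0</math> that the exponent <math>(e^\lambda-1)-\lambda(1+\delta)</math> from the proof is minimized near <math>\ln(1+\delta)</math>. The parameters and grid resolution are illustrative assumptions; the bound is evaluated in log space for numerical stability.

```python
import math

def chernoff_upper(mu, delta):
    """(e^delta / (1+delta)^(1+delta))^mu, computed in log space."""
    return math.exp(mu * (delta - (1 + delta) * math.log(1 + delta)))

def binomial_upper_tail(n, p, threshold):
    """Exact Pr[X >= threshold] for X ~ Binomial(n, p)."""
    k0 = math.ceil(threshold)
    return sum(math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k0, n + 1))

def exponent(lam, delta):
    """log of e^{(e^lam - 1)} / e^{lam (1 + delta)}, the quantity minimized in the proof."""
    return (math.exp(lam) - 1) - lam * (1 + delta)

n, p, delta = 100, 0.5, 0.25
mu = n * p
exact = binomial_upper_tail(n, p, (1 + delta) * mu)  # Pr[X >= 62.5] = Pr[X >= 63]
bound = chernoff_upper(mu, delta)

# Grid search over lambda in (0, 2) recovers the minimizer lambda = ln(1 + delta).
grid = [i / 10_000 for i in range(1, 20_000)]
best_lam = min(grid, key=lambda lam: exponent(lam, delta))
print(exact, bound, best_lam, math.log(1 + delta))
```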

----

We then proceed to the lower tail, the probability that the random variable deviates below the mean value:

{{Theorem
|Chernoff bound (the lower tail)|
:Let <math>X=\sum_{i=1}^n X_i</math>, where <math>X_1, X_2, \ldots, X_n</math> are independent Poisson trials. Let <math>\mu=\mathbf{E}[X]</math>.
:Then for any <math>0<\delta<1</math>,
::<math>\Pr[X\le (1-\delta)\mu]\le\left(\frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}}\right)^{\mu}.</math>
}}

{{Proof| For any <math>\lambda<0</math>, by the same analysis as in the upper tail version,
:<math>\begin{align}
\Pr[X\le (1-\delta)\mu]
For any <math>0<\delta<1</math>, we can let <math>\lambda=\ln(1-\delta)<0</math> to get
:<math>\Pr[X\le (1-\delta)\mu]\le\left(\frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}}\right)^{\mu}.</math>
}}
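Analogously, this sketch checks the lower-tail bound against the exact value <math>\Pr[X\le 37.5]=\Pr[X\le 37]</math> for <math>X\sim\mathrm{Binomial}(100,1/2)</math> and <math>\delta=0.25</math>; the parameters are illustrative assumptions.

```python
import math

def chernoff_lower(mu, delta):
    """(e^{-delta} / (1-delta)^(1-delta))^mu for 0 < delta < 1, in log space."""
    return math.exp(mu * (-delta - (1 - delta) * math.log(1 - delta)))

def binomial_lower_tail(n, p, threshold):
    """Exact Pr[X <= threshold] for X ~ Binomial(n, p)."""
    k1 = math.floor(threshold)
    return sum(math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(0, k1 + 1))

n, p, delta = 100, 0.5, 0.25
mu = n * p
exact = binomial_lower_tail(n, p, (1 - delta) * mu)  # Pr[X <= 37.5] = Pr[X <= 37]
bound = chernoff_lower(mu, delta)
print(exact, bound)
```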

----

Some useful special forms of the bounds can be derived directly from the above general forms. These forms show more clearly why we say that the bounds are exponentially sharp.

{{Theorem
|Useful forms of the Chernoff bound|
:Let <math>X=\sum_{i=1}^n X_i</math>, where <math>X_1, X_2, \ldots, X_n</math> are independent Poisson trials. Let <math>\mu=\mathbf{E}[X]</math>. Then
:1. for <math>0<\delta\le 1</math>,
:2. for <math>t\ge 2e\mu</math>,
::<math>\Pr[X\ge t]\le 2^{-t}.</math>
}}

{{Proof| To obtain the bounds in (1), we need to show that for <math>0<\delta< 1</math>, <math>\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\le e^{-\delta^2/3}</math> and <math>\frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}}\le e^{-\delta^2/2}</math>. We can verify both inequalities by standard analysis techniques.

To obtain the bound in (2), let <math>t=(1+\delta)\mu</math>. Then <math>\delta=t/\mu-1\ge 2e-1</math>, i.e. <math>1+\delta\ge 2e</math>. Hence,
\left(\frac{e}{1+\delta}\right)^{(1+\delta)\mu}\\
&\le
\left(\frac{e}{2e}\right)^t\\
&\le
2^{-t}
\end{align}</math>
}}
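The two inequalities needed for form (1) can be spot-checked numerically. This sketch evaluates, on a grid of <math>\delta\in(0,1)</math>, the logarithms <math>\delta-(1+\delta)\ln(1+\delta)+\delta^2/3</math> and <math>-\delta-(1-\delta)\ln(1-\delta)+\delta^2/2</math>, which must be nonpositive. A grid check is only evidence, not a proof; the grid resolution is an arbitrary choice.

```python
import math

def upper_log_ratio(delta):
    """log(e^delta / (1+delta)^(1+delta)) + delta^2/3; should be <= 0 on (0, 1]."""
    return delta - (1 + delta) * math.log(1 + delta) + delta**2 / 3

def lower_log_ratio(delta):
    """log(e^{-delta} / (1-delta)^(1-delta)) + delta^2/2; should be <= 0 on (0, 1)."""
    return -delta - (1 - delta) * math.log(1 - delta) + delta**2 / 2

grid = [i / 1000 for i in range(1, 1000)]  # delta in (0, 1)
worst_upper = max(upper_log_ratio(d) for d in grid)
worst_lower = max(lower_log_ratio(d) for d in grid)
print(worst_upper, worst_lower)
```

Near <math>\delta=0</math> both expressions behave like <math>-\delta^2/6</math> and <math>-\delta^3/6</math> respectively, so the margins are small there, which is where a careful analytic proof does its work.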

=== Balls into bins, revisited ===

Throwing <math>m</math> balls uniformly and independently into <math>n</math> bins, what is the maximum load of all bins with high probability? In the last class, we analyzed this problem using a counting argument.

Now we give a more "advanced" analysis using Chernoff bounds.

For any <math>i\in[n]</math> and <math>j\in[m]</math>, let <math>X_{ij}</math> be the indicator variable for the event that ball <math>j</math> is thrown to bin <math>i</math>. Obviously

Then the expected load of bin <math>i</math> is
:<math>(*)\qquad \mu=\mathbf{E}[Y_i]=\mathbf{E}\left[\sum_{j\in[m]}X_{ij}\right]=\sum_{j\in[m]}\mathbf{E}[X_{ij}]=m/n. </math>
For the case <math>m=n</math>, it holds that <math>\mu=1</math>.

Note that <math>Y_i</math> is a sum of <math>m</math> mutually independent indicator variables. Applying the Chernoff bound, for any particular bin <math>i\in[n]</math>,
:<math>
\Pr[Y_i>(1+\delta)\mu] \le \left(\frac{e^{\delta}}{(1+\delta)^{1+\delta}}\right)^\mu.
</math>

==== When <math>m=n</math> ====

When <math>m=n</math>, <math>\mu=1</math>. Write <math>c=1+\delta</math>. The above bound can be written as
:<math>
\Pr[Y_i>c] \le \frac{e^{c-1}}{c^c}.
</math>
Let <math>c=\frac{e\ln n}{\ln\ln n}</math>. We evaluate <math>\frac{e^{c-1}}{c^c}</math> by taking the logarithm of its reciprocal. For sufficiently large <math>n</math>, it holds that <math>\ln\ln\ln n\le\left(1-\frac{2}{e}\right)\ln\ln n</math>, so
:<math>
\begin{align}
\ln\left(\frac{c^c}{e^{c-1}}\right)
&=
c\ln c-c+1\\
&=
c(\ln c-1)+1\\
&=
\frac{e\ln n}{\ln\ln n}\left(\ln\ln n-\ln\ln\ln n\right)+1\\
&\ge
\frac{e\ln n}{\ln\ln n}\cdot\frac{2}{e}\ln\ln n+1\\
&\ge
2\ln n.
\end{align}
</math>
Thus,
:<math>
\Pr\left[Y_i>\frac{e\ln n}{\ln\ln n}\right] \le \frac{1}{n^2}.
</math>
Applying the union bound, the probability that there exists a bin with load <math>>\frac{e\ln n}{\ln\ln n}</math> is at most
:<math>n\cdot \Pr\left[Y_1>\frac{e\ln n}{\ln\ln n}\right] \le \frac{1}{n}</math>.
Therefore, for <math>m=n</math>, with high probability, the maximum load is <math>O\left(\frac{\ln n}{\ln\ln n}\right)</math>.
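A quick simulation illustrates the result; it is not a check of the constants, since the inequality <math>\ln\ln\ln n\le(1-2/e)\ln\ln n</math> used above only holds for very large <math>n</math>. The sketch throws <math>n=10^4</math> balls into <math>n</math> bins and compares the observed maximum load with <math>e\ln n/\ln\ln n\approx 11.3</math>; the seed and <math>n</math> are arbitrary choices.

```python
import math
import random

def max_load(m, n, rng):
    """Throw m balls uniformly and independently into n bins; return the maximum load."""
    loads = [0] * n
    for _ in range(m):
        loads[rng.randrange(n)] += 1
    return max(loads)

n = 10_000
threshold = math.e * math.log(n) / math.log(math.log(n))  # e ln n / ln ln n
rng = random.Random(2010)
observed = max_load(n, n, rng)
print(observed, threshold)
```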

==== For larger <math>m</math> ====

When <math>m\ge n\ln n</math>, according to <math>(*)</math>, <math>\mu=\frac{m}{n}\ge \ln n</math>. We can apply a simpler form of the Chernoff bounds (form 2 with <math>t=2e\mu</math>):
:<math>
\Pr[Y_i\ge 2e\mu]\le 2^{-2e\mu}\le 2^{-2e\ln n}=n^{-2e\ln 2}<\frac{1}{n^2}.
</math>
By the union bound, the probability that there exists a bin with load <math>\ge 2e\frac{m}{n}</math> is at most
:<math>n\cdot \Pr\left[Y_1\ge 2e\frac{m}{n}\right] = n\cdot \Pr\left[Y_1\ge 2e\mu\right]\le \frac{1}{n}</math>.
Therefore, for <math>m\ge n\ln n</math>, with high probability, the maximum load is <math>O\left(\frac{m}{n}\right)</math>.
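The same kind of simulation for the heavy-load regime: with <math>n=1000</math> bins and <math>m=\lceil n\ln n\rceil</math> balls, the maximum load is at least the average <math>\mu=m/n</math> and, with high probability, below <math>2e\mu</math>. The parameters and seed are illustrative assumptions.

```python
import math
import random

def max_load(m, n, rng):
    """Throw m balls uniformly and independently into n bins; return the maximum load."""
    loads = [0] * n
    for _ in range(m):
        loads[rng.randrange(n)] += 1
    return max(loads)

n = 1_000
m = math.ceil(n * math.log(n))  # m >= n ln n, so mu = m/n >= ln n
mu = m / n
rng = random.Random(7)
observed = max_load(m, n, rng)
print(observed, mu, 2 * math.e * mu)
```

In this regime the maximum load stays within a constant factor of the average load, in contrast with the <math>m=n</math> case where it exceeds the average by a <math>\Theta(\ln n/\ln\ln n)</math> factor.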

Latest revision as of 07:49, 3 August 2011

When applying probabilistic analysis, we often want a bound in form of [math]\displaystyle{ \Pr[X\ge t]\lt \epsilon }[/math] for some random variable [math]\displaystyle{ X }[/math] (think that [math]\displaystyle{ X }[/math] is a cost such as running time of a randomized algorithm). We call this a tail bound, or a tail inequality.

In principle, we can bound [math]\displaystyle{ \Pr[X\ge t] }[/math] by directly estimating the probability of the event that [math]\displaystyle{ X\ge t }[/math]. Besides this ad hoc way, we want to have some general tools which estimate tail probabilities based on certain information regarding the random variables.

The Moment Methods

Markov's inequality

The most natural piece of information about a random variable is its expectation, which is the first moment of the random variable. Markov's inequality derives a tail bound for a random variable from its expectation alone.

Theorem (Markov's Inequality) - Let [math]\displaystyle{ X }[/math] be a random variable assuming only nonnegative values. Then, for all [math]\displaystyle{ t\gt 0 }[/math],

- [math]\displaystyle{ \begin{align} \Pr[X\ge t]\le \frac{\mathbf{E}[X]}{t}. \end{align} }[/math]


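Proof. Let [math]\displaystyle{ Y }[/math] be the indicator such that

- [math]\displaystyle{ \begin{align} Y &= \begin{cases} 1 & \mbox{if }X\ge t,\\ 0 & \mbox{otherwise.} \end{cases} \end{align} }[/math]

It holds that [math]\displaystyle{ Y\le\frac{X}{t} }[/math]: if [math]\displaystyle{ X\ge t }[/math] then [math]\displaystyle{ Y=1\le\frac{X}{t} }[/math], and otherwise [math]\displaystyle{ Y=0\le\frac{X}{t} }[/math] because [math]\displaystyle{ X }[/math] is nonnegative. Since [math]\displaystyle{ Y }[/math] is 0-1 valued, [math]\displaystyle{ \mathbf{E}[Y]=\Pr[Y=1]=\Pr[X\ge t] }[/math]. Therefore,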

- [math]\displaystyle{ \Pr[X\ge t] = \mathbf{E}[Y] \le \mathbf{E}\left[\frac{X}{t}\right] =\frac{\mathbf{E}[X]}{t}. }[/math]

- [math]\displaystyle{ \square }[/math]
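As a quick sanity check of the theorem, the following Python sketch computes the exact tail [math]\displaystyle{ \Pr[X\ge t] }[/math] and the Markov bound [math]\displaystyle{ \mathbf{E}[X]/t }[/math] for a small nonnegative distribution. The fair six-sided die and the helper name markov_check are illustrative choices, not part of the original notes.

```python
from fractions import Fraction


def markov_check(pmf, t):
    """Return (exact Pr[X >= t], Markov bound E[X] / t) for a finite pmf.

    pmf maps nonnegative values to exact probabilities (Fractions summing to 1).
    """
    mean = sum(x * p for x, p in pmf.items())
    tail = sum(p for x, p in pmf.items() if x >= t)
    return tail, mean / t


# A fair six-sided die: E[X] = 7/2.
die = {x: Fraction(1, 6) for x in range(1, 7)}
for t in range(1, 7):
    tail, bound = markov_check(die, t)
    assert tail <= bound  # Markov's inequality
    print(t, tail, bound)
```

The bound is loose here (7/12 versus the true 1/6 at t = 6) because it uses only the expectation.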

- Example (from Las Vegas to Monte Carlo)

Let [math]\displaystyle{ A }[/math] be a Las Vegas randomized algorithm for a decision problem [math]\displaystyle{ f }[/math], whose expected running time is at most [math]\displaystyle{ T(n) }[/math] on any input of size [math]\displaystyle{ n }[/math]. We transform [math]\displaystyle{ A }[/math] into a Monte Carlo randomized algorithm [math]\displaystyle{ B }[/math] with bounded one-sided error as follows:

- [math]\displaystyle{ B(x) }[/math]:

- Run [math]\displaystyle{ A(x) }[/math] for [math]\displaystyle{ 2T(n) }[/math] steps, where [math]\displaystyle{ n }[/math] is the size of [math]\displaystyle{ x }[/math].

- If [math]\displaystyle{ A(x) }[/math] returns within [math]\displaystyle{ 2T(n) }[/math] steps, then return its output; otherwise return 1.

Since [math]\displaystyle{ A }[/math] is Las Vegas, its output is always correct, so [math]\displaystyle{ B(x) }[/math] can err only when it returns 1 after a timeout; thus the error is one-sided. The error probability is bounded by the probability that [math]\displaystyle{ A(x) }[/math] runs for at least [math]\displaystyle{ 2T(n) }[/math] steps. Since the expected running time of [math]\displaystyle{ A(x) }[/math] is at most [math]\displaystyle{ T(n) }[/math], Markov's inequality gives

- [math]\displaystyle{ \Pr[\mbox{the running time of }A(x)\ge2T(n)]\le\frac{\mathbf{E}[\mbox{running time of }A(x)]}{2T(n)}\le\frac{1}{2}, }[/math]

so the error probability is at most [math]\displaystyle{ \frac{1}{2} }[/math].

This easy reduction implies that ZPP[math]\displaystyle{ \subseteq }[/math]co-RP, because [math]\displaystyle{ B }[/math] never errs on inputs with [math]\displaystyle{ f(x)=1 }[/math]. Returning 0 instead of 1 on a timeout gives ZPP[math]\displaystyle{ \subseteq }[/math]RP in the same way.
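The transformation can be sketched in Python. To make "running time" concrete, the sketch assumes the Las Vegas algorithm is written as a generator that yields once per step; toy_las_vegas is a hypothetical example that decides whether a bit list contains a 1 and halts at each step with probability 1/2, so its expected running time is T = 2 steps.

```python
import random


def toy_las_vegas(x, rng):
    """Hypothetical Las Vegas algorithm for f(x) = 1 iff the bit list x contains a 1.

    Each call to next() is one step; each step halts with probability 1/2, so the
    running time is geometric with expectation T = 2. The answer is always correct.
    """
    while rng.random() >= 0.5:
        yield  # not done yet: one more unit of work
    return int(any(x))


def monte_carlo(las_vegas, x, T, rng):
    """B(x): run A(x) for at most 2T steps; on timeout, return 1."""
    run = las_vegas(x, rng)
    for _ in range(2 * T):
        try:
            next(run)
        except StopIteration as halted:
            return halted.value  # A halted in time, so its answer is correct
    return 1  # timeout: wrong only if f(x) = 0, so the error is one-sided


rng = random.Random(0)
trials = 10_000
errors = sum(monte_carlo(toy_las_vegas, [0, 0, 0], 2, rng) != 0 for _ in range(trials))
print(errors / trials)  # Markov guarantees at most 1/2; for this toy A the true rate is 1/16
```

The empirical error rate is far below 1/2 because Markov's inequality ignores everything about the running-time distribution except its mean.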

Generalization

For any random variable [math]\displaystyle{ X }[/math] and any nonnegative real function [math]\displaystyle{ h }[/math], [math]\displaystyle{ h(X) }[/math] is a nonnegative random variable. Applying Markov's inequality, we directly have that

- [math]\displaystyle{ \Pr[h(X)\ge t]\le\frac{\mathbf{E}[h(X)]}{t}. }[/math]

This trivial application of Markov's inequality gives us a powerful tool for proving tail inequalities. By choosing a function [math]\displaystyle{ h }[/math] that extracts more information about the random variable, we can prove sharper tail inequalities.

Variance

Definition (variance) - The variance of a random variable [math]\displaystyle{ X }[/math] is defined as

- [math]\displaystyle{ \begin{align} \mathbf{Var}[X]=\mathbf{E}\left[(X-\mathbf{E}[X])^2\right]=\mathbf{E}\left[X^2\right]-(\mathbf{E}[X])^2. \end{align} }[/math]

- The standard deviation of random variable [math]\displaystyle{ X }[/math] is

- [math]\displaystyle{ \delta[X]=\sqrt{\mathbf{Var}[X]}. }[/math]


We have seen that, by linearity of expectation, the expectation of a sum of random variables is the sum of their expectations. It is natural to ask whether the same holds for variances. It turns out that the variance of a sum has an extra term called the covariance.

Definition (covariance) - The covariance of two random variables [math]\displaystyle{ X }[/math] and [math]\displaystyle{ Y }[/math] is

- [math]\displaystyle{ \begin{align} \mathbf{Cov}(X,Y)=\mathbf{E}\left[(X-\mathbf{E}[X])(Y-\mathbf{E}[Y])\right]. \end{align} }[/math]


We have the following theorem for the variance of a sum.

Theorem - For any two random variables [math]\displaystyle{ X }[/math] and [math]\displaystyle{ Y }[/math],

- [math]\displaystyle{ \begin{align} \mathbf{Var}[X+Y]=\mathbf{Var}[X]+\mathbf{Var}[Y]+2\mathbf{Cov}(X,Y). \end{align} }[/math]

- Generally, for any random variables [math]\displaystyle{ X_1,X_2,\ldots,X_n }[/math],

- [math]\displaystyle{ \begin{align} \mathbf{Var}\left[\sum_{i=1}^n X_i\right]=\sum_{i=1}^n\mathbf{Var}[X_i]+\sum_{i\neq j}\mathbf{Cov}(X_i,X_j). \end{align} }[/math]


Proof. The equation for two variables is directly due to the definition of variance and covariance. The equation for [math]\displaystyle{ n }[/math] variables can be deduced from the equation for two variables.

- [math]\displaystyle{ \square }[/math]
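The two-variable identity can be checked exactly on a small joint distribution. In this Python sketch the pair (X, Y), two fair bits that agree with probability 3/4, is an arbitrary dependent example chosen for illustration; exact Fraction arithmetic avoids rounding.

```python
from fractions import Fraction


def expect(joint, f):
    """E[f(X, Y)] for a finite joint pmf {(x, y): probability}."""
    return sum(p * f(x, y) for (x, y), p in joint.items())


def var(joint, f):
    """Var[f(X, Y)] = E[(f - E[f])^2]."""
    m = expect(joint, f)
    return expect(joint, lambda x, y: (f(x, y) - m) ** 2)


def cov(joint):
    """Cov(X, Y) = E[(X - E[X])(Y - E[Y])]."""
    ex = expect(joint, lambda x, y: x)
    ey = expect(joint, lambda x, y: y)
    return expect(joint, lambda x, y: (x - ex) * (y - ey))


# Illustrative dependent pair: X and Y are fair bits that agree with probability 3/4.
joint = {(0, 0): Fraction(3, 8), (0, 1): Fraction(1, 8),
         (1, 0): Fraction(1, 8), (1, 1): Fraction(3, 8)}
lhs = var(joint, lambda x, y: x + y)
rhs = var(joint, lambda x, y: x) + var(joint, lambda x, y: y) + 2 * cov(joint)
assert lhs == rhs
print(lhs, cov(joint))
```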

We will see that when random variables are independent, the variance of their sum is equal to the sum of their variances. To prove this, we first establish a very useful result regarding the expectation of a product.

Theorem - For any two independent random variables [math]\displaystyle{ X }[/math] and [math]\displaystyle{ Y }[/math],

- [math]\displaystyle{ \begin{align} \mathbf{E}[X\cdot Y]=\mathbf{E}[X]\cdot\mathbf{E}[Y]. \end{align} }[/math]


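Proof. For discrete random variables [math]\displaystyle{ X }[/math] and [math]\displaystyle{ Y }[/math], where the second step uses independence,

- [math]\displaystyle{ \begin{align} \mathbf{E}[X\cdot Y] &= \sum_{x,y}xy\Pr[X=x\wedge Y=y]\\ &= \sum_{x,y}xy\Pr[X=x]\Pr[Y=y]\\ &= \sum_{x}x\Pr[X=x]\sum_{y}y\Pr[Y=y]\\ &= \mathbf{E}[X]\cdot\mathbf{E}[Y]. \end{align} }[/math]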

- [math]\displaystyle{ \square }[/math]

With the above theorem, we can show that the covariance of two independent variables is always zero.

Theorem - For any two independent random variables [math]\displaystyle{ X }[/math] and [math]\displaystyle{ Y }[/math],

- [math]\displaystyle{ \begin{align} \mathbf{Cov}(X,Y)=0. \end{align} }[/math]


Proof.

- [math]\displaystyle{ \begin{align} \mathbf{Cov}(X,Y) &=\mathbf{E}\left[(X-\mathbf{E}[X])(Y-\mathbf{E}[Y])\right]\\ &= \mathbf{E}\left[X-\mathbf{E}[X]\right]\mathbf{E}\left[Y-\mathbf{E}[Y]\right] &\qquad(\mbox{Independence})\\ &=0. \end{align} }[/math]

- [math]\displaystyle{ \square }[/math]

We then have the following theorem for the variance of the sum of pairwise independent random variables.

Theorem - For pairwise independent random variables [math]\displaystyle{ X_1,X_2,\ldots,X_n }[/math],

- [math]\displaystyle{ \begin{align} \mathbf{Var}\left[\sum_{i=1}^n X_i\right]=\sum_{i=1}^n\mathbf{Var}[X_i]. \end{align} }[/math]


- Remark

- The theorem holds for pairwise independent random variables, a much weaker requirement than mutual independence. This makes variance-based probability tools work even in weakly random settings. We will see exactly what this means in future lectures.
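A standard example makes the remark concrete: take two independent fair bits [math]\displaystyle{ X_1,X_2 }[/math] and let [math]\displaystyle{ X_3=X_1\oplus X_2 }[/math]. The three bits are pairwise independent but not mutually independent, yet the variance of their sum still equals the sum of their variances. The Python sketch below verifies this by exact enumeration of the four equally likely outcomes.

```python
from fractions import Fraction
from itertools import product

# Sample space: two independent fair bits (x1, x2), each outcome w.p. 1/4.
# X3 = X1 XOR X2 makes (X1, X2, X3) pairwise, but not mutually, independent.
outcomes = [(x1, x2, x1 ^ x2) for x1, x2 in product((0, 1), repeat=2)]
prob = Fraction(1, len(outcomes))


def E(f):
    """Expectation of f over the uniform sample space."""
    return sum(prob * f(w) for w in outcomes)


def Var(f):
    m = E(f)
    return E(lambda w: (f(w) - m) ** 2)


# Pairwise independence: Pr[Xi = a, Xj = b] = 1/4 for all i != j and bits a, b.
for i, j in [(0, 1), (0, 2), (1, 2)]:
    for a, b in product((0, 1), repeat=2):
        assert E(lambda w: int(w[i] == a and w[j] == b)) == Fraction(1, 4)

# ...but not mutual independence: Pr[X1 = X2 = X3 = 1] = 0, not 1/8.
assert E(lambda w: int(w == (1, 1, 1))) == 0

var_of_sum = Var(lambda w: sum(w))
sum_of_vars = sum(Var(lambda w, k=k: w[k]) for k in range(3))
assert var_of_sum == sum_of_vars == Fraction(3, 4)
```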

Variance of binomial distribution

Consider a Bernoulli trial with parameter [math]\displaystyle{ p }[/math]:

- [math]\displaystyle{ X=\begin{cases} 1& \mbox{with probability }p\\ 0& \mbox{with probability }1-p \end{cases} }[/math]

The variance is

- [math]\displaystyle{ \mathbf{Var}[X]=\mathbf{E}[X^2]-(\mathbf{E}[X])^2=\mathbf{E}[X]-(\mathbf{E}[X])^2=p-p^2=p(1-p). }[/math]

Let [math]\displaystyle{ Y }[/math] be a binomial random variable with parameters [math]\displaystyle{ n }[/math] and [math]\displaystyle{ p }[/math], i.e. [math]\displaystyle{ Y=\sum_{i=1}^nY_i }[/math], where the [math]\displaystyle{ Y_i }[/math]'s are i.i.d. Bernoulli trials with parameter [math]\displaystyle{ p }[/math]. The variance is

- [math]\displaystyle{ \begin{align} \mathbf{Var}[Y] &= \mathbf{Var}\left[\sum_{i=1}^nY_i\right]\\ &= \sum_{i=1}^n\mathbf{Var}\left[Y_i\right] &\qquad (\mbox{Independence})\\ &= \sum_{i=1}^np(1-p) &\qquad (\mbox{Bernoulli})\\ &= p(1-p)n. \end{align} }[/math]
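The formula can be cross-checked by computing the mean and variance directly from the binomial probability mass function. The parameters n = 10 and p = 3/10 in this Python sketch are arbitrary.

```python
from fractions import Fraction
from math import comb


def binomial_pmf(n, p):
    """Exact pmf of Binomial(n, p) as a list indexed by k = 0..n."""
    return [comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(n + 1)]


def mean_and_variance(pmf):
    mean = sum(k * q for k, q in enumerate(pmf))
    second = sum(k * k * q for k, q in enumerate(pmf))
    return mean, second - mean**2  # Var[Y] = E[Y^2] - (E[Y])^2


n, p = 10, Fraction(3, 10)
mean, variance = mean_and_variance(binomial_pmf(n, p))
assert mean == n * p and variance == n * p * (1 - p)  # 3 and 21/10
```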

Chebyshev's inequality

Given the expectation and the variance of a random variable, one can derive a stronger tail bound known as Chebyshev's inequality.

Theorem (Chebyshev's Inequality) - For any random variable [math]\displaystyle{ X }[/math] and any [math]\displaystyle{ t\gt 0 }[/math],

- [math]\displaystyle{ \begin{align} \Pr\left[|X-\mathbf{E}[X]| \ge t\right] \le \frac{\mathbf{Var}[X]}{t^2}. \end{align} }[/math]


Proof. Observe that

- [math]\displaystyle{ \Pr[|X-\mathbf{E}[X]| \ge t] = \Pr[(X-\mathbf{E}[X])^2 \ge t^2]. }[/math]

Since [math]\displaystyle{ (X-\mathbf{E}[X])^2 }[/math] is a nonnegative random variable, we can apply Markov's inequality, such that

- [math]\displaystyle{ \Pr[(X-\mathbf{E}[X])^2 \ge t^2] \le \frac{\mathbf{E}[(X-\mathbf{E}[X])^2]}{t^2} =\frac{\mathbf{Var}[X]}{t^2}. }[/math]

- [math]\displaystyle{ \square }[/math]
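To see how tight Chebyshev's inequality is in a concrete case, the Python sketch below compares it with the exact two-sided tail of a Binomial(100, 1/2) variable (mean 50, variance 25). The parameters and the values of t are arbitrary illustrative choices.

```python
from fractions import Fraction
from math import comb


def binomial_two_sided_tail(n, p, t):
    """Exact Pr[|X - np| >= t] for X ~ Binomial(n, p)."""
    mu = n * p
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k)
               for k in range(n + 1) if abs(k - mu) >= t)


n, p = 100, Fraction(1, 2)
variance = n * p * (1 - p)  # 25
for t in (5, 10, 15, 20):
    exact = binomial_two_sided_tail(n, p, t)
    chebyshev = variance / t**2
    assert exact <= chebyshev
    print(t, float(exact), float(chebyshev))
```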

Higher moments

The above two inequalities can be put into a general framework regarding the moments of random variables.

Definition (moments) - The [math]\displaystyle{ k }[/math]th moment of a random variable [math]\displaystyle{ X }[/math] is [math]\displaystyle{ \mathbf{E}[X^k] }[/math].

The more we know about the moments, the more information we have about the distribution, hence in principle, we can get tighter tail bounds. This technique is called the [math]\displaystyle{ k }[/math]th moment method.

We know that the [math]\displaystyle{ k }[/math]th moment is [math]\displaystyle{ \mathbf{E}[X^k] }[/math]. More generally, the [math]\displaystyle{ k }[/math]th moment about [math]\displaystyle{ c }[/math] is [math]\displaystyle{ \mathbf{E}[(X-c)^k] }[/math]. The [math]\displaystyle{ k }[/math]th central moment of [math]\displaystyle{ X }[/math], denoted [math]\displaystyle{ \mu_k[X] }[/math], is defined as [math]\displaystyle{ \mu_k[X]=\mathbf{E}[(X-\mathbf{E}[X])^k] }[/math]. So the variance is just the second central moment [math]\displaystyle{ \mu_2[X] }[/math].

The [math]\displaystyle{ k }[/math]th moment method is stated by the following theorem.

Theorem (the [math]\displaystyle{ k }[/math]th moment method) - For even [math]\displaystyle{ k\gt 0 }[/math], and any [math]\displaystyle{ t\gt 0 }[/math],

- [math]\displaystyle{ \begin{align} \Pr\left[|X-\mathbf{E}[X]| \ge t\right] \le \frac{\mu_k[X]}{t^k}. \end{align} }[/math]


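Proof. Since [math]\displaystyle{ k }[/math] is even, [math]\displaystyle{ (X-\mathbf{E}[X])^k }[/math] is nonnegative, and [math]\displaystyle{ |X-\mathbf{E}[X]|\ge t }[/math] if and only if [math]\displaystyle{ (X-\mathbf{E}[X])^k\ge t^k }[/math]. Apply Markov's inequality to [math]\displaystyle{ (X-\mathbf{E}[X])^k }[/math].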

- [math]\displaystyle{ \square }[/math]

What about odd [math]\displaystyle{ k }[/math]? For odd [math]\displaystyle{ k }[/math], we would have to apply Markov's inequality to [math]\displaystyle{ |X-\mathbf{E}[X]|^k }[/math], but expectations of absolute values can be hard to estimate.
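The trade-off between moments can be seen numerically. The Python sketch below computes the exact second and fourth central moments of a Binomial(100, 1/2) variable (an arbitrary example) and prints the bounds [math]\displaystyle{ \mu_2/t^2 }[/math] and [math]\displaystyle{ \mu_4/t^4 }[/math]: for t = 10 and t = 20 the fourth-moment bound is smaller, while for t = 5 Chebyshev's inequality is better.

```python
from fractions import Fraction
from math import comb


def central_moment(n, p, k):
    """Exact k-th central moment E[(X - E[X])^k] of X ~ Binomial(n, p)."""
    mu = n * p
    return sum(comb(n, j) * p**j * (1 - p) ** (n - j) * (j - mu) ** k
               for j in range(n + 1))


n, p = 100, Fraction(1, 2)
mu2, mu4 = central_moment(n, p, 2), central_moment(n, p, 4)
for t in (5, 10, 20):
    # k = 2 is Chebyshev's inequality; k = 4 is the fourth moment method.
    print(t, float(mu2 / t**2), float(mu4 / t**4))
```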

Select the Median

The selection problem is the problem of finding the [math]\displaystyle{ k }[/math]th smallest element in a set [math]\displaystyle{ S }[/math]. A typical case of selection problem is finding the median.

Definition - The median of a set [math]\displaystyle{ S }[/math] of [math]\displaystyle{ n }[/math] elements is the [math]\displaystyle{ (\lceil n/2\rceil) }[/math]th element in the sorted order of [math]\displaystyle{ S }[/math].

The median can be found in [math]\displaystyle{ O(n\log n) }[/math] time by sorting. There is a linear-time deterministic algorithm, "median of medians" algorithm, which is quite sophisticated. Here we introduce a much simpler randomized algorithm which also runs in linear time.

Randomized median selection algorithm

We introduce a randomized median selection algorithm called LazySelect, which is a variant of a randomized algorithm due to Floyd and Rivest.

The idea of this algorithm is random sampling. For a set [math]\displaystyle{ S }[/math], let [math]\displaystyle{ m\in S }[/math] denote the median. We observe that if we can find two elements [math]\displaystyle{ d,u\in S }[/math] satisfying the following properties:

- The median is between [math]\displaystyle{ d }[/math] and [math]\displaystyle{ u }[/math] in the sorted order, i.e. [math]\displaystyle{ d\le m\le u }[/math];

- The total number of elements between [math]\displaystyle{ d }[/math] and [math]\displaystyle{ u }[/math] is small; specifically, for [math]\displaystyle{ C=\{x\in S\mid d\le x\le u\} }[/math], [math]\displaystyle{ |C|=o(n/\log n) }[/math].

Given [math]\displaystyle{ d }[/math] and [math]\displaystyle{ u }[/math] with these two properties, we can compute the rank of [math]\displaystyle{ d }[/math] in [math]\displaystyle{ S }[/math] and construct [math]\displaystyle{ C }[/math] in linear time, and sort [math]\displaystyle{ C }[/math] in [math]\displaystyle{ o(n) }[/math] time since [math]\displaystyle{ |C|=o(n/\log n) }[/math]. Therefore, the median [math]\displaystyle{ m }[/math] of [math]\displaystyle{ S }[/math] can be picked from [math]\displaystyle{ C }[/math] in linear time.

So how can we select such elements [math]\displaystyle{ d }[/math] and [math]\displaystyle{ u }[/math] from [math]\displaystyle{ S }[/math]? Certainly sorting [math]\displaystyle{ S }[/math] would give us the elements, but isn't that exactly what we want to avoid in the first place?

Observe that [math]\displaystyle{ d }[/math] and [math]\displaystyle{ u }[/math] only need to satisfy these constraints roughly. This suggests that we can construct a sketch of [math]\displaystyle{ S }[/math] that is small enough to sort cheaply yet roughly represents [math]\displaystyle{ S }[/math], and then pick [math]\displaystyle{ d }[/math] and [math]\displaystyle{ u }[/math] from this sketch. We construct the sketch by randomly sampling a relatively small number of elements from [math]\displaystyle{ S }[/math]. The strategy of the algorithm is then outlined as follows:

- Sample a set [math]\displaystyle{ R }[/math] of elements from [math]\displaystyle{ S }[/math].

- Sort [math]\displaystyle{ R }[/math] and choose [math]\displaystyle{ d }[/math] and [math]\displaystyle{ u }[/math] somewhere around the median of [math]\displaystyle{ R }[/math].

- If [math]\displaystyle{ d }[/math] and [math]\displaystyle{ u }[/math] have the desired properties, compute the median in linear time; otherwise the algorithm fails.

The parameters to be fixed are: the size of [math]\displaystyle{ R }[/math] (small enough to sort in linear time, and large enough to contain sufficient information about [math]\displaystyle{ S }[/math]); and the ranks of [math]\displaystyle{ d }[/math] and [math]\displaystyle{ u }[/math] in [math]\displaystyle{ R }[/math] (far enough apart that [math]\displaystyle{ m }[/math] lies between them, and close enough that [math]\displaystyle{ C }[/math] can be sorted in linear time).

We choose the size of [math]\displaystyle{ R }[/math] as [math]\displaystyle{ n^{3/4} }[/math], and [math]\displaystyle{ d }[/math] and [math]\displaystyle{ u }[/math] are within [math]\displaystyle{ \sqrt{n} }[/math] range around the median of [math]\displaystyle{ R }[/math].

LazySelect Input: a set [math]\displaystyle{ S }[/math] of [math]\displaystyle{ n }[/math] elements over totally ordered domain.

- Pick a multi-set [math]\displaystyle{ R }[/math] of [math]\displaystyle{ \left\lceil n^{3/4}\right\rceil }[/math] elements in [math]\displaystyle{ S }[/math], chosen independently and uniformly at random with replacement, and sort [math]\displaystyle{ R }[/math].

- Let [math]\displaystyle{ d }[/math] be the [math]\displaystyle{ \left\lfloor\frac{1}{2}n^{3/4}-\sqrt{n}\right\rfloor }[/math]-th smallest element in [math]\displaystyle{ R }[/math], and let [math]\displaystyle{ u }[/math] be the [math]\displaystyle{ \left\lceil\frac{1}{2}n^{3/4}+\sqrt{n}\right\rceil }[/math]-th smallest element in [math]\displaystyle{ R }[/math].

- Construct [math]\displaystyle{ C=\{x\in S\mid d\le x\le u\} }[/math] and compute the ranks [math]\displaystyle{ r_d=|\{x\in S\mid x\le d\}| }[/math] and [math]\displaystyle{ r_u=|\{x\in S\mid x\le u\}| }[/math].

- If [math]\displaystyle{ r_d\gt \frac{n}{2} }[/math] or [math]\displaystyle{ r_u\lt \frac{n}{2} }[/math] or [math]\displaystyle{ |C|\gt 4n^{3/4} }[/math] then return FAIL.

- Sort [math]\displaystyle{ C }[/math] and return the [math]\displaystyle{ \left(\left\lceil\frac{n}{2}\right\rceil-r_d+1\right) }[/math]th element in the sorted order of [math]\displaystyle{ C }[/math].

"Sample with replacement" means that after sampling an element, we put it back into the set. In this way, the sampled elements are independent and identically distributed (i.i.d.). In the above algorithm, we sample with replacement only for the convenience of the analysis.

Analysis

The algorithm always terminates in linear time because each line of the algorithm costs at most linear time. The last three lines guarantee that the algorithm returns the correct median if it does not fail.
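The following Python sketch follows the five lines of LazySelect and illustrates both claims: apart from sorting the small sets [math]\displaystyle{ R }[/math] and [math]\displaystyle{ C }[/math], each step is a linear pass over [math]\displaystyle{ S }[/math], and a run that does not FAIL returns the median. Two details are assumptions of this sketch rather than the pseudocode itself: the sample ranks of [math]\displaystyle{ d }[/math] and [math]\displaystyle{ u }[/math] are clamped to [math]\displaystyle{ [1,|R|] }[/math] so that tiny inputs do not produce invalid indices, and Line 4 checks directly whether rank [math]\displaystyle{ \lceil n/2\rceil }[/math] falls inside [math]\displaystyle{ C }[/math], counting the elements strictly smaller than [math]\displaystyle{ d }[/math]. The function returns None to signal FAIL.

```python
import math
import random


def lazy_select_median(S, rng):
    """One run of LazySelect on a list S of distinct, mutually comparable elements.

    Returns the ceil(n/2)-th smallest element of S, or None to signal FAIL.
    """
    n = len(S)
    k = math.ceil(n / 2)                          # rank of the median (1-indexed)
    size = math.ceil(n ** 0.75)
    # Line 1: sample ceil(n^{3/4}) elements with replacement and sort them.
    R = sorted(rng.choice(S) for _ in range(size))
    # Line 2: d and u sit about sqrt(n) ranks below/above the median of R
    # (ranks clamped to [1, |R|], an assumption for very small n).
    lo = min(max(math.floor(n ** 0.75 / 2 - math.sqrt(n)), 1), size)
    hi = min(max(math.ceil(n ** 0.75 / 2 + math.sqrt(n)), 1), size)
    d, u = R[lo - 1], R[hi - 1]
    # Line 3: linear passes build C and count the elements smaller than d.
    C = [x for x in S if d <= x <= u]
    below_d = sum(1 for x in S if x < d)
    # Line 4: FAIL if the median is not inside C, or if C is too large.
    if below_d >= k or below_d + len(C) < k or len(C) > 4 * n ** 0.75:
        return None
    # Line 5: sort C and read off the median by its offset from d.
    return sorted(C)[k - below_d - 1]


rng = random.Random(42)
S = rng.sample(range(10**6), 10_001)
runs = [lazy_select_median(S, rng) for _ in range(20)]
true_median = sorted(S)[math.ceil(len(S) / 2) - 1]
print(sum(r is None for r in runs), "FAILs out of", len(runs))
assert all(r is None or r == true_median for r in runs)
```

Repeating the algorithm until it does not FAIL turns it into a Las Vegas algorithm with expected linear running time.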

We then only need to bound the probability that the algorithm returns a FAIL. Let [math]\displaystyle{ m\in S }[/math] be the median of [math]\displaystyle{ S }[/math]. By Line 4, we know that the algorithm returns a FAIL if and only if at least one of the following events occurs:

- [math]\displaystyle{ \mathcal{E}_1: Y=|\{x\in R\mid x\le m\}|\lt \frac{1}{2}n^{3/4}-\sqrt{n} }[/math];

- [math]\displaystyle{ \mathcal{E}_2: Z=|\{x\in R\mid x\ge m\}|\lt \frac{1}{2}n^{3/4}-\sqrt{n} }[/math];

- [math]\displaystyle{ \mathcal{E}_3: |C|\gt 4n^{3/4} }[/math].

[math]\displaystyle{ \mathcal{E}_3 }[/math] is exactly the third condition in Line 4. [math]\displaystyle{ \mathcal{E}_1 }[/math] and [math]\displaystyle{ \mathcal{E}_2 }[/math] are a bit trickier. The first condition in Line 4 is [math]\displaystyle{ r_d\gt \frac{n}{2} }[/math], which does not look exactly like [math]\displaystyle{ \mathcal{E}_1 }[/math]; however, both [math]\displaystyle{ \mathcal{E}_1 }[/math] and [math]\displaystyle{ r_d\gt \frac{n}{2} }[/math] are equivalent to the same event: the [math]\displaystyle{ \left\lfloor\frac{1}{2}n^{3/4}-\sqrt{n}\right\rfloor }[/math]-th smallest element in [math]\displaystyle{ R }[/math] (that is, [math]\displaystyle{ d }[/math]) is greater than [math]\displaystyle{ m }[/math]. Similarly, [math]\displaystyle{ \mathcal{E}_2 }[/math] is equivalent to the second condition of Line 4.

We now bound the probabilities of these events one by one.

Lemma 1 - [math]\displaystyle{ \Pr[\mathcal{E}_1]\le \frac{1}{4}n^{-1/4} }[/math].

Proof. Let [math]\displaystyle{ X_i }[/math] be the [math]\displaystyle{ i }[/math]th sampled element in Line 1 of the algorithm. Let [math]\displaystyle{ Y_i }[/math] be an indicator random variable such that

- [math]\displaystyle{ Y_i= \begin{cases} 1 & \mbox{if }X_i\le m,\\ 0 & \mbox{otherwise.} \end{cases} }[/math]

It is obvious that [math]\displaystyle{ Y=\sum_{i=1}^{n^{3/4}}Y_i }[/math], where [math]\displaystyle{ Y }[/math] is as defined in [math]\displaystyle{ \mathcal{E}_1 }[/math]. For every [math]\displaystyle{ X_i }[/math], there are [math]\displaystyle{ \left\lceil\frac{n}{2}\right\rceil }[/math] elements in [math]\displaystyle{ S }[/math] that are less than or equal to the median. The probability that [math]\displaystyle{ Y_i=1 }[/math] is

- [math]\displaystyle{ p=\Pr[Y_i=1]=\Pr[X_i\le m]=\frac{1}{n}\left\lceil\frac{n}{2}\right\rceil, }[/math]

which is within the range of [math]\displaystyle{ \left[\frac{1}{2},\frac{1}{2}+\frac{1}{2n}\right] }[/math]. Thus

- [math]\displaystyle{ \mathbf{E}[Y]=n^{3/4}p\ge \frac{1}{2}n^{3/4}. }[/math]

The event [math]\displaystyle{ \mathcal{E}_1 }[/math] is defined as that [math]\displaystyle{ Y\lt \frac{1}{2}n^{3/4}-\sqrt{n} }[/math].

Note that [math]\displaystyle{ Y_i }[/math]'s are Bernoulli trials, and [math]\displaystyle{ Y }[/math] is the sum of [math]\displaystyle{ n^{3/4} }[/math] Bernoulli trials, which follows binomial distribution with parameters [math]\displaystyle{ n^{3/4} }[/math] and [math]\displaystyle{ p }[/math]. Thus, the variance is

- [math]\displaystyle{ \mathbf{Var}[Y]=n^{3/4}p(1-p)\le \frac{1}{4}n^{3/4}. }[/math]

Applying Chebyshev's inequality,

- [math]\displaystyle{ \begin{align} \Pr[\mathcal{E}_1] &= \Pr\left[Y\lt \frac{1}{2}n^{3/4}-\sqrt{n}\right]\\ &\le \Pr\left[|Y-\mathbf{E}[Y]|\gt \sqrt{n}\right]\\ &\le \frac{\mathbf{Var}[Y]}{n}\\ &\le\frac{1}{4}n^{-1/4}. \end{align} }[/math]

- [math]\displaystyle{ \square }[/math]
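Chebyshev's inequality is far from tight here. Under the additional assumption that [math]\displaystyle{ n }[/math] is a perfect fourth power (so that [math]\displaystyle{ n^{3/4} }[/math] and [math]\displaystyle{ \sqrt{n} }[/math] are integers), the Python sketch below computes [math]\displaystyle{ \Pr[\mathcal{E}_1] }[/math] exactly with integer arithmetic and compares it with the bound [math]\displaystyle{ \frac{1}{4}n^{-1/4} }[/math] of Lemma 1.

```python
from fractions import Fraction
from math import comb


def prob_E1(q):
    """Exact Pr[E1] from Lemma 1 for n = q**4 (so n^{3/4} = q**3 and sqrt(n) = q**2).

    Y ~ Binomial(n^{3/4}, p) with p = ceil(n/2)/n; E1 is the event Y < n^{3/4}/2 - sqrt(n).
    """
    n, m, s = q**4, q**3, q**2
    a = -(-n // 2)                          # ceil(n/2): elements of S that are <= m
    # Pr[Y = k] = C(m, k) a^k (n - a)^(m - k) / n^m; 2k < m - 2s means k < m/2 - s.
    num = sum(comb(m, k) * a**k * (n - a) ** (m - k)
              for k in range(m + 1) if 2 * k < m - 2 * s)
    return Fraction(num, n**m)


for q in (10, 16):
    exact, bound = prob_E1(q), Fraction(1, 4 * q)   # n^{-1/4} / 4 = 1 / (4q)
    print(q**4, float(exact), float(bound))
```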

By a similar analysis, we can obtain the following bound for the event [math]\displaystyle{ \mathcal{E}_2 }[/math].

Lemma 2 - [math]\displaystyle{ \Pr[\mathcal{E}_2]\le \frac{1}{4}n^{-1/4} }[/math].

We now bound the probability of the event [math]\displaystyle{ \mathcal{E}_3 }[/math].

Lemma 3 - [math]\displaystyle{ \Pr[\mathcal{E}_3]\le \frac{1}{2}n^{-1/4} }[/math].

Proof. The event [math]\displaystyle{ \mathcal{E}_3 }[/math] is defined as [math]\displaystyle{ |C|\gt 4 n^{3/4} }[/math], which, by the pigeonhole principle, implies that at least one of the following must be true:

- [math]\displaystyle{ \mathcal{E}_3' }[/math]: at least [math]\displaystyle{ 2n^{3/4} }[/math] elements of [math]\displaystyle{ C }[/math] are greater than [math]\displaystyle{ m }[/math];

- [math]\displaystyle{ \mathcal{E}_3'' }[/math]: at least [math]\displaystyle{ 2n^{3/4} }[/math] elements of [math]\displaystyle{ C }[/math] are smaller than [math]\displaystyle{ m }[/math].

We bound the probability that [math]\displaystyle{ \mathcal{E}_3' }[/math] occurs; the second will have the same bound by symmetry.

Recall that [math]\displaystyle{ C }[/math] is the region in [math]\displaystyle{ S }[/math] between [math]\displaystyle{ d }[/math] and [math]\displaystyle{ u }[/math]. If there are at least [math]\displaystyle{ 2n^{3/4} }[/math] elements of [math]\displaystyle{ C }[/math] greater than the median [math]\displaystyle{ m }[/math] of [math]\displaystyle{ S }[/math], then the rank of [math]\displaystyle{ u }[/math] in the sorted order of [math]\displaystyle{ S }[/math] must be at least [math]\displaystyle{ \frac{1}{2}n+2n^{3/4} }[/math] and thus [math]\displaystyle{ R }[/math] has at least [math]\displaystyle{ \frac{1}{2}n^{3/4}-\sqrt{n} }[/math] samples among the [math]\displaystyle{ \frac{1}{2}n-2n^{3/4} }[/math] largest elements in [math]\displaystyle{ S }[/math].

Let [math]\displaystyle{ X_i\in\{0,1\} }[/math] indicate whether the [math]\displaystyle{ i }[/math]th sample is among the [math]\displaystyle{ \frac{1}{2}n-2n^{3/4} }[/math] largest elements in [math]\displaystyle{ S }[/math]. Let [math]\displaystyle{ X=\sum_{i=1}^{n^{3/4}}X_i }[/math] be the number of samples in [math]\displaystyle{ R }[/math] among the [math]\displaystyle{ \frac{1}{2}n-2n^{3/4} }[/math] largest elements in [math]\displaystyle{ S }[/math]. It holds that

- [math]\displaystyle{ p=\Pr[X_i=1]=\frac{\frac{1}{2}n-2n^{3/4}}{n}=\frac{1}{2}-2n^{-1/4} }[/math].

[math]\displaystyle{ X }[/math] is a binomial random variable with

- [math]\displaystyle{ \mathbf{E}[X]=n^{3/4}p=\frac{1}{2}n^{3/4}-2\sqrt{n}, }[/math]

and

- [math]\displaystyle{ \mathbf{Var}[X]=n^{3/4}p(1-p)=\frac{1}{4}n^{3/4}-4n^{1/4}\lt \frac{1}{4}n^{3/4}. }[/math]

Applying Chebyshev's inequality,

- [math]\displaystyle{ \begin{align} \Pr[\mathcal{E}_3'] &= \Pr\left[X\ge\frac{1}{2}n^{3/4}-\sqrt{n}\right]\\ &\le \Pr\left[|X-\mathbf{E}[X]|\ge\sqrt{n}\right]\\ &\le \frac{\mathbf{Var}[X]}{n}\\ &\le\frac{1}{4}n^{-1/4}. \end{align} }[/math]

Symmetrically, we have that [math]\displaystyle{ \Pr[\mathcal{E}_3'']\le\frac{1}{4}n^{-1/4} }[/math].

Applying the union bound

- [math]\displaystyle{ \Pr[\mathcal{E}_3]\le \Pr[\mathcal{E}_3']+\Pr[\mathcal{E}_3'']\le\frac{1}{2}n^{-1/4}. }[/math]

- [math]\displaystyle{ \square }[/math]

Combining the three bounds by the union bound, the probability that the algorithm returns FAIL is at most

- [math]\displaystyle{ \Pr[\mathcal{E}_1]+\Pr[\mathcal{E}_2]+\Pr[\mathcal{E}_3]\le n^{-1/4}. }[/math]

Therefore, the algorithm always terminates in linear time, and it returns the correct median with probability at least [math]\displaystyle{ 1-n^{-1/4} }[/math].

Chernoff Bound

Suppose that we have a fair coin. If we toss it once, the outcome is completely unpredictable. But if we toss it, say, 1000 times, the number of HEADs is very likely to be around 500. This striking phenomenon, illustrated in the right figure, is called concentration. The Chernoff bound captures the concentration of independent trials.

The Chernoff bound is a tail bound for sums of independent random variables that can give exponentially sharp bounds.

Before proving the Chernoff bound, we introduce moment generating functions.

Moment generating functions

The more we know about the moments of a random variable [math]\displaystyle{ X }[/math], the more information we would have about [math]\displaystyle{ X }[/math]. There is a so-called moment generating function, which "packs" all the information about the moments of [math]\displaystyle{ X }[/math] into one function.

Definition - The moment generating function of a random variable [math]\displaystyle{ X }[/math] is defined as [math]\displaystyle{ \mathbf{E}\left[\mathrm{e}^{\lambda X}\right] }[/math] where [math]\displaystyle{ \lambda }[/math] is the parameter of the function.

By Taylor's expansion and the linearity of expectations,

- [math]\displaystyle{ \begin{align} \mathbf{E}\left[\mathrm{e}^{\lambda X}\right] &= \mathbf{E}\left[\sum_{k=0}^\infty\frac{\lambda^k}{k!}X^k\right]\\ &=\sum_{k=0}^\infty\frac{\lambda^k}{k!}\mathbf{E}\left[X^k\right] \end{align} }[/math]

The moment generating function [math]\displaystyle{ \mathbf{E}\left[\mathrm{e}^{\lambda X}\right] }[/math] is a function of [math]\displaystyle{ \lambda }[/math].
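For a single Bernoulli trial with parameter [math]\displaystyle{ p }[/math], every moment [math]\displaystyle{ \mathbf{E}[X^k] }[/math] with [math]\displaystyle{ k\ge 1 }[/math] equals [math]\displaystyle{ p }[/math] (since [math]\displaystyle{ X^k=X }[/math]), so the series above should sum to [math]\displaystyle{ 1+p(e^\lambda-1) }[/math]. The Python sketch below truncates the series after 30 terms (an arbitrary cutoff) and compares the two; the values p = 0.3 and λ = 0.7 are arbitrary.

```python
import math


def mgf_from_moments(moment, lam, terms=30):
    """Truncated series sum_{k < terms} lam^k / k! * E[X^k]; moment(k) returns E[X^k]."""
    return sum(lam**k / math.factorial(k) * moment(k) for k in range(terms))


p, lam = 0.3, 0.7


def bernoulli_moment(k):
    """E[X^k] for X ~ Bernoulli(p): X^k = X for a 0-1 variable, and X^0 = 1."""
    return 1.0 if k == 0 else p


series = mgf_from_moments(bernoulli_moment, lam)
closed_form = 1 + p * (math.exp(lam) - 1)   # E[e^{lam X}] = (1 - p) + p e^{lam}
assert math.isclose(series, closed_form)
print(series, closed_form)
```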

The Chernoff bound

The Chernoff bounds are exponentially sharp tail inequalities for the sum of independent trials. The bounds are obtained by applying Markov's inequality to the moment generating function of the sum of independent trials, with some appropriate choice of the parameter [math]\displaystyle{ \lambda }[/math].

Chernoff bound (the upper tail) - Let [math]\displaystyle{ X=\sum_{i=1}^n X_i }[/math], where [math]\displaystyle{ X_1, X_2, \ldots, X_n }[/math] are independent Poisson trials, i.e. independent 0-1 random variables whose success probabilities may differ. Let [math]\displaystyle{ \mu=\mathbf{E}[X] }[/math].

- Then for any [math]\displaystyle{ \delta\gt 0 }[/math],

- [math]\displaystyle{ \Pr[X\ge (1+\delta)\mu]\le\left(\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\right)^{\mu}. }[/math]

Proof. For any [math]\displaystyle{ \lambda\gt 0 }[/math], [math]\displaystyle{ X\ge (1+\delta)\mu }[/math] is equivalent to [math]\displaystyle{ e^{\lambda X}\ge e^{\lambda (1+\delta)\mu} }[/math], thus

- [math]\displaystyle{ \begin{align} \Pr[X\ge (1+\delta)\mu] &= \Pr\left[e^{\lambda X}\ge e^{\lambda (1+\delta)\mu}\right]\\ &\le \frac{\mathbf{E}\left[e^{\lambda X}\right]}{e^{\lambda (1+\delta)\mu}}, \end{align} }[/math]

where the last step follows by Markov's inequality.

Computing the moment generating function [math]\displaystyle{ \mathbf{E}[e^{\lambda X}] }[/math]:

- [math]\displaystyle{ \begin{align} \mathbf{E}\left[e^{\lambda X}\right] &= \mathbf{E}\left[e^{\lambda \sum_{i=1}^n X_i}\right]\\ &= \mathbf{E}\left[\prod_{i=1}^n e^{\lambda X_i}\right]\\ &= \prod_{i=1}^n \mathbf{E}\left[e^{\lambda X_i}\right]. & (\mbox{for independent random variables}) \end{align} }[/math]

Let [math]\displaystyle{ p_i=\Pr[X_i=1] }[/math] for [math]\displaystyle{ i=1,2,\ldots,n }[/math]. Then,

- [math]\displaystyle{ \mu=\mathbf{E}[X]=\mathbf{E}\left[\sum_{i=1}^n X_i\right]=\sum_{i=1}^n\mathbf{E}[X_i]=\sum_{i=1}^n p_i }[/math].

We bound the moment generating function for each individual [math]\displaystyle{ X_i }[/math] as follows.

- [math]\displaystyle{ \begin{align} \mathbf{E}\left[e^{\lambda X_i}\right] &= p_i\cdot e^{\lambda\cdot 1}+(1-p_i)\cdot e^{\lambda\cdot 0}\\ &= 1+p_i(e^\lambda -1)\\ &\le e^{p_i(e^\lambda-1)}, \end{align} }[/math]

where in the last step we use the inequality [math]\displaystyle{ 1+y\le e^y }[/math], which holds for all real [math]\displaystyle{ y }[/math] (here [math]\displaystyle{ y=p_i(e^\lambda-1) }[/math]). This turns the product into an exponential of the sum of the [math]\displaystyle{ p_i }[/math], which is [math]\displaystyle{ \mu }[/math].

Therefore,

- [math]\displaystyle{ \begin{align} \mathbf{E}\left[e^{\lambda X}\right] &= \prod_{i=1}^n \mathbf{E}\left[e^{\lambda X_i}\right]\\ &\le \prod_{i=1}^n e^{p_i(e^\lambda-1)}\\ &= \exp\left(\sum_{i=1}^n p_i(e^{\lambda}-1)\right)\\ &= e^{(e^\lambda-1)\mu}. \end{align} }[/math]

Thus, we have shown that for any [math]\displaystyle{ \lambda\gt 0 }[/math],

- [math]\displaystyle{ \begin{align} \Pr[X\ge (1+\delta)\mu] &\le \frac{\mathbf{E}\left[e^{\lambda X}\right]}{e^{\lambda (1+\delta)\mu}}\\ &\le \frac{e^{(e^\lambda-1)\mu}}{e^{\lambda (1+\delta)\mu}}\\ &= \left(\frac{e^{(e^\lambda-1)}}{e^{\lambda (1+\delta)}}\right)^\mu \end{align} }[/math].

For any [math]\displaystyle{ \delta\gt 0 }[/math], we can let [math]\displaystyle{ \lambda=\ln(1+\delta)\gt 0 }[/math] to get

- [math]\displaystyle{ \Pr[X\ge (1+\delta)\mu]\le\left(\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\right)^{\mu}. }[/math]

- [math]\displaystyle{ \square }[/math]

The idea of the proof is actually quite clear: we apply Markov's inequality to [math]\displaystyle{ e^{\lambda X} }[/math] and then estimate the moment generating function [math]\displaystyle{ \mathbf{E}[e^{\lambda X}] }[/math]. To make the bound as tight as possible, we minimize [math]\displaystyle{ \frac{e^{(e^\lambda-1)}}{e^{\lambda (1+\delta)}} }[/math] over [math]\displaystyle{ \lambda\gt 0 }[/math] by setting [math]\displaystyle{ \lambda=\ln(1+\delta) }[/math]; this choice is justified by setting the derivative of the exponent [math]\displaystyle{ e^\lambda-1-\lambda(1+\delta) }[/math] to zero.
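Both steps can be checked numerically. The Python sketch below (the values δ = 0.5, n = 200 and p = 0.1 are arbitrary assumptions) searches a grid of λ values for the minimizer of the per-μ factor, which should land near [math]\displaystyle{ \ln(1+\delta) }[/math], and then compares the resulting bound with the exact upper tail of a Binomial(n, p) variable, the special case in which all [math]\displaystyle{ p_i }[/math] are equal.

```python
import math


def chernoff_factor(lam, delta):
    """The per-mu factor e^{(e^lam - 1)} / e^{lam (1 + delta)} from the proof."""
    return math.exp(math.exp(lam) - 1 - lam * (1 + delta))


def chernoff_upper(mu, delta):
    """(e^delta / (1 + delta)^(1 + delta))^mu."""
    return math.exp(mu * (delta - (1 + delta) * math.log1p(delta)))


delta = 0.5
grid = [i / 1000 for i in range(1, 3000)]       # lambda in (0, 3)
best = min(grid, key=lambda lam: chernoff_factor(lam, delta))
print(best, math.log1p(delta))                  # both close to ln(1.5)

# Compare with the exact tail of X ~ Binomial(n, p), a sum of n Bernoulli(p) trials.
n, p = 200, 0.1
mu = n * p
t = math.ceil((1 + delta) * mu)                 # X >= (1 + delta) mu  <=>  X >= t
exact = sum(math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(t, n + 1))
assert exact <= chernoff_upper(mu, delta)
print(exact, chernoff_upper(mu, delta))
```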

We then proceed to the lower tail, the probability that the random variable deviates below the mean value:

Chernoff bound (the lower tail) - Let [math]\displaystyle{ X=\sum_{i=1}^n X_i }[/math], where [math]\displaystyle{ X_1, X_2, \ldots, X_n }[/math] are independent Poisson trials. Let [math]\displaystyle{ \mu=\mathbf{E}[X] }[/math].

- Then for any [math]\displaystyle{ 0\lt \delta\lt 1 }[/math],

- [math]\displaystyle{ \Pr[X\le (1-\delta)\mu]\le\left(\frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}}\right)^{\mu}. }[/math]

Proof. For any [math]\displaystyle{ \lambda\lt 0 }[/math], [math]\displaystyle{ X\le (1-\delta)\mu }[/math] is equivalent to [math]\displaystyle{ e^{\lambda X}\ge e^{\lambda (1-\delta)\mu} }[/math], so by the same analysis as in the upper tail version,

- [math]\displaystyle{ \begin{align} \Pr[X\le (1-\delta)\mu] &= \Pr\left[e^{\lambda X}\ge e^{\lambda (1-\delta)\mu}\right]\\ &\le \frac{\mathbf{E}\left[e^{\lambda X}\right]}{e^{\lambda (1-\delta)\mu}}\\ &\le \left(\frac{e^{(e^\lambda-1)}}{e^{\lambda (1-\delta)}}\right)^\mu. \end{align} }[/math]

For any [math]\displaystyle{ 0\lt \delta\lt 1 }[/math], we can let [math]\displaystyle{ \lambda=\ln(1-\delta)\lt 0 }[/math] to get

- [math]\displaystyle{ \Pr[X\le (1-\delta)\mu]\le\left(\frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}}\right)^{\mu}. }[/math]

- [math]\displaystyle{ \square }[/math]
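The lower-tail bound can be compared with an exact binomial tail in the same way. In this Python sketch the values n = 200, p = 0.1 and the three values of δ are arbitrary assumptions.

```python
import math


def chernoff_lower(mu, delta):
    """(e^{-delta} / (1 - delta)^(1 - delta))^mu, valid for 0 < delta < 1."""
    return math.exp(mu * (-delta - (1 - delta) * math.log1p(-delta)))


n, p = 200, 0.1
mu = n * p
for delta in (0.25, 0.5, 0.75):
    t = math.floor((1 - delta) * mu)            # X <= (1 - delta) mu  <=>  X <= t
    exact = sum(math.comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(t + 1))
    bound = chernoff_lower(mu, delta)
    assert exact <= bound
    print(delta, exact, bound)
```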

Some useful special forms of the bounds can be derived directly from the general forms above. They make it clearer why the bounds are called exponentially sharp.

Useful forms of the Chernoff bound - Let [math]\displaystyle{ X=\sum_{i=1}^n X_i }[/math], where [math]\displaystyle{ X_1, X_2, \ldots, X_n }[/math] are independent Poisson trials. Let [math]\displaystyle{ \mu=\mathbf{E}[X] }[/math]. Then

- 1. for [math]\displaystyle{ 0\lt \delta\le 1 }[/math],

- [math]\displaystyle{ \Pr[X\ge (1+\delta)\mu]\lt \exp\left(-\frac{\mu\delta^2}{3}\right); }[/math]

- [math]\displaystyle{ \Pr[X\le (1-\delta)\mu]\lt \exp\left(-\frac{\mu\delta^2}{2}\right); }[/math]

- 2. for [math]\displaystyle{ t\ge 2e\mu }[/math],

- [math]\displaystyle{ \Pr[X\ge t]\le 2^{-t}. }[/math]

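Proof. To obtain the bounds in (1), we need to show that [math]\displaystyle{ \frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\le e^{-\delta^2/3} }[/math] for [math]\displaystyle{ 0\lt \delta\le 1 }[/math] and [math]\displaystyle{ \frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}}\le e^{-\delta^2/2} }[/math] for [math]\displaystyle{ 0\lt \delta\lt 1 }[/math]. Taking logarithms, these become [math]\displaystyle{ f(\delta)=\delta-(1+\delta)\ln(1+\delta)+\frac{\delta^2}{3}\le 0 }[/math] and [math]\displaystyle{ g(\delta)=-\delta-(1-\delta)\ln(1-\delta)+\frac{\delta^2}{2}\le 0 }[/math]. Both functions vanish at [math]\displaystyle{ \delta=0 }[/math], and standard analysis of their derivatives shows that they are nonpositive on the stated ranges; for instance, [math]\displaystyle{ g'(\delta)=\ln(1-\delta)+\delta\le 0 }[/math]. To obtain the bound in (2), let [math]\displaystyle{ t=(1+\delta)\mu }[/math]. Then [math]\displaystyle{ \delta=t/\mu-1\ge 2e-1 }[/math]. Hence,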

- [math]\displaystyle{ \begin{align} \Pr[X\ge(1+\delta)\mu] &\le \left(\frac{e^\delta}{(1+\delta)^{(1+\delta)}}\right)^\mu\\ &\le \left(\frac{e}{1+\delta}\right)^{(1+\delta)\mu}\\ &\le \left(\frac{e}{2e}\right)^t\\ &\le 2^{-t} \end{align} }[/math]

- [math]\displaystyle{ \square }[/math]
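The "standard analysis" step and form (2) can also be checked numerically. The sketch below evaluates the logarithms of both sides of the two inequalities used for (1) on a fine grid of [math]\displaystyle{ \delta }[/math], and compares the exact binomial tail [math]\displaystyle{ \Pr[X\ge t] }[/math] with [math]\displaystyle{ 2^{-t} }[/math] for [math]\displaystyle{ t\ge 2e\mu }[/math]. A grid check is evidence, not a proof; the binomial special case, its parameters, and the helper names are assumptions made for illustration.

```python
import math

def upper_gap(d: float) -> float:
    """ln(e^d / (1+d)^(1+d)) + d^2/3; form (1) of the upper tail needs this <= 0 on (0, 1]."""
    return d - (1 + d) * math.log1p(d) + d * d / 3

def lower_gap(d: float) -> float:
    """ln(e^(-d) / (1-d)^(1-d)) + d^2/2; form (1) of the lower tail needs this <= 0 on (0, 1)."""
    return -d - (1 - d) * math.log1p(-d) + d * d / 2

def binom_upper_tail(n: int, p: float, t: int) -> float:
    """Exact Pr[X >= t] for X ~ Binomial(n, p), summed term by term (no 1 - cdf cancellation)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(max(t, 0), n + 1))

grid = [k / 10_000 for k in range(1, 10_001)]  # 0.0001, 0.0002, ..., 1.0
print("max of upper_gap on (0, 1]:", max(upper_gap(d) for d in grid))
print("max of lower_gap on (0, 1):", max(lower_gap(d) for d in grid[:-1]))  # skip d = 1 (log of 0)

# Form (2) for a binomial with mu = n * p = 2: compare Pr[X >= t] with 2^(-t) for t >= 2e*mu.
n, p = 200, 0.01
mu = n * p
for t in range(math.ceil(2 * math.e * mu), 21):
    print(t, binom_upper_tail(n, p, t), 2.0 ** (-t))
```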

Balls into bins, revisited

Suppose we throw [math]\displaystyle{ m }[/math] balls uniformly and independently into [math]\displaystyle{ n }[/math] bins. What is the maximum load among all bins, with high probability? In the last class, we analyzed this problem with a counting argument.

Now we give a more "advanced" analysis by using Chernoff bounds.

For any [math]\displaystyle{ i\in[n] }[/math] and [math]\displaystyle{ j\in[m] }[/math], let [math]\displaystyle{ X_{ij} }[/math] be the indicator variable for the event that ball [math]\displaystyle{ j }[/math] is thrown to bin [math]\displaystyle{ i }[/math]. Obviously

- [math]\displaystyle{ \mathbf{E}[X_{ij}]=\Pr[\mbox{ball }j\mbox{ is thrown to bin }i]=\frac{1}{n} }[/math]

Let [math]\displaystyle{ Y_i=\sum_{j\in[m]}X_{ij} }[/math] be the load of bin [math]\displaystyle{ i }[/math].

Then the expected load of bin [math]\displaystyle{ i }[/math] is

[math]\displaystyle{ (*)\qquad \mu=\mathbf{E}[Y_i]=\mathbf{E}\left[\sum_{j\in[m]}X_{ij}\right]=\sum_{j\in[m]}\mathbf{E}[X_{ij}]=m/n. }[/math]

For the case [math]\displaystyle{ m=n }[/math], it holds that [math]\displaystyle{ \mu=1 }[/math].

Note that [math]\displaystyle{ Y_i }[/math] is a sum of [math]\displaystyle{ m }[/math] mutually independent indicator variables. Applying the Chernoff bound, for any particular bin [math]\displaystyle{ i\in[n] }[/math],

- [math]\displaystyle{ \Pr[Y_i\gt (1+\delta)\mu] \le \left(\frac{e^{\delta}}{(1+\delta)^{1+\delta}}\right)^\mu. }[/math]
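Since the load [math]\displaystyle{ Y_i }[/math] is binomial with parameters [math]\displaystyle{ m }[/math] and [math]\displaystyle{ 1/n }[/math], we can compare this single-bin bound with the exact tail probability. The sketch below does so for illustrative sizes [math]\displaystyle{ m=n=1000 }[/math] (so [math]\displaystyle{ \mu=1 }[/math]); the helper names are hypothetical, and the binomial probabilities are computed in log space with `math.lgamma` so that large binomial coefficients do not overflow a float.

```python
import math

def binom_pmf(m: int, q: float, i: int) -> float:
    """Pr[Y = i] for Y ~ Binomial(m, q), 0 < q < 1, computed in log space to avoid overflow."""
    log_coeff = math.lgamma(m + 1) - math.lgamma(i + 1) - math.lgamma(m - i + 1)
    return math.exp(log_coeff + i * math.log(q) + (m - i) * math.log1p(-q))

def tail_gt(m: int, q: float, k: int) -> float:
    """Exact Pr[Y > k] for Y ~ Binomial(m, q), with k a nonnegative integer."""
    return sum(binom_pmf(m, q, i) for i in range(k + 1, m + 1))

def chernoff_upper(mu: float, delta: float) -> float:
    """The bound (e^delta / (1 + delta)^(1 + delta))^mu, for delta > 0."""
    return (math.exp(delta) / (1 + delta) ** (1 + delta)) ** mu

m, n = 1000, 1000       # illustrative sizes; the load of one bin is Binomial(m, 1/n)
mu = m / n              # expected load, as in (*)
for k in (2, 4, 6, 8):  # threshold k = (1 + delta) * mu
    delta = k / mu - 1
    print(f"Pr[Y_i > {k}]: exact={tail_gt(m, 1 / n, k):.3e}, bound={chernoff_upper(mu, delta):.3e}")
```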

When [math]\displaystyle{ m=n }[/math]

When [math]\displaystyle{ m=n }[/math], [math]\displaystyle{ \mu=1 }[/math]. Write [math]\displaystyle{ c=1+\delta }[/math]. The above bound can be written as

- [math]\displaystyle{ \Pr[Y_i\gt c] \le \frac{e^{c-1}}{c^c}. }[/math]

Let [math]\displaystyle{ c=\frac{e\ln n}{\ln\ln n} }[/math]. We evaluate [math]\displaystyle{ \frac{e^{c-1}}{c^c} }[/math] by taking the logarithm of its reciprocal:

- [math]\displaystyle{ \begin{align} \ln\left(\frac{c^c}{e^{c-1}}\right) &= c\ln c-c+1\\ &= c(\ln c-1)+1\\ &= \frac{e\ln n}{\ln\ln n}\left(\ln\ln n-\ln\ln\ln n\right)+1\\ &\ge \frac{e\ln n}{\ln\ln n}\cdot\frac{2}{e}\ln\ln n+1\\ &\ge 2\ln n. \end{align} }[/math]

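The fourth step uses [math]\displaystyle{ \ln\ln\ln n\le\left(1-\frac{2}{e}\right)\ln\ln n }[/math], which holds for all sufficiently large [math]\displaystyle{ n }[/math]. Thus, for sufficiently large [math]\displaystyle{ n }[/math],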

- [math]\displaystyle{ \Pr\left[Y_i\gt \frac{e\ln n}{\ln\ln n}\right] \le \frac{1}{n^2}. }[/math]
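The derivation above is asymptotic, and its logarithmic exponent can be evaluated directly. The sketch below computes [math]\displaystyle{ \ln\left(\frac{c^c}{e^{c-1}}\right)-2\ln n }[/math] for [math]\displaystyle{ c=\frac{e\ln n}{\ln\ln n} }[/math], parametrized by [math]\displaystyle{ L=\ln n }[/math] so that astronomically large [math]\displaystyle{ n }[/math] can be handled; a nonnegative value means the bound [math]\displaystyle{ 1/n^2 }[/math] holds for that [math]\displaystyle{ n }[/math]. The function name and the sample values are hypothetical choices for illustration.

```python
import math

def exponent_gap(L: float) -> float:
    """For L = ln n > 1, set c = e*L/ln(L) and return ln(c^c / e^(c-1)) - 2L.

    A nonnegative result means e^(c-1)/c^c <= 1/n^2 for that n.
    """
    c = math.e * L / math.log(L)
    return c * (math.log(c) - 1) + 1 - 2 * L

# Parametrizing by L = ln n lets us reach values of n far beyond floating-point range.
for L in (math.e ** math.e, 100.0, 1000.0, math.e ** 10):
    print(f"ln n = {L:.1f}, ln ln n = {math.log(L):.2f}: gap = {exponent_gap(L):.2f}")
```

In this computation the gap is negative for [math]\displaystyle{ \ln n=e^e }[/math], 100 and 1000, and positive for [math]\displaystyle{ \ln n=e^{10} }[/math], which is consistent with the bound being established only for sufficiently large [math]\displaystyle{ n }[/math].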

Applying the union bound, the probability that there exists a bin with load [math]\displaystyle{ \gt \frac{e\ln n}{\ln\ln n} }[/math] is at most

- [math]\displaystyle{ n\cdot \Pr\left[Y_1\gt \frac{e\ln n}{\ln\ln n}\right] \le \frac{1}{n} }[/math].

Therefore, for [math]\displaystyle{ m=n }[/math], with high probability, the maximum load is at most [math]\displaystyle{ \frac{e\ln n}{\ln\ln n}=O\left(\frac{\ln n}{\ln\ln n}\right) }[/math].
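A single simulation illustrates the case [math]\displaystyle{ m=n }[/math]. The sketch below throws [math]\displaystyle{ n }[/math] balls into [math]\displaystyle{ n }[/math] bins using Python's `random` module and compares the observed maximum load with [math]\displaystyle{ \frac{e\ln n}{\ln\ln n} }[/math]. One seeded run is only an illustration, not evidence for the asymptotic claim; the seed, the value [math]\displaystyle{ n=10000 }[/math], and the helper `max_load` are arbitrary choices.

```python
import math
import random

def max_load(m: int, n: int, rng: random.Random) -> int:
    """Throw m balls into n bins uniformly and independently; return the largest bin load."""
    loads = [0] * n
    for _ in range(m):
        loads[rng.randrange(n)] += 1
    return max(loads)

n = 10_000
rng = random.Random(2010)       # arbitrary fixed seed, only for reproducibility
observed = max_load(n, n, rng)  # the case m = n
threshold = math.e * math.log(n) / math.log(math.log(n))
print(f"n = {n}: observed maximum load {observed}, e ln n / ln ln n = {threshold:.2f}")
```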

For larger [math]\displaystyle{ m }[/math]

When [math]\displaystyle{ m\ge n\ln n }[/math], by [math]\displaystyle{ (*) }[/math] we have [math]\displaystyle{ \mu=\frac{m}{n}\ge \ln n }[/math].

We can therefore apply the simpler form (2) of the Chernoff bound with [math]\displaystyle{ t=2e\mu }[/math]:

- [math]\displaystyle{ \Pr[Y_i\ge 2e\mu]\le 2^{-2e\mu}\le 2^{-2e\ln n}\lt \frac{1}{n^2}. }[/math]

By the union bound, the probability that there exists a bin with load [math]\displaystyle{ \ge 2e\frac{m}{n} }[/math] is at most

- [math]\displaystyle{ n\cdot \Pr\left[Y_1\ge 2e\frac{m}{n}\right] = n\cdot \Pr\left[Y_1\ge 2e\mu\right]\le \frac{1}{n} }[/math].

Therefore, for [math]\displaystyle{ m\ge n\ln n }[/math], with high probability, the maximum load is [math]\displaystyle{ O\left(\frac{m}{n}\right) }[/math].
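The same kind of simulation illustrates the regime [math]\displaystyle{ m\ge n\ln n }[/math]. The sketch below uses [math]\displaystyle{ n=1000 }[/math] and [math]\displaystyle{ m=n\lceil\ln n\rceil=7000 }[/math] (illustrative values), compares the observed maximum load with [math]\displaystyle{ 2e\frac{m}{n} }[/math], and checks the constant behind the step [math]\displaystyle{ 2^{-2e\ln n}\lt \frac{1}{n^2} }[/math]. The seed and the helper `max_load` are arbitrary choices, and one run is only an illustration.

```python
import math
import random

def max_load(m: int, n: int, rng: random.Random) -> int:
    """Throw m balls into n bins uniformly and independently; return the largest bin load."""
    loads = [0] * n
    for _ in range(m):
        loads[rng.randrange(n)] += 1
    return max(loads)

n = 1000
m = n * math.ceil(math.log(n))  # m = 7000 >= n ln n, so mu = m / n >= ln n
mu = m / n
rng = random.Random(2010)       # arbitrary fixed seed, only for reproducibility
observed = max_load(m, n, rng)
print(f"m = {m}, n = {n}: observed maximum load {observed}, 2e*m/n = {2 * math.e * mu:.2f}")

# 2^(-2e ln n) = n^(-2e ln 2), and 2e ln 2 > 2, so 2^(-2e ln n) < 1/n^2.
print("2e ln 2 =", 2 * math.e * math.log(2))
```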