Advanced Algorithms (Fall 2019)/Concentration of measure

Chernoff Bound

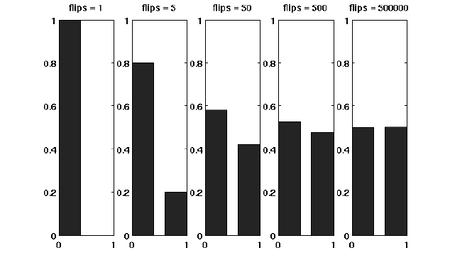

Suppose that we have a fair coin. If we toss it once, the outcome is completely unpredictable. But if we toss it many times, say 1000 times, then the number of HEADs is very likely to be around 500. This phenomenon, illustrated in the figure above, is called the concentration of measure. The Chernoff bound is an inequality that characterizes the concentration phenomenon for sums of independent trials.

Before formally stating the Chernoff bound, let's introduce the moment generating function.

Moment generating functions

The more we know about the moments of a random variable [math]\displaystyle{ X }[/math], the more information we would have about [math]\displaystyle{ X }[/math]. There is a so-called moment generating function, which "packs" all the information about the moments of [math]\displaystyle{ X }[/math] into one function.

Definition - The moment generating function of a random variable [math]\displaystyle{ X }[/math] is defined as [math]\displaystyle{ \mathbf{E}\left[\mathrm{e}^{\lambda X}\right] }[/math] where [math]\displaystyle{ \lambda }[/math] is the parameter of the function.

By Taylor's expansion and the linearity of expectations,

- [math]\displaystyle{ \begin{align} \mathbf{E}\left[\mathrm{e}^{\lambda X}\right] &= \mathbf{E}\left[\sum_{k=0}^\infty\frac{\lambda^k}{k!}X^k\right]\\ &=\sum_{k=0}^\infty\frac{\lambda^k}{k!}\mathbf{E}\left[X^k\right] \end{align} }[/math]

The moment generating function [math]\displaystyle{ \mathbf{E}\left[\mathrm{e}^{\lambda X}\right] }[/math] is a function of [math]\displaystyle{ \lambda }[/math].
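As a small illustration of the expansion above, the following sketch compares the closed-form moment generating function of a single Poisson trial with the truncated moment series. The helper names `mgf_bernoulli` and `mgf_from_moments`, the truncation at 30 terms, and the values of `p` and `lam` are assumptions made for this example only. It uses the fact that an indicator variable with success probability `p` satisfies E[X^k] = p for every k >= 1.

```python
import math

def mgf_bernoulli(p, lam):
    """Closed form of E[e^{lam X}] for an indicator X with Pr[X = 1] = p."""
    return p * math.exp(lam) + (1 - p)

def mgf_from_moments(p, lam, terms=30):
    """Truncated series sum_k lam^k / k! * E[X^k].

    For an indicator variable, E[X^0] = 1 and E[X^k] = p for all k >= 1.
    """
    total = 1.0  # the k = 0 term
    for k in range(1, terms):
        total += lam ** k / math.factorial(k) * p
    return total

p, lam = 0.3, 0.7  # arbitrary illustrative values
print(mgf_bernoulli(p, lam), mgf_from_moments(p, lam))
```

The two printed numbers should agree up to floating-point error, since the series is just the Taylor expansion of the closed form.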

The Chernoff bound

The Chernoff bounds are exponentially sharp tail inequalities for the sum of independent trials. The bounds are obtained by applying Markov's inequality to the moment generating function of the sum of independent trials, with some appropriate choice of the parameter [math]\displaystyle{ \lambda }[/math].

Chernoff bound (the upper tail) - Let [math]\displaystyle{ X=\sum_{i=1}^n X_i }[/math], where [math]\displaystyle{ X_1, X_2, \ldots, X_n }[/math] are independent Poisson trials. Let [math]\displaystyle{ \mu=\mathbf{E}[X] }[/math].

- Then for any [math]\displaystyle{ \delta\gt 0 }[/math],

- [math]\displaystyle{ \Pr[X\ge (1+\delta)\mu]\le\left(\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\right)^{\mu}. }[/math]

Proof. For any [math]\displaystyle{ \lambda\gt 0 }[/math], [math]\displaystyle{ X\ge (1+\delta)\mu }[/math] is equivalent to [math]\displaystyle{ e^{\lambda X}\ge e^{\lambda (1+\delta)\mu} }[/math], thus

- [math]\displaystyle{ \begin{align} \Pr[X\ge (1+\delta)\mu] &= \Pr\left[e^{\lambda X}\ge e^{\lambda (1+\delta)\mu}\right]\\ &\le \frac{\mathbf{E}\left[e^{\lambda X}\right]}{e^{\lambda (1+\delta)\mu}}, \end{align} }[/math]

where the last step follows by Markov's inequality.

Computing the moment generating function [math]\displaystyle{ \mathbf{E}[e^{\lambda X}] }[/math]:

- [math]\displaystyle{ \begin{align} \mathbf{E}\left[e^{\lambda X}\right] &= \mathbf{E}\left[e^{\lambda \sum_{i=1}^n X_i}\right]\\ &= \mathbf{E}\left[\prod_{i=1}^n e^{\lambda X_i}\right]\\ &= \prod_{i=1}^n \mathbf{E}\left[e^{\lambda X_i}\right]. & (\mbox{for independent random variables}) \end{align} }[/math]

Let [math]\displaystyle{ p_i=\Pr[X_i=1] }[/math] for [math]\displaystyle{ i=1,2,\ldots,n }[/math]. Then,

- [math]\displaystyle{ \mu=\mathbf{E}[X]=\mathbf{E}\left[\sum_{i=1}^n X_i\right]=\sum_{i=1}^n\mathbf{E}[X_i]=\sum_{i=1}^n p_i }[/math].

We bound the moment generating function for each individual [math]\displaystyle{ X_i }[/math] as follows.

- [math]\displaystyle{ \begin{align} \mathbf{E}\left[e^{\lambda X_i}\right] &= p_i\cdot e^{\lambda\cdot 1}+(1-p_i)\cdot e^{\lambda\cdot 0}\\ &= 1+p_i(e^\lambda -1)\\ &\le e^{p_i(e^\lambda-1)}, \end{align} }[/math]

where the last step uses the inequality [math]\displaystyle{ 1+y\le e^y }[/math], which follows from Taylor's expansion of [math]\displaystyle{ e^y }[/math], with [math]\displaystyle{ y=p_i(e^\lambda-1)\ge 0 }[/math]. (This turns the product over [math]\displaystyle{ i }[/math] into an exponential of the sum of the [math]\displaystyle{ p_i }[/math], which is [math]\displaystyle{ \mu }[/math].)

Therefore,

- [math]\displaystyle{ \begin{align} \mathbf{E}\left[e^{\lambda X}\right] &= \prod_{i=1}^n \mathbf{E}\left[e^{\lambda X_i}\right]\\ &\le \prod_{i=1}^n e^{p_i(e^\lambda-1)}\\ &= \exp\left(\sum_{i=1}^n p_i(e^{\lambda}-1)\right)\\ &= e^{(e^\lambda-1)\mu}. \end{align} }[/math]

Thus, we have shown that for any [math]\displaystyle{ \lambda\gt 0 }[/math],

- [math]\displaystyle{ \begin{align} \Pr[X\ge (1+\delta)\mu] &\le \frac{\mathbf{E}\left[e^{\lambda X}\right]}{e^{\lambda (1+\delta)\mu}}\\ &\le \frac{e^{(e^\lambda-1)\mu}}{e^{\lambda (1+\delta)\mu}}\\ &= \left(\frac{e^{(e^\lambda-1)}}{e^{\lambda (1+\delta)}}\right)^\mu \end{align} }[/math].

For any [math]\displaystyle{ \delta\gt 0 }[/math], we can let [math]\displaystyle{ \lambda=\ln(1+\delta)\gt 0 }[/math] to get

- [math]\displaystyle{ \Pr[X\ge (1+\delta)\mu]\le\left(\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\right)^{\mu}. }[/math]

- [math]\displaystyle{ \square }[/math]

The idea of the proof is quite clear: we apply Markov's inequality to [math]\displaystyle{ e^{\lambda X} }[/math], and for the rest we just estimate the moment generating function [math]\displaystyle{ \mathbf{E}[e^{\lambda X}] }[/math]. To make the bound as tight as possible, we minimize the quantity [math]\displaystyle{ \frac{e^{(e^\lambda-1)}}{e^{\lambda (1+\delta)}} }[/math] by setting [math]\displaystyle{ \lambda=\ln(1+\delta) }[/math], which can be justified by taking derivatives of [math]\displaystyle{ \frac{e^{(e^\lambda-1)}}{e^{\lambda (1+\delta)}} }[/math] with respect to [math]\displaystyle{ \lambda }[/math].
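The derivative computation behind this choice of [math]\displaystyle{ \lambda }[/math] can be sketched as follows; the name [math]\displaystyle{ h }[/math] is introduced here only for illustration. Since the logarithm is monotone, it suffices to minimize the exponent:

- [math]\displaystyle{ \begin{align} h(\lambda) &= \ln\frac{e^{(e^\lambda-1)}}{e^{\lambda (1+\delta)}} = e^\lambda-1-\lambda(1+\delta),\\ h'(\lambda) &= e^\lambda-(1+\delta),\\ h''(\lambda) &= e^\lambda\gt 0. \end{align} }[/math]

So [math]\displaystyle{ h }[/math] is convex and [math]\displaystyle{ h'(\lambda)=0 }[/math] exactly at [math]\displaystyle{ \lambda=\ln(1+\delta) }[/math], where [math]\displaystyle{ h(\lambda)=\delta-(1+\delta)\ln(1+\delta) }[/math]. Exponentiating gives [math]\displaystyle{ \frac{e^{\delta}}{(1+\delta)^{(1+\delta)}} }[/math], the base of the bound in the theorem.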

We then proceed to the lower tail, the probability that the random variable deviates below the mean value:

Chernoff bound (the lower tail) - Let [math]\displaystyle{ X=\sum_{i=1}^n X_i }[/math], where [math]\displaystyle{ X_1, X_2, \ldots, X_n }[/math] are independent Poisson trials. Let [math]\displaystyle{ \mu=\mathbf{E}[X] }[/math].

- Then for any [math]\displaystyle{ 0\lt \delta\lt 1 }[/math],

- [math]\displaystyle{ \Pr[X\le (1-\delta)\mu]\le\left(\frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}}\right)^{\mu}. }[/math]

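Proof. For any [math]\displaystyle{ \lambda\lt 0 }[/math], the event [math]\displaystyle{ X\le (1-\delta)\mu }[/math] is equivalent to [math]\displaystyle{ e^{\lambda X}\ge e^{\lambda (1-\delta)\mu} }[/math] (the inequality flips because [math]\displaystyle{ \lambda }[/math] is negative), so by the same analysis as in the upper tail version,

- [math]\displaystyle{ \begin{align} \Pr[X\le (1-\delta)\mu] &= \Pr\left[e^{\lambda X}\ge e^{\lambda (1-\delta)\mu}\right]\\ &\le \frac{\mathbf{E}\left[e^{\lambda X}\right]}{e^{\lambda (1-\delta)\mu}}\\ &\le \left(\frac{e^{(e^\lambda-1)}}{e^{\lambda (1-\delta)}}\right)^\mu. \end{align} }[/math]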

For any [math]\displaystyle{ 0\lt \delta\lt 1 }[/math], we can let [math]\displaystyle{ \lambda=\ln(1-\delta)\lt 0 }[/math] to get

- [math]\displaystyle{ \Pr[X\le (1-\delta)\mu]\le\left(\frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}}\right)^{\mu}. }[/math]

- [math]\displaystyle{ \square }[/math]
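To get a feeling for the two tail bounds, the following sketch compares them with the exact tail probabilities for 1000 tosses of a fair coin, the example from the introduction. The function names and the choice [math]\displaystyle{ \delta=0.1 }[/math] are assumptions made for this illustration; the exact tails are computed with integer binomial coefficients.

```python
import math

def fair_coin_upper_tail(n, k):
    """Exact Pr[X >= k], where X counts HEADs in n fair coin tosses."""
    return sum(math.comb(n, j) for j in range(k, n + 1)) / 2 ** n

def fair_coin_lower_tail(n, k):
    """Exact Pr[X <= k], where X counts HEADs in n fair coin tosses."""
    return sum(math.comb(n, j) for j in range(0, k + 1)) / 2 ** n

def chernoff_upper(mu, delta):
    """(e^delta / (1+delta)^(1+delta))^mu, evaluated through its logarithm."""
    return math.exp(mu * (delta - (1 + delta) * math.log(1 + delta)))

def chernoff_lower(mu, delta):
    """(e^-delta / (1-delta)^(1-delta))^mu for 0 < delta < 1."""
    return math.exp(mu * (-delta - (1 - delta) * math.log(1 - delta)))

n, delta = 1000, 0.1
mu = n / 2                                   # expected number of HEADs
upper_exact = fair_coin_upper_tail(n, 550)   # Pr[X >= (1 + delta) * mu]
lower_exact = fair_coin_lower_tail(n, 450)   # Pr[X <= (1 - delta) * mu]

print("upper tail:", upper_exact, "bound:", chernoff_upper(mu, delta))
print("lower tail:", lower_exact, "bound:", chernoff_lower(mu, delta))
```

The exact probabilities come out noticeably smaller than the bounds, which is expected: the Chernoff bound is a guarantee that holds for all independent Poisson trials with the same mean, not an estimate tuned to this particular distribution.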

Useful forms of the Chernoff bounds

Some useful special forms of the bounds can be derived directly from the general forms above. They also make it clearer why the bounds are called exponentially sharp.

Useful forms of the Chernoff bound - Let [math]\displaystyle{ X=\sum_{i=1}^n X_i }[/math], where [math]\displaystyle{ X_1, X_2, \ldots, X_n }[/math] are independent Poisson trials. Let [math]\displaystyle{ \mu=\mathbf{E}[X] }[/math]. Then

- 1. for [math]\displaystyle{ 0\lt \delta\le 1 }[/math],

- [math]\displaystyle{ \Pr[X\ge (1+\delta)\mu]\lt \exp\left(-\frac{\mu\delta^2}{3}\right); }[/math]

- [math]\displaystyle{ \Pr[X\le (1-\delta)\mu]\lt \exp\left(-\frac{\mu\delta^2}{2}\right); }[/math]

- 2. for [math]\displaystyle{ t\ge 2e\mu }[/math],

- [math]\displaystyle{ \Pr[X\ge t]\le 2^{-t}. }[/math]

Proof. To obtain the bounds in (1), we need to show that for [math]\displaystyle{ 0\lt \delta\lt 1 }[/math], [math]\displaystyle{ \frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\le e^{-\delta^2/3} }[/math] and [math]\displaystyle{ \frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}}\le e^{-\delta^2/2} }[/math]. We can verify both inequalities by standard analysis techniques. To obtain the bound in (2), let [math]\displaystyle{ t=(1+\delta)\mu }[/math]. Then [math]\displaystyle{ \delta=t/\mu-1\ge 2e-1 }[/math]. Hence,

- [math]\displaystyle{ \begin{align} \Pr[X\ge(1+\delta)\mu] &\le \left(\frac{e^\delta}{(1+\delta)^{(1+\delta)}}\right)^\mu\\ &\le \left(\frac{e}{1+\delta}\right)^{(1+\delta)\mu}\\ &\le \left(\frac{e}{2e}\right)^t\\ &\le 2^{-t} \end{align} }[/math]

- [math]\displaystyle{ \square }[/math]
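The inequalities used in this proof can be sanity-checked numerically before proving them analytically. The sketch below compares logarithms of both sides on a grid of [math]\displaystyle{ \delta }[/math] values; the grids and function names are choices made for this illustration, and a grid check is of course not a proof.

```python
import math

def log_upper_general(delta):
    """ln of e^delta / (1+delta)^(1+delta)."""
    return delta - (1 + delta) * math.log(1 + delta)

def log_lower_general(delta):
    """ln of e^-delta / (1-delta)^(1-delta), for 0 < delta < 1."""
    return -delta - (1 - delta) * math.log(1 - delta)

# Part (1): delta = 0.01, 0.02, ..., 0.99.
grid = [k / 100 for k in range(1, 100)]
upper_ok = all(log_upper_general(d) <= -d * d / 3 for d in grid)
lower_ok = all(log_lower_general(d) <= -d * d / 2 for d in grid)

# Part (2): for delta >= 2e - 1, compare with ln of 2^-(1+delta).
big_grid = [2 * math.e - 1 + k / 10 for k in range(200)]
tail_ok = all(log_upper_general(d) <= -(1 + d) * math.log(2) for d in big_grid)

print(upper_ok, lower_ok, tail_ok)
```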

Applications to balls-into-bins

Throwing [math]\displaystyle{ m }[/math] balls uniformly and independently to [math]\displaystyle{ n }[/math] bins, what is the maximum load of all bins with high probability? In the last class, we gave an analysis of this problem by using a counting argument.

Now we give a more "advanced" analysis by using Chernoff bounds.

For any [math]\displaystyle{ i\in[n] }[/math] and [math]\displaystyle{ j\in[m] }[/math], let [math]\displaystyle{ X_{ij} }[/math] be the indicator variable for the event that ball [math]\displaystyle{ j }[/math] is thrown to bin [math]\displaystyle{ i }[/math]. Obviously

- [math]\displaystyle{ \mathbf{E}[X_{ij}]=\Pr[\mbox{ball }j\mbox{ is thrown to bin }i]=\frac{1}{n} }[/math]

Let [math]\displaystyle{ Y_i=\sum_{j\in[m]}X_{ij} }[/math] be the load of bin [math]\displaystyle{ i }[/math].

Then the expected load of bin [math]\displaystyle{ i }[/math] is

[math]\displaystyle{ (*)\qquad \mu=\mathbf{E}[Y_i]=\mathbf{E}\left[\sum_{j\in[m]}X_{ij}\right]=\sum_{j\in[m]}\mathbf{E}[X_{ij}]=m/n. }[/math]

For the case [math]\displaystyle{ m=n }[/math], it holds that [math]\displaystyle{ \mu=1 }[/math].

Note that [math]\displaystyle{ Y_i }[/math] is a sum of [math]\displaystyle{ m }[/math] mutually independent indicator variables. Applying the Chernoff bound, for any particular bin [math]\displaystyle{ i\in[n] }[/math],

- [math]\displaystyle{ \Pr[Y_i\gt (1+\delta)\mu] \le \left(\frac{e^{\delta}}{(1+\delta)^{1+\delta}}\right)^\mu. }[/math]

The [math]\displaystyle{ m=n }[/math] case

When [math]\displaystyle{ m=n }[/math], [math]\displaystyle{ \mu=1 }[/math]. Write [math]\displaystyle{ c=1+\delta }[/math]. The above bound can be written as

- [math]\displaystyle{ \Pr[Y_i\gt c] \le \frac{e^{c-1}}{c^c}. }[/math]

Let [math]\displaystyle{ c=\frac{e\ln n}{\ln\ln n} }[/math]. We evaluate [math]\displaystyle{ \frac{e^{c-1}}{c^c} }[/math] by taking the logarithm of its reciprocal.

- [math]\displaystyle{ \begin{align} \ln\left(\frac{c^c}{e^{c-1}}\right) &= c\ln c-c+1\\ &= c(\ln c-1)+1\\ &= \frac{e\ln n}{\ln\ln n}\left(\ln\ln n-\ln\ln\ln n\right)+1\\ &\ge \frac{e\ln n}{\ln\ln n}\cdot\frac{2}{e}\ln\ln n+1\\ &\ge 2\ln n. \end{align} }[/math]

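Thus, for all sufficiently large [math]\displaystyle{ n }[/math] (the fourth line above needs [math]\displaystyle{ \ln\ln\ln n\le\left(1-\frac{2}{e}\right)\ln\ln n }[/math]),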

- [math]\displaystyle{ \Pr\left[Y_i\gt \frac{e\ln n}{\ln\ln n}\right] \le \frac{1}{n^2}. }[/math]

Applying the union bound, the probability that there exists a bin with load [math]\displaystyle{ \gt \frac{e\ln n}{\ln\ln n} }[/math] is at most

- [math]\displaystyle{ n\cdot \Pr\left[Y_1\gt \frac{e\ln n}{\ln\ln n}\right] \le \frac{1}{n} }[/math].

Therefore, for [math]\displaystyle{ m=n }[/math], with high probability, the maximum load is [math]\displaystyle{ O\left(\frac{\ln n}{\ln\ln n}\right) }[/math].

The [math]\displaystyle{ m\ge n\ln n }[/math] case

When [math]\displaystyle{ m\ge n\ln n }[/math], according to [math]\displaystyle{ (*) }[/math], [math]\displaystyle{ \mu=\frac{m}{n}\ge \ln n }[/math].

We can therefore apply the simpler form (2) of the Chernoff bound with [math]\displaystyle{ t=2e\mu }[/math]:

- [math]\displaystyle{ \Pr[Y_i\ge 2e\mu]\le 2^{-2e\mu}\le 2^{-2e\ln n}\lt \frac{1}{n^2}. }[/math]

By the union bound, the probability that there exists a bin with load [math]\displaystyle{ \ge 2e\frac{m}{n} }[/math] is at most

- [math]\displaystyle{ n\cdot \Pr\left[Y_1\ge 2e\frac{m}{n}\right] = n\cdot \Pr\left[Y_1\ge 2e\mu\right]\le \frac{1}{n} }[/math].

Therefore, for [math]\displaystyle{ m\ge n\ln n }[/math], with high probability, the maximum load is [math]\displaystyle{ O\left(\frac{m}{n}\right) }[/math].
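The two regimes can also be observed in a quick simulation. The sketch below throws balls into bins with Python's standard pseudo-random generator and compares the observed maximum load with the thresholds from the analysis. The seed, the value of `n`, and the choice `m = n * ceil(ln n)` for the second case are assumptions made for this illustration, and a single random run is only an observation, not evidence for the asymptotic claims.

```python
import math
import random
from collections import Counter

def max_load(m, n, rng):
    """Throw m balls into n bins uniformly and independently.

    Returns the load of the fullest bin.
    """
    loads = Counter(rng.randrange(n) for _ in range(m))
    return max(loads.values())

rng = random.Random(2019)  # fixed seed, chosen arbitrarily, for reproducibility

n = 10_000

# The m = n case: compare with e * ln n / ln ln n.
light = max_load(n, n, rng)
light_threshold = math.e * math.log(n) / math.log(math.log(n))

# A case with m >= n ln n: compare with 2e * m / n.
m = n * math.ceil(math.log(n))
heavy = max_load(m, n, rng)
heavy_threshold = 2 * math.e * m / n

print("m = n:       max load", light, "threshold", light_threshold)
print("m >= n ln n: max load", heavy, "threshold", heavy_threshold)
```

In runs like this the observed maximum load typically stays well below both thresholds, which is consistent with the bounds being upper bounds that hold with high probability rather than tight estimates.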

Conditional Expectations

The conditional expectation of a random variable [math]\displaystyle{ Y }[/math] with respect to an event [math]\displaystyle{ \mathcal{E} }[/math] is defined by

- [math]\displaystyle{ \mathbf{E}[Y\mid \mathcal{E}]=\sum_{y}y\Pr[Y=y\mid\mathcal{E}]. }[/math]

In particular, if the event [math]\displaystyle{ \mathcal{E} }[/math] is [math]\displaystyle{ X=a }[/math], the conditional expectation

- [math]\displaystyle{ \mathbf{E}[Y\mid X=a] }[/math]

defines a function

- [math]\displaystyle{ f(a)=\mathbf{E}[Y\mid X=a]. }[/math]

Thus, [math]\displaystyle{ \mathbf{E}[Y\mid X] }[/math] can be regarded as a random variable [math]\displaystyle{ f(X) }[/math].

- Example

- Suppose that we uniformly sample a human from all human beings. Let [math]\displaystyle{ Y }[/math] be his/her height, and let [math]\displaystyle{ X }[/math] be the country where he/she is from. For any country [math]\displaystyle{ a }[/math], [math]\displaystyle{ \mathbf{E}[Y\mid X=a] }[/math] gives the average height of that country. And [math]\displaystyle{ \mathbf{E}[Y\mid X] }[/math] is the random variable which can be defined in either of the following two ways:

- We choose a human uniformly at random from all human beings, and [math]\displaystyle{ \mathbf{E}[Y\mid X] }[/math] is the average height of the country where he/she comes from.

- We choose a country at random with a probability proportional to its population, and [math]\displaystyle{ \mathbf{E}[Y\mid X] }[/math] is the average height of the chosen country.

The following proposition states some fundamental facts about conditional expectation.

Proposition (fundamental facts about conditional expectation) - Let [math]\displaystyle{ X,Y }[/math] and [math]\displaystyle{ Z }[/math] be arbitrary random variables. Let [math]\displaystyle{ f }[/math] and [math]\displaystyle{ g }[/math] be arbitrary functions. Then

- [math]\displaystyle{ \mathbf{E}[X]=\mathbf{E}[\mathbf{E}[X\mid Y]] }[/math].

- [math]\displaystyle{ \mathbf{E}[X\mid Z]=\mathbf{E}[\mathbf{E}[X\mid Y,Z]\mid Z] }[/math].

- [math]\displaystyle{ \mathbf{E}[\mathbf{E}[f(X)g(X,Y)\mid X]]=\mathbf{E}[f(X)\cdot \mathbf{E}[g(X,Y)\mid X]] }[/math].

The proposition can be formally verified by computing these expectations. Although the equations look formal, their intuitive interpretations are clear.

The first equation:

- [math]\displaystyle{ \mathbf{E}[X]=\mathbf{E}[\mathbf{E}[X\mid Y]] }[/math]

says that there are two ways to compute an average. Suppose again that [math]\displaystyle{ X }[/math] is the height of a uniform random human and [math]\displaystyle{ Y }[/math] is the country where he/she is from. There are two ways to compute the average human height: one is to directly average over the heights of all humans; the other is to first compute the average height for each country, and then average over these heights weighted by the populations of the countries.
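The two ways of averaging can be checked on a toy population. The data below are made up for this illustration (two hypothetical countries "A" and "B"), and exact rational arithmetic is used so that the two results can be compared for equality.

```python
from fractions import Fraction

# Hypothetical toy population: (country, height in cm) for each individual.
people = [("A", 170), ("A", 180), ("A", 175), ("B", 160), ("B", 164)]

def mean(values):
    """Exact average of a non-empty collection of rational values."""
    values = list(values)
    return Fraction(sum(values), len(values))

# Way 1: average directly over all individuals, i.e. E[X].
direct = mean(h for _, h in people)

# Way 2: first compute f(a) = E[X | Y = a] for each country a, then
# average f(Y) over individuals, which weights each country by its population.
countries = {c for c, _ in people}
f = {a: mean(h for c, h in people if c == a) for a in countries}
two_step = mean(f[c] for c, _ in people)

# Averaging the country means WITHOUT population weights is a different quantity.
unweighted = mean(f.values())

print(direct, two_step, unweighted)
```

Here `direct` and `two_step` coincide, while `unweighted` differs because country "A" has more individuals than country "B".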

The second equation:

- [math]\displaystyle{ \mathbf{E}[X\mid Z]=\mathbf{E}[\mathbf{E}[X\mid Y,Z]\mid Z] }[/math]

is the same as the first one, restricted to a particular subspace. As in the previous example, in addition to the height [math]\displaystyle{ X }[/math] and the country [math]\displaystyle{ Y }[/math], let [math]\displaystyle{ Z }[/math] be the gender of the individual. Thus, [math]\displaystyle{ \mathbf{E}[X\mid Z] }[/math] is the average height of a human being of a given gender. Again, this can be computed either directly or on a country-by-country basis.

The third equation:

- [math]\displaystyle{ \mathbf{E}[\mathbf{E}[f(X)g(X,Y)\mid X]]=\mathbf{E}[f(X)\cdot \mathbf{E}[g(X,Y)\mid X]] }[/math]

looks obscure at first glance, especially considering that [math]\displaystyle{ X }[/math] and [math]\displaystyle{ Y }[/math] are not necessarily independent. Nevertheless, the equation follows from the simple fact that, conditioning on any [math]\displaystyle{ X=a }[/math], the function value [math]\displaystyle{ f(X)=f(a) }[/math] becomes a constant, and thus can be safely taken outside the expectation due to the linearity of expectation. For any value [math]\displaystyle{ X=a }[/math],

- [math]\displaystyle{ \mathbf{E}[f(X)g(X,Y)\mid X=a]=\mathbf{E}[f(a)g(X,Y)\mid X=a]=f(a)\cdot \mathbf{E}[g(X,Y)\mid X=a]. }[/math]
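The third equation can be checked on a small joint distribution in which [math]\displaystyle{ X }[/math] and [math]\displaystyle{ Y }[/math] are dependent. The distribution and the functions `f` and `g` below are arbitrary choices made for this illustration; exact fractions are used so that both sides can be compared for equality.

```python
from fractions import Fraction

# Hypothetical joint distribution Pr[X = x, Y = y]; X and Y are dependent here.
joint = {
    (0, 0): Fraction(1, 2), (0, 1): Fraction(1, 8),
    (1, 0): Fraction(1, 8), (1, 1): Fraction(1, 4),
}

def f(x):
    return 3 * x + 1          # arbitrary illustrative function of X

def g(x, y):
    return x + 2 * y + 5      # arbitrary illustrative function of X and Y

def cond_exp(h, a):
    """E[h(X, Y) | X = a] under the joint distribution above."""
    pa = sum(p for (x, _), p in joint.items() if x == a)
    return sum(h(x, y) * p for (x, y), p in joint.items() if x == a) / pa

def expect_over_x(phi):
    """E[phi(X)], computed from the joint distribution."""
    return sum(phi(x) * p for (x, _), p in joint.items())

# Left side: E[ E[f(X) g(X,Y) | X] ].
lhs = expect_over_x(lambda a: cond_exp(lambda x, y: f(x) * g(x, y), a))
# Right side: E[ f(X) * E[g(X,Y) | X] ].
rhs = expect_over_x(lambda a: f(a) * cond_exp(g, a))

print(lhs, rhs)
```

Both sides evaluate to the same fraction even though [math]\displaystyle{ X }[/math] and [math]\displaystyle{ Y }[/math] are not independent, matching the argument that [math]\displaystyle{ f(a) }[/math] is a constant once [math]\displaystyle{ X=a }[/math] is fixed.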

The proposition also holds in the more general case where each of [math]\displaystyle{ X, Y }[/math] and [math]\displaystyle{ Z }[/math] is a sequence of random variables.