Randomized Algorithms (Spring 2013)/Threshold and Concentration

Erdős–Rényi Random Graphs

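Consider a graph $G(V,E)$ which is randomly generated as follows:

- $|V|=n$;

- for every pair $\{u,v\}\in{V\choose 2}$, the edge $uv$ is in $E$ independently with probability $p$.

Such a graph is denoted as $G(n,p)$. This is called the Erdős–Rényi model, or the $G(n,p)$ model, for random graphs.

Informally, the presence of every edge of $G(n,p)$ is determined by an independent coin flip whose probability of HEADs is $p$.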
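The sampling procedure above can be sketched in Python. This is an illustrative sketch, not part of the original notes; the function name `sample_gnp` and the choice to store each edge as a tuple `(u, v)` with `u < v` are assumptions made here.

```python
import itertools
import random


def sample_gnp(n, p, rng=None):
    """Return the edge set of one sample of G(n, p) on vertices 0, ..., n-1.

    Each of the C(n, 2) unordered pairs {u, v} is stored as a tuple (u, v)
    with u < v and is included independently with probability p, i.e. one
    biased coin flip per potential edge.
    """
    rng = rng or random.Random()
    return {
        (u, v)
        for u, v in itertools.combinations(range(n), 2)
        if rng.random() < p  # HEADs with probability p
    }
```

For example, `sample_gnp(100, 0.05, random.Random(42))` returns a graph whose number of edges has expectation ${100\choose 2}\cdot 0.05=247.5$.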

Monotone properties

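A graph property is a predicate on graphs which depends only on the structure of the graph.

Definition - Let $\mathcal{G}_n=2^{{[n]\choose 2}}$, where ${[n]\choose 2}=\{\{u,v\}\mid u,v\in[n],u\neq v\}$, be the set of all graphs on the vertex set $[n]$. A graph property is a boolean function $P:\mathcal{G}_n\rightarrow\{0,1\}$ which is invariant under permutations of the vertices, i.e., $P(G)=P(H)$ whenever $G$ is isomorphic to $H$.

We are interested in monotone properties, i.e., properties for which adding edges never changes a graph from having the property to not having it.

Definition - A graph property $P$ is monotone if for any $G\subseteq H$, both on $n$ vertices, $P(G)=1$ implies $P(H)=1$.

By seeing the property as a function mapping a set of edges to a numerical value in $\{0,1\}$, a monotone property is just a monotonically increasing set function.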

Some examples of monotone graph properties:

- Hamiltonian;

- contains a subgraph isomorphic to some fixed graph $H$;

- non-planar;

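- chromatic number $>k$;

- girth $<\ell$.

From the last two properties, you can see another reason that Erdős's theorem (there exist graphs with both arbitrarily large girth and arbitrarily large chromatic number) is unintuitive: large chromatic number is a monotone property, whereas large girth is the complement of one and can be destroyed by adding edges.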

Some examples of non-monotone graph properties:

- Eulerian;

- contains an induced subgraph isomorphic to some fixed graph $H$.

For all monotone graph properties, we have the following theorem.

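Theorem - Let $P$ be a monotone graph property. Suppose $G_1=G(n,p_1)$, $G_2=G(n,p_2)$, and $0\le p_1\le p_2\le 1$. Then $\Pr[P(G_1)=1]\le\Pr[P(G_2)=1]$.

Although the statement of the theorem looks very natural, it is not straightforward to prove directly, because it is difficult to evaluate the probability that a random graph has a given property. However, the theorem is very easy to prove using coupling, a proof technique in probability theory which compares two unrelated random variables by forcing them to be related.

Proof. For any $\{u,v\}\in{[n]\choose 2}$, let $r_{\{u,v\}}$ be chosen independently and uniformly at random from the continuous interval $[0,1]$. Let $uv\in G_1$ if and only if $r_{\{u,v\}}\in[0,p_1]$, and let $uv\in G_2$ if and only if $r_{\{u,v\}}\in[0,p_2]$.

It is obvious that $G_1\sim G(n,p_1)$ and $G_2\sim G(n,p_2)$. Moreover, for any $\{u,v\}$, $uv\in G_1$ means that $r_{\{u,v\}}\in[0,p_1]\subseteq[0,p_2]$, which implies $uv\in G_2$. Thus $G_1\subseteq G_2$.

Since $P$ is monotone, $P(G_1)=1$ implies $P(G_2)=1$. Therefore $\Pr[P(G_1)=1]\le\Pr[P(G_2)=1]$. $\square$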
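The coupling used in this proof can be simulated directly. The sketch below is a hypothetical helper (the name `coupled_gnp` is chosen here, not taken from the notes): it draws one uniform value per vertex pair and uses it for both graphs, so every sample satisfies $G_1\subseteq G_2$ while each graph individually has the right distribution.

```python
import itertools
import random


def coupled_gnp(n, p1, p2, rng=None):
    """Sample (G1, G2) with G1 ~ G(n, p1), G2 ~ G(n, p2) and G1 a subgraph of G2.

    A single uniform value r for each pair {u, v} drives both graphs:
    uv is in G1 iff r <= p1, and uv is in G2 iff r <= p2.
    """
    if not 0 <= p1 <= p2 <= 1:
        raise ValueError("need 0 <= p1 <= p2 <= 1")
    rng = rng or random.Random()
    g1, g2 = set(), set()
    for u, v in itertools.combinations(range(n), 2):
        r = rng.random()  # shared randomness r_{u,v}, uniform on [0, 1)
        if r <= p1:
            g1.add((u, v))
        if r <= p2:
            g2.add((u, v))
    return g1, g2
```

Because `g1 <= g2` holds for every sample, any monotone property that holds for `g1` also holds for `g2`, which is exactly the pointwise inequality the proof turns into an inequality of probabilities.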

Threshold phenomenon

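One of the most fascinating phenomena of random graphs is that for many natural graph properties, the random graph $G(n,p)$ suddenly changes from almost always not having the property to almost always having the property as $p$ grows within a very small range.

A monotone graph property $P$ is said to have the threshold $p(n)$ if

- when $p\ll p(n)$, $\Pr[P(G(n,p))=1]\to 0$ as $n\to\infty$ (we say that $G(n,p)$ almost always does not have $P$); and

- when $p\gg p(n)$, $\Pr[P(G(n,p))=1]\to 1$ as $n\to\infty$ (we say that $G(n,p)$ almost always has $P$).

Here $p\ll p(n)$ means $p=o(p(n))$, and $p\gg p(n)$ means $p=\omega(p(n))$.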

The classic method for proving the threshold is the so-called second moment method (Chebyshev's inequality).

Threshold for 4-clique

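Theorem - The threshold for a random graph $G(n,p)$ to contain a 4-clique is $p=n^{-2/3}$.

We formulate the problem as follows.

For any 4-subset of vertices $S\in{V\choose 4}$, let $X_S$ be the indicator random variable such that $X_S=1$ if $S$ is a clique, and $X_S=0$ otherwise.

Let $X=\sum_{S\in{V\choose 4}}X_S$ be the number of 4-cliques in $G(n,p)$.

It is sufficient to prove the following lemma.

Lemma - If $p=o(n^{-2/3})$, then $\Pr[X\ge 1]\to 0$ as $n\to\infty$.

- If $p=\omega(n^{-2/3})$, then $\Pr[X\ge 1]\to 1$ as $n\to\infty$.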

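Proof. The first claim is proved by the first moment method (expectation and Markov's inequality) and the second claim is proved by the second moment method (Chebyshev's inequality).

Every 4-clique has 6 edges, thus for any $S\in{V\choose 4}$,
$$\mathbf{E}[X_S]=\Pr[X_S=1]=p^6.$$

By the linearity of expectation,
$$\mathbf{E}[X]=\sum_{S\in{V\choose 4}}\mathbf{E}[X_S]={n\choose 4}p^6.$$

Applying Markov's inequality,
$$\Pr[X\ge 1]\le\mathbf{E}[X]=O(n^4p^6)=o(1),\quad\text{if }p=o(n^{-2/3}).$$

The first claim is proved.

To prove the second claim, it is equivalent to show that $\Pr[X=0]=o(1)$ if $p=\omega(n^{-2/3})$. By Chebyshev's inequality,
$$\Pr[X=0]\le\Pr\left[|X-\mathbf{E}[X]|\ge\mathbf{E}[X]\right]\le\frac{\mathbf{Var}[X]}{(\mathbf{E}[X])^2},$$

where the variance is computed as
$$\mathbf{Var}[X]=\mathbf{Var}\left[\sum_{S\in{V\choose 4}}X_S\right]=\sum_{S\in{V\choose 4}}\mathbf{Var}[X_S]+\sum_{S,T\in{V\choose 4},\,S\neq T}\mathbf{Cov}(X_S,X_T).$$

For any $S\in{V\choose 4}$, since $X_S^2=X_S$ for an indicator, $\mathbf{Var}[X_S]=\mathbf{E}[X_S^2]-\mathbf{E}[X_S]^2\le\mathbf{E}[X_S^2]=\mathbf{E}[X_S]=p^6$. Thus the first term is $\sum_S\mathbf{Var}[X_S]=O(n^4p^6)$.

We now compute the covariances. For any $S,T\in{V\choose 4}$ with $S\neq T$:

- Case 1: $|S\cap T|\le 1$. Then $S$ and $T$ do not share any edge, so $X_S$ and $X_T$ are independent and $\mathbf{Cov}(X_S,X_T)=0$.

- Case 2: $|S\cap T|=2$. Then $S$ and $T$ share exactly one edge. Since $|S\cup T|=6$, there are ${n\choose 6}{6\choose 2,2,2}=O(n^6)$ such pairs, and
$$\mathbf{Cov}(X_S,X_T)=\mathbf{E}[X_SX_T]-\mathbf{E}[X_S]\mathbf{E}[X_T]\le\mathbf{E}[X_SX_T]=\Pr[X_S=1\wedge X_T=1]=p^{11},$$
since there are 11 edges in the union of two 4-cliques that share a common edge. The contribution of these pairs is $O(n^6p^{11})$.

- Case 3: $|S\cap T|=3$. Then $S$ and $T$ share a triangle. Since $|S\cup T|=5$, there are ${n\choose 5}{5\choose 3,1,1}=O(n^5)$ such pairs, and by the same argument
$$\mathbf{Cov}(X_S,X_T)\le\Pr[X_S=1\wedge X_T=1]=p^9,$$
since there are 9 edges in the union of two 4-cliques that share a triangle. The contribution of these pairs is $O(n^5p^9)$.

Putting all these together,
$$\mathbf{Var}[X]=O(n^4p^6+n^6p^{11}+n^5p^9).$$

And
$$\Pr[X=0]\le\frac{\mathbf{Var}[X]}{(\mathbf{E}[X])^2}=O(n^{-4}p^{-6}+n^{-2}p^{-1}+n^{-3}p^{-3}),$$

which is $o(1)$ if $p=\omega(n^{-2/3})$. The second claim is also proved. $\square$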
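To experiment with the 4-clique threshold on small graphs, one can count $X$ by brute force and estimate $\Pr[X\ge 1]$ by Monte Carlo. This is a sketch under stated assumptions: the helper names are hypothetical, edges are given as vertex pairs, and the $O(n^4)$ enumeration of all 4-subsets is only practical for small $n$ (say $n\le 40$).

```python
import itertools
import math
import random


def count_4_cliques(n, edges):
    """Brute-force X: the number of 4-subsets S of {0..n-1} whose 6 pairs are all edges."""
    adj = {frozenset(e) for e in edges}
    return sum(
        all(frozenset(pair) in adj for pair in itertools.combinations(s, 2))
        for s in itertools.combinations(range(n), 4)
    )


def expected_4_cliques(n, p):
    """E[X] = C(n, 4) * p^6, by linearity of expectation."""
    return math.comb(n, 4) * p ** 6


def estimate_pr_has_4_clique(n, p, trials, seed=0):
    """Monte Carlo estimate of Pr[X >= 1] over `trials` samples of G(n, p)."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(trials):
        edges = [(u, v) for u, v in itertools.combinations(range(n), 2)
                 if rng.random() < p]
        if count_4_cliques(n, edges) >= 1:
            hits += 1
    return hits / trials
```

Comparing, for instance, `estimate_pr_has_4_clique(30, 0.3 * 30 ** (-2 / 3), 50)` with `estimate_pr_has_4_clique(30, 3 * 30 ** (-2 / 3), 50)` gives a rough feel for the transition, though at such small $n$ the asymptotic statement is only approximately visible.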

Threshold for balanced subgraphs

The above theorem can be generalized to any "balanced" subgraphs.

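Definition - The density of a graph $G(V,E)$, denoted $\rho(G)$, is defined as $\rho(G)=\frac{|E|}{|V|}$.

- A graph $G$ is balanced if $\rho(H)\le\rho(G)$ for all subgraphs $H$ of $G$.

Cliques are balanced, because a subgraph on $k$ vertices has at most ${k\choose 2}$ edges, and $\frac{{k\choose 2}}{k}=\frac{k-1}{2}\le\frac{n-1}{2}=\frac{{n\choose 2}}{n}$ for any $k\le n$.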
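The definition of balance can be checked by brute force on small graphs. A minimal sketch, with hypothetical helper names: for a fixed vertex subset $U$, the induced subgraph on $U$ has the most edges among subgraphs with vertex set $U$, so it suffices to compare $\rho(G)$ against the densities of induced subgraphs. The running time is exponential in $|V|$.

```python
import itertools
from fractions import Fraction


def density(vertices, edges):
    """rho(G) = |E| / |V| as an exact fraction."""
    return Fraction(len(edges), len(vertices))


def is_balanced(vertices, edges):
    """Return True iff rho(H) <= rho(G) for every subgraph H of G.

    Only induced subgraphs on nonempty vertex subsets are checked, since
    they maximize the density for a fixed vertex set.
    """
    vertices = list(vertices)
    rho_g = density(vertices, edges)
    for k in range(1, len(vertices) + 1):
        for subset in itertools.combinations(vertices, k):
            s = set(subset)
            induced = [e for e in edges if e[0] in s and e[1] in s]
            if density(subset, induced) > rho_g:
                return False
    return True
```

For example, $K_4$ is balanced, while $K_4$ plus a pendant vertex is not: the whole graph has density $7/5$, but its $K_4$ part has density $6/4$.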

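Theorem (Erdős–Rényi 1960) - Let $H$ be a balanced graph with $k$ vertices and $\ell$ edges. The threshold for the property that a random graph $G(n,p)$ contains a (not necessarily induced) subgraph isomorphic to $H$ is $p=n^{-k/\ell}$.

Sketch of proof. For any $S\in{V\choose k}$, let $X_S$ indicate whether $G_S$ (the subgraph of $G$ induced by $S$) contains a subgraph isomorphic to $H$, and let $X=\sum_{S\in{V\choose k}}X_S$. Then $p^{\ell}\le\mathbf{E}[X_S]\le k!\,p^{\ell}$, since there are at most $k!$ ways to place a copy of $H$ on the vertex set $S$.

Note that $k$ does not depend on $n$. Thus, $\mathbf{E}[X]=\sum_{S}\mathbf{E}[X_S]=\Theta(n^kp^{\ell})$.

By Markov's inequality,
$$\Pr[X\ge 1]\le\mathbf{E}[X]=\Theta(n^kp^{\ell})=o(1),\quad\text{when }p\ll n^{-k/\ell}.$$

By Chebyshev's inequality,
$$\Pr[X=0]\le\frac{\mathbf{Var}[X]}{\mathbf{E}[X]^2},\quad\text{where }\mathbf{Var}[X]=\sum_{S}\mathbf{Var}[X_S]+\sum_{S\neq T}\mathbf{Cov}(X_S,X_T).$$

The first term satisfies $\sum_{S}\mathbf{Var}[X_S]\le\sum_{S}\mathbf{E}[X_S^2]=\sum_{S}\mathbf{E}[X_S]=\mathbf{E}[X]=\Theta(n^kp^{\ell})$.

For the covariances, $\mathbf{Cov}(X_S,X_T)\neq 0$ only if $|S\cap T|=i$ for some $2\le i\le k-1$. Note that $|S\cap T|=i$ implies $|S\cup T|=2k-i$, so there are $O(n^{2k-i})$ such pairs. Since $H$ is balanced, a copy of $H$ has at most $\rho(H)\cdot i=i\ell/k$ edges inside $S\cap T$, so the union of a copy of $H$ on $S$ and a copy on $T$ has at least $2\ell-i\ell/k$ edges. Thus $\mathbf{Cov}(X_S,X_T)\le\mathbf{E}[X_SX_T]=O(p^{2\ell-i\ell/k})$, and
$$\sum_{S\neq T}\mathbf{Cov}(X_S,X_T)=\sum_{i=2}^{k-1}O\left(n^{2k-i}p^{2\ell-i\ell/k}\right).$$

Therefore, when $p\gg n^{-k/\ell}$,
$$\Pr[X=0]\le\frac{\mathbf{Var}[X]}{\mathbf{E}[X]^2}\le\frac{\Theta(n^kp^{\ell})+\sum_{i=2}^{k-1}O\left(n^{2k-i}p^{2\ell-i\ell/k}\right)}{\Theta(n^{2k}p^{2\ell})}=\Theta(n^{-k}p^{-\ell})+\sum_{i=2}^{k-1}O\left(\left(np^{\ell/k}\right)^{-i}\right)=o(1),$$
since $p\gg n^{-k/\ell}$ means exactly that $np^{\ell/k}\to\infty$.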

Chernoff Bound

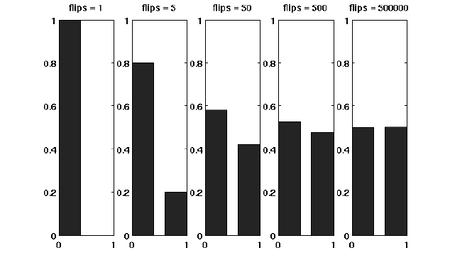

Suppose that we have a fair coin. If we toss it once, then the outcome is completely unpredictable. But if we toss it, say, 1000 times, then the number of HEADs is very likely to be around 500. This striking phenomenon, illustrated in the figure, is called concentration. The Chernoff bound captures the concentration of sums of independent trials.

The Chernoff bound is also a tail bound for the sum of independent random variables which may give us exponentially sharp bounds.
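The coin-tossing example can be made quantitative with an exact computation. This sketch is not from the original notes; the helper name `binomial_two_sided_tail` is hypothetical. It sums binomial coefficients to get the exact probability that the number of HEADs deviates from $n/2$ by at least $k$.

```python
from math import comb


def binomial_two_sided_tail(n, k):
    """Exact Pr[|X - n/2| >= k] for X = number of HEADs in n fair coin tosses."""
    mean = n / 2
    favorable = sum(comb(n, x) for x in range(n + 1) if abs(x - mean) >= k)
    return favorable / 2 ** n
```

With a single toss, a deviation of $1/2$ from the mean happens with probability 1, whereas with 1000 tosses, `binomial_two_sided_tail(1000, 50)` (the probability of landing outside $500\pm 49$) is below 1%.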

Before proving the Chernoff bound, we introduce moment generating functions.

Moment generating functions

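The more we know about the moments of a random variable $X$, the more information we have about its distribution. The moment generating function collects all the moments of $X$ into a single function.

Definition - The moment generating function of a random variable $X$ is defined as $\mathbf{E}\left[e^{\lambda X}\right]$, where $\lambda$ is the parameter of the function.

By Taylor's expansion and the linearity of expectation,
$$\mathbf{E}\left[e^{\lambda X}\right]=\mathbf{E}\left[\sum_{k=0}^{\infty}\frac{\lambda^k}{k!}X^k\right]=\sum_{k=0}^{\infty}\frac{\lambda^k}{k!}\mathbf{E}\left[X^k\right].$$

The moment generating function $\mathbf{E}\left[e^{\lambda X}\right]$ is a function of $\lambda$ whose Taylor coefficients are determined by the moments of $X$: the coefficient of $\lambda^k$ is $\mathbf{E}[X^k]/k!$.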
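The Taylor identity above can be checked numerically for a finitely supported random variable. A minimal sketch, assuming the distribution is given as a dictionary `{value: probability}`; the helper names are hypothetical. One function evaluates $\mathbf{E}[e^{\lambda X}]$ directly, the other sums the truncated series $\sum_{k}\frac{\lambda^k}{k!}\mathbf{E}[X^k]$.

```python
import math


def mgf(dist, lam):
    """E[e^{lam X}] for a finite distribution given as {value: probability}."""
    return sum(prob * math.exp(lam * x) for x, prob in dist.items())


def mgf_from_moments(dist, lam, terms=30):
    """Truncated series: sum over k < terms of lam^k / k! * E[X^k]."""
    total = 0.0
    for k in range(terms):
        moment_k = sum(prob * x ** k for x, prob in dist.items())  # E[X^k]
        total += lam ** k / math.factorial(k) * moment_k
    return total
```

For a Bernoulli variable with $\Pr[X=1]=0.3$, both functions return approximately $0.7+0.3e^{0.5}$ at $\lambda=0.5$.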

The Chernoff bound

The Chernoff bounds are exponentially sharp tail inequalities for the sum of independent trials.

The bounds are obtained by applying Markov's inequality to the moment generating function of the sum of independent trials, with an appropriate choice of the parameter $\lambda$.

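Chernoff bound (the upper tail) - Let $X=\sum_{i=1}^n X_i$, where $X_1, X_2, \ldots, X_n$ are independent Poisson trials (independent $\{0,1\}$-valued random variables, not necessarily identically distributed). Let $\mu=\mathbf{E}[X]$.

- Then for any $\delta>0$,
$$\Pr[X\ge(1+\delta)\mu]\le\left(\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\right)^{\mu}.$$

Proof. For any $\lambda>0$, $X\ge(1+\delta)\mu$ is equivalent to $e^{\lambda X}\ge e^{\lambda(1+\delta)\mu}$, thus
$$\Pr[X\ge(1+\delta)\mu]=\Pr\left[e^{\lambda X}\ge e^{\lambda(1+\delta)\mu}\right]\le\frac{\mathbf{E}\left[e^{\lambda X}\right]}{e^{\lambda(1+\delta)\mu}},$$
where the last step follows by Markov's inequality.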

Computing the moment generating function

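Let $p_i=\Pr[X_i=1]$ for $i=1,2,\ldots,n$. Then $\mu=\mathbf{E}[X]=\sum_{i=1}^n p_i$. By the independence of the $X_i$'s,
$$\mathbf{E}\left[e^{\lambda X}\right]=\mathbf{E}\left[e^{\lambda\sum_{i=1}^n X_i}\right]=\mathbf{E}\left[\prod_{i=1}^n e^{\lambda X_i}\right]=\prod_{i=1}^n\mathbf{E}\left[e^{\lambda X_i}\right].$$

We bound the moment generating function for each individual $X_i$:
$$\mathbf{E}\left[e^{\lambda X_i}\right]=p_i\cdot e^{\lambda\cdot 1}+(1-p_i)\cdot e^{\lambda\cdot 0}=1+p_i(e^{\lambda}-1)\le e^{p_i(e^{\lambda}-1)},$$

where in the last step we apply Taylor's expansion to get $e^y\ge 1+y$ with $y=p_i(e^{\lambda}-1)$; this inequality holds for every real $y$.

Therefore,
$$\mathbf{E}\left[e^{\lambda X}\right]=\prod_{i=1}^n\mathbf{E}\left[e^{\lambda X_i}\right]\le\prod_{i=1}^n e^{p_i(e^{\lambda}-1)}=\exp\left(\sum_{i=1}^n p_i(e^{\lambda}-1)\right)=e^{(e^{\lambda}-1)\mu}.$$

Thus, we have shown that for any $\lambda>0$,
$$\Pr[X\ge(1+\delta)\mu]\le\frac{\mathbf{E}\left[e^{\lambda X}\right]}{e^{\lambda(1+\delta)\mu}}\le\left(\frac{e^{(e^{\lambda}-1)}}{e^{\lambda(1+\delta)}}\right)^{\mu}.$$

For any $\delta>0$, we can let $\lambda=\ln(1+\delta)>0$ to get
$$\Pr[X\ge(1+\delta)\mu]\le\left(\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\right)^{\mu}.\qquad\square$$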

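The idea of the proof is actually quite clear: we apply Markov's inequality to $e^{\lambda X}$, and for the rest we just estimate the moment generating function $\mathbf{E}\left[e^{\lambda X}\right]$. To make the bound as tight as possible, we minimize $\frac{e^{(e^{\lambda}-1)}}{e^{\lambda(1+\delta)}}$ by setting $\lambda=\ln(1+\delta)$. This can be justified by taking derivatives: the logarithm $e^{\lambda}-1-\lambda(1+\delta)$ is convex in $\lambda$, and its derivative $e^{\lambda}-(1+\delta)$ vanishes exactly at $\lambda=\ln(1+\delta)$.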
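The choice of $\lambda$ and the validity of the bound can be checked numerically. This is a sketch with hypothetical helper names: a grid search over $\lambda$ should land near $\ln(1+\delta)$, and the resulting bound should dominate the exact tail of a binomial variable (a sum of i.i.d. Poisson trials, the simplest special case of the theorem).

```python
import math


def log_factor(lam, delta):
    """ln of e^(e^lam - 1) / e^(lam * (1 + delta)): the per-unit-of-mu factor in log scale."""
    return (math.exp(lam) - 1) - lam * (1 + delta)


def best_lambda_on_grid(delta, lo=1e-4, hi=5.0, steps=50_000):
    """Grid search for the lambda in [lo, hi] that minimizes log_factor."""
    grid = (lo + (hi - lo) * j / steps for j in range(steps + 1))
    return min(grid, key=lambda lam: log_factor(lam, delta))


def chernoff_upper_bound(mu, delta):
    """(e^delta / (1 + delta)^(1 + delta))^mu, i.e. the bound at lambda = ln(1 + delta)."""
    return math.exp(mu * (delta - (1 + delta) * math.log1p(delta)))


def binomial_upper_tail(n, p, t):
    """Exact Pr[X >= t] for X ~ Binomial(n, p)."""
    return sum(math.comb(n, x) * p ** x * (1 - p) ** (n - x)
               for x in range(math.ceil(t), n + 1))
```

For example, with $n=100$, $p=0.1$ (so $\mu=10$) and $\delta=0.5$, `binomial_upper_tail(100, 0.1, 15)` is at most `chernoff_upper_bound(10, 0.5)`.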

We then proceed to the lower tail, the probability that the random variable deviates below the mean value:

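Chernoff bound (the lower tail) - Let $X=\sum_{i=1}^n X_i$, where $X_1, X_2, \ldots, X_n$ are independent Poisson trials. Let $\mu=\mathbf{E}[X]$.

- Then for any $0<\delta<1$,
$$\Pr[X\le(1-\delta)\mu]\le\left(\frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}}\right)^{\mu}.$$

Proof. For any $\lambda<0$, $X\le(1-\delta)\mu$ is equivalent to $e^{\lambda X}\ge e^{\lambda(1-\delta)\mu}$. By the same analysis as in the upper tail version (the estimate $\mathbf{E}\left[e^{\lambda X}\right]\le e^{(e^{\lambda}-1)\mu}$ holds for every real $\lambda$),
$$\Pr[X\le(1-\delta)\mu]=\Pr\left[e^{\lambda X}\ge e^{\lambda(1-\delta)\mu}\right]\le\frac{\mathbf{E}\left[e^{\lambda X}\right]}{e^{\lambda(1-\delta)\mu}}\le\left(\frac{e^{(e^{\lambda}-1)}}{e^{\lambda(1-\delta)}}\right)^{\mu}.$$

For any $0<\delta<1$, we can let $\lambda=\ln(1-\delta)<0$ to get
$$\Pr[X\le(1-\delta)\mu]\le\left(\frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}}\right)^{\mu}.\qquad\square$$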
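As with the upper tail, the lower-tail bound can be compared with an exact binomial tail. A minimal sketch with hypothetical helper names, assuming i.i.d. trials so that $X\sim\mathrm{Binomial}(n,p)$:

```python
import math


def binomial_lower_tail(n, p, t):
    """Exact Pr[X <= t] for X ~ Binomial(n, p)."""
    return sum(math.comb(n, x) * p ** x * (1 - p) ** (n - x)
               for x in range(0, math.floor(t) + 1))


def chernoff_lower_bound(mu, delta):
    """(e^{-delta} / (1 - delta)^(1 - delta))^mu, valid for 0 < delta < 1."""
    return math.exp(mu * (-delta - (1 - delta) * math.log1p(-delta)))
```

For example, with $n=200$, $p=0.25$ (so $\mu=50$) and $\delta=0.3$, the exact probability `binomial_lower_tail(200, 0.25, 35)` is at most `chernoff_lower_bound(50, 0.3)`.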

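Some useful special forms of the bounds can be derived directly from the general forms above. These forms also make it clearer why we say that the bounds are exponentially sharp.

Useful forms of the Chernoff bound - Let $X=\sum_{i=1}^n X_i$, where $X_1, X_2, \ldots, X_n$ are independent Poisson trials. Let $\mu=\mathbf{E}[X]$. Then

- 1. for $0<\delta<1$,
$$\Pr[X\ge(1+\delta)\mu]<\exp\left(-\frac{\mu\delta^2}{3}\right);\qquad\Pr[X\le(1-\delta)\mu]<\exp\left(-\frac{\mu\delta^2}{2}\right);$$

- 2. for $t\ge 2e\mu$,
$$\Pr[X\ge t]\le 2^{-t}.$$

Proof. To obtain the bounds in (1), we need to show that for $0<\delta<1$,
$$\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\le e^{-\delta^2/3}\quad\text{and}\quad\frac{e^{-\delta}}{(1-\delta)^{(1-\delta)}}\le e^{-\delta^2/2}.$$
Both inequalities can be verified by standard calculus: after taking logarithms, $f(\delta)=\delta-(1+\delta)\ln(1+\delta)+\frac{\delta^2}{3}$ and $g(\delta)=-\delta-(1-\delta)\ln(1-\delta)+\frac{\delta^2}{2}$ both vanish at $\delta=0$ and are decreasing on $(0,1)$.

To obtain the bound in (2), let $t=(1+\delta)\mu$. Then $\delta=t/\mu-1\ge 2e-1$. Hence,
$$\Pr[X\ge t]=\Pr[X\ge(1+\delta)\mu]\le\left(\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\right)^{\mu}\le\left(\frac{e}{1+\delta}\right)^{(1+\delta)\mu}\le\left(\frac{e}{2e}\right)^{t}=2^{-t}.\qquad\square$$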
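The calculus claims in part (1) can also be sanity-checked on a grid. This is a numerical illustration, not a proof; the helper names are hypothetical. Each function returns the per-unit-of-$\mu$ exponent of a general bound, computed with `math.log1p` for accuracy near $\delta=0$.

```python
import math


def upper_general(delta):
    """ln of e^delta / (1 + delta)^(1 + delta)."""
    return delta - (1 + delta) * math.log1p(delta)


def lower_general(delta):
    """ln of e^{-delta} / (1 - delta)^(1 - delta)."""
    return -delta - (1 - delta) * math.log1p(-delta)


def check_simple_forms(steps=10_000):
    """Return True if, at every grid point 0 < delta < 1, the general exponents
    are at most the simplified ones: -delta^2/3 (upper) and -delta^2/2 (lower)."""
    for j in range(1, steps):
        d = j / steps
        if upper_general(d) > -d * d / 3 or lower_general(d) > -d * d / 2:
            return False
    return True
```

Near $\delta=0$ the upper exponent behaves like $-\delta^2/2$, which is comfortably below $-\delta^2/3$; the constant $3$ is what makes the inequality survive up to $\delta=1$.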

Balls into bins, revisited

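Throwing $m$ balls uniformly and independently into $n$ bins, what is the maximum load over all bins, with high probability?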

Now we give a more "advanced" analysis by using Chernoff bounds.

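For any $i\in[n]$ and $j\in[m]$, let $X_{ij}$ be the indicator variable for the event that ball $j$ is thrown into bin $i$. Obviously,
$$\mathbf{E}[X_{ij}]=\Pr[\text{ball }j\text{ is thrown into bin }i]=\frac{1}{n}.$$

Let $Y_i=\sum_{j\in[m]}X_{ij}$ be the load of bin $i$.

Then the expected load of bin $i$ is
$$\mu=\mathbf{E}[Y_i]=\mathbf{E}\left[\sum_{j\in[m]}X_{ij}\right]=\sum_{j\in[m]}\mathbf{E}[X_{ij}]=\frac{m}{n}.$$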

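For the case $m=n$, it holds that $\mu=1$.

Note that $Y_i$ is a sum of $m$ mutually independent indicator variables. Applying the Chernoff bound, for any particular bin $i\in[n]$ and any $\delta>0$,
$$\Pr[Y_i\ge(1+\delta)\mu]\le\left(\frac{e^{\delta}}{(1+\delta)^{(1+\delta)}}\right)^{\mu}.$$

With $\mu=1$ and writing $c=1+\delta$, the above bound becomes
$$\Pr[Y_i\ge c]\le\frac{e^{c-1}}{c^c}.$$

Let $c=\frac{e\ln n}{\ln\ln n}$. We evaluate $\frac{e^{c-1}}{c^c}$ by taking the logarithm of its reciprocal:
$$\ln\left(\frac{c^c}{e^{c-1}}\right)=c(\ln c-1)+1=\frac{e\ln n}{\ln\ln n}\left(\ln\ln n-\ln\ln\ln n\right)+1=e\ln n\left(1-\frac{\ln\ln\ln n}{\ln\ln n}\right)+1\ge 2\ln n$$
for all sufficiently large $n$, since $\frac{\ln\ln\ln n}{\ln\ln n}\to 0$ and $e>2$.

Thus,
$$\Pr\left[Y_i\ge\frac{e\ln n}{\ln\ln n}\right]\le\frac{1}{n^2}.$$

Applying the union bound, the probability that there exists a bin with load at least $\frac{e\ln n}{\ln\ln n}$ is at most
$$n\cdot\Pr\left[Y_1\ge\frac{e\ln n}{\ln\ln n}\right]\le\frac{1}{n}.$$

Therefore, for $m=n$, with high probability the maximum load is $O\left(\frac{\ln n}{\ln\ln n}\right)$.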
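The $m=n$ case is easy to simulate. This sketch is an illustration under stated assumptions: the helper names are hypothetical, bins are chosen with Python's `random.Random.randrange`, and the comparison with $\frac{e\ln n}{\ln\ln n}$ is only informative for large $n$, since the analysis is asymptotic.

```python
import math
import random


def max_load(m, n, rng):
    """Throw m balls uniformly and independently into n bins; return the max load."""
    loads = [0] * n
    for _ in range(m):
        loads[rng.randrange(n)] += 1
    return max(loads)


def log_over_loglog(n):
    """The scale e * ln n / ln ln n from the m = n analysis."""
    return math.e * math.log(n) / math.log(math.log(n))
```

For example, one can compare `max_load(10**5, 10**5, random.Random(0))` with `log_over_loglog(10**5)` over several seeds.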

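For larger $m$, for example when $m\ge n\ln n$, we have $\mu=\frac{m}{n}\ge\ln n$.

We can apply an easier form of the Chernoff bound (form 2 of the useful forms, with $t=2e\mu$):
$$\Pr[Y_i\ge 2e\mu]\le 2^{-2e\mu}\le 2^{-2e\ln n}<\frac{1}{n^2}.$$

By the union bound, the probability that there exists a bin with load at least $2e\frac{m}{n}$ is at most
$$n\cdot\Pr\left[Y_1\ge 2e\frac{m}{n}\right]=n\cdot\Pr[Y_1\ge 2e\mu]\le\frac{1}{n}.$$

Therefore, for $m\ge n\ln n$, with high probability the maximum load is $O\left(\frac{m}{n}\right)$.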
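The simple bound used for $m\ge n\ln n$ can be compared with the exact tail of a single bin's load, which is $\mathrm{Binomial}(m,1/n)$. A minimal sketch with hypothetical helper names:

```python
import math


def bin_load_tail(m, q, t):
    """Exact Pr[Y >= t] for Y ~ Binomial(m, q), the load of one bin when q = 1/n."""
    return sum(math.comb(m, x) * q ** x * (1 - q) ** (m - x)
               for x in range(math.ceil(t), m + 1))


def heavy_load_bound(m, n):
    """2^{-2e mu} with mu = m / n: the simple Chernoff bound on Pr[Y_i >= 2e mu]."""
    return 2.0 ** (-2 * math.e * m / n)
```

For example, with $n=20$ and $m=60\ge n\ln n$ (so $\mu=3$), `bin_load_tail(60, 1 / 20, 2 * math.e * 3)` is below `heavy_load_bound(60, 20)`, which in turn is below $1/n^2$, matching the chain of inequalities above.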