Randomized Algorithms (Spring 2014)/Random Variables

Random Variable

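Definition (random variable) - A random variable $X$ on a sample space $\Omega$ is a real-valued function $X:\Omega\to\mathbb{R}$. A random variable $X$ is called a discrete random variable if its range is finite or countably infinite.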

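For a random variable $X$ and a real value $x\in\mathbb{R}$, we write "$X=x$" for the event $\{a\in\Omega\mid X(a)=x\}$, and denote the probability of this event by
$$\Pr[X=x]=\Pr(\{a\in\Omega\mid X(a)=x\}).$$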

The independence can also be defined for variables:

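Definition (Independent variables) - Two random variables $X$ and $Y$ are independent if and only if
$$\Pr[(X=x)\wedge(Y=y)]=\Pr[X=x]\cdot\Pr[Y=y]$$
for all values $x$ and $y$. Random variables $X_1,X_2,\ldots,X_n$ are mutually independent if, for any subset $I\subseteq\{1,2,\ldots,n\}$ and any values $x_i$, where $i\in I$,
$$\Pr\left[\bigwedge_{i\in I}(X_i=x_i)\right]=\prod_{i\in I}\Pr[X_i=x_i].$$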
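To make the definition concrete, here is a small Python check on a toy sample space of our own choosing: two fair six-sided dice, with $X$ the first die, $Y$ the second die, and $Z=X+Y$. The helper names (`prob`, `independent`, and so on) are hypothetical; the check simply tests $\Pr[(X=x)\wedge(Y=y)]=\Pr[X=x]\cdot\Pr[Y=y]$ for every pair of values, using exact rational arithmetic.

```python
from fractions import Fraction
from itertools import product

# Toy sample space: ordered outcomes of two fair dice, each with probability 1/36.
OMEGA = list(product(range(1, 7), repeat=2))
P = Fraction(1, len(OMEGA))

# Random variables are just real-valued functions on the sample space.
def first(a):
    return a[0]

def second(a):
    return a[1]

def total(a):
    return a[0] + a[1]

def prob(event):
    """Pr of the event {a in OMEGA : event(a)}."""
    return sum((P for a in OMEGA if event(a)), Fraction(0))

def independent(X, Y):
    """Check Pr[X=x and Y=y] == Pr[X=x] * Pr[Y=y] for all x, y in the ranges."""
    xs = {X(a) for a in OMEGA}
    ys = {Y(a) for a in OMEGA}
    return all(
        prob(lambda a: X(a) == x and Y(a) == y)
        == prob(lambda a: X(a) == x) * prob(lambda a: Y(a) == y)
        for x in xs
        for y in ys
    )

print(independent(first, second))  # True
print(independent(first, total))   # False
```

The two dice are independent, while the first die and the sum are not (for instance, $\Pr[(X=1)\wedge(Z=12)]=0$ although both events have positive probability).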

Note that in probability theory, "mutual independence" is not equivalent to "pairwise independence", which we will learn about later.

Expectation

Let $X$ be a discrete random variable. The expectation of $X$ is defined as follows.

Definition (Expectation) - The expectation of a discrete random variable $X$, denoted by $\mathbf{E}[X]$, is given by
$$\mathbf{E}[X]=\sum_{x}x\Pr[X=x],$$
where the summation is over all values $x$ in the range of $X$.

Linearity of Expectation

Perhaps the most useful property of expectation is its linearity.

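Theorem (Linearity of Expectations) - For any discrete random variables $X_1,X_2,\ldots,X_n$, and any real constants $a_1,a_2,\ldots,a_n$,
$$\mathbf{E}\left[\sum_{i=1}^n a_iX_i\right]=\sum_{i=1}^n a_i\cdot\mathbf{E}[X_i].$$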

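Proof. By the definition of expectation, it is easy to verify (try to prove it yourself) that for any discrete random variables $X$ and $Y$, and any real constant $c$,
- $\mathbf{E}[X+Y]=\mathbf{E}[X]+\mathbf{E}[Y]$;
- $\mathbf{E}[cX]=c\,\mathbf{E}[X]$.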

The theorem follows by induction.

The linearity of expectation gives an easy way to compute the expectation of a random variable if the variable can be written as a sum.

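- Example

- Suppose that we have a biased coin whose probability of HEADS is $p$. If we flip the coin $n$ times, what is the expected number of HEADS?

- It looks straightforward that it must be $np$, but how can we prove it? Surely we can apply the definition of expectation and compute it by brute force. A more convenient way is by the linearity of expectation: let $X_i$ indicate whether the $i$-th flip is HEADS. Then $\mathbf{E}[X_i]=1\cdot p+0\cdot(1-p)=p$, and the total number of HEADS after $n$ flips is $X=\sum_{i=1}^{n}X_i$. Applying the linearity of expectation, the expected number of HEADS is
$$\mathbf{E}[X]=\mathbf{E}\left[\sum_{i=1}^{n}X_i\right]=\sum_{i=1}^{n}\mathbf{E}[X_i]=np.$$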
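The following Python sketch (an illustration under our own choice of $p=1/3$; the function names are hypothetical) compares the brute-force approach, which sums the number of HEADS times the probability over all $2^n$ outcomes, with the answer $np$ given by linearity of expectation. Grouping outcomes by their number of HEADS turns the brute-force sum into the definition $\sum_x x\Pr[X=x]$.

```python
from fractions import Fraction
from itertools import product

def expected_heads_brute_force(n, p):
    """Sum X(a) * Pr(a) over all 2^n outcomes a, where X(a) counts the HEADS (1s) in a."""
    total = Fraction(0)
    for outcome in product((0, 1), repeat=n):
        heads = sum(outcome)
        total += heads * p**heads * (1 - p) ** (n - heads)
    return total

def expected_heads_linearity(n, p):
    """Each indicator X_i has E[X_i] = p, so E[X] = n * p."""
    return n * p

p = Fraction(1, 3)
for n in range(1, 11):
    assert expected_heads_brute_force(n, p) == expected_heads_linearity(n, p)
print(expected_heads_brute_force(10, p))  # 10/3
```

The brute-force sum has $2^n$ terms, while linearity gives the answer in one line.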

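The real power of the linearity of expectation is that it does not require the random variables to be independent, so it can be applied to any set of random variables. For example:
$$\mathbf{E}\left[\alpha X+\beta X^2+\gamma X^3\right]=\alpha\cdot\mathbf{E}[X]+\beta\cdot\mathbf{E}[X^2]+\gamma\cdot\mathbf{E}[X^3].$$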

However, do not exaggerate this power!

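- For an arbitrary function $f$ (not necessarily linear), the equation $\mathbf{E}[f(X)]=f(\mathbf{E}[X])$ does not hold in general.

- For variances, the equation $\mathbf{Var}[X+Y]=\mathbf{Var}[X]+\mathbf{Var}[Y]$ does not hold without the further assumption that $X$ and $Y$ are independent.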
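A quick numerical sanity check of both warnings, using a toy example of our own: let $X$ be uniform on $\{-1,+1\}$, take $f(x)=x^2$, and let $Y=X$ (so $X$ and $Y$ are as dependent as possible). The helper functions below are hypothetical and represent a distribution as a `{value: probability}` dict.

```python
from collections import defaultdict
from fractions import Fraction

def push_forward(dist, f):
    """Distribution of f(X), given the distribution of X as a {value: probability} dict."""
    out = defaultdict(Fraction)
    for x, q in dist.items():
        out[f(x)] += q          # several x may map to the same f(x)
    return dict(out)

def expectation(dist):
    return sum(x * q for x, q in dist.items())

def variance(dist):
    mu = expectation(dist)
    return sum((x - mu) ** 2 * q for x, q in dist.items())

# X is uniform on {-1, +1}.
X = {-1: Fraction(1, 2), 1: Fraction(1, 2)}

# Nonlinear f(x) = x^2: E[f(X)] = 1 but f(E[X]) = 0.
E_f_X = expectation(push_forward(X, lambda x: x * x))
f_E_X = expectation(X) ** 2

# Dependent Y = X: X + Y = 2X, so Var[X + Y] = 4 while Var[X] + Var[Y] = 2.
var_sum = variance(push_forward(X, lambda x: x + x))
sum_var = 2 * variance(X)

print(E_f_X, f_E_X, var_sum, sum_var)  # 1 0 4 2
```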

Conditional Expectation

Conditional expectation can be defined accordingly:

Definition (conditional expectation) - For random variables $X$ and $Y$,
$$\mathbf{E}[X\mid Y=y]=\sum_{x}x\Pr[X=x\mid Y=y],$$
where the summation is taken over the range of $X$.

There is also a law of total expectation.

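Theorem (law of total expectation) - Let $X$ and $Y$ be two random variables. Then
$$\mathbf{E}[X]=\sum_{y}\mathbf{E}[X\mid Y=y]\cdot\Pr[Y=y],$$
where the summation is taken over the range of $Y$.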
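As an illustration of the law of total expectation, consider a hypothetical two-stage experiment (not from the notes): roll a fair die to get $Y$, then flip $Y$ fair coins and let $X$ be the number of HEADS. Here $\mathbf{E}[X\mid Y=y]=y/2$, so the law gives $\mathbf{E}[X]=\sum_{y=1}^6\frac{y}{2}\cdot\frac16=\frac74$. The sketch below checks this against a direct computation from the joint distribution.

```python
from fractions import Fraction
from math import comb

# Hypothetical two-stage experiment: Y is a fair die roll,
# then X is the number of HEADS in Y fair coin flips.
def pr_y(y):
    return Fraction(1, 6)

def pr_x_given_y(x, y):
    return Fraction(comb(y, x), 2**y)

def cond_expectation(y):
    """E[X | Y = y] = sum_x x Pr[X = x | Y = y]."""
    return sum(x * pr_x_given_y(x, y) for x in range(y + 1))

def total_expectation():
    """E[X] = sum_y E[X | Y = y] Pr[Y = y]."""
    return sum(cond_expectation(y) * pr_y(y) for y in range(1, 7))

def direct_expectation():
    """E[X] from the joint distribution Pr[X=x, Y=y] = Pr[Y=y] Pr[X=x | Y=y]."""
    return sum(
        x * pr_y(y) * pr_x_given_y(x, y) for y in range(1, 7) for x in range(y + 1)
    )

print(total_expectation(), direct_expectation())  # 7/4 7/4
```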

Distributions of Coin Flips

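We introduce several important probability distributions induced by independent coin flips (independent trials): the Bernoulli trial, the geometric distribution, and the binomial distribution.

Bernoulli trial (Bernoulli distribution)

A Bernoulli trial describes the probability distribution of a single (biased) coin flip. Suppose that we flip a (biased) coin where the probability of HEADS is $p$. Let $X$ be the 0-1 random variable that indicates whether the result is HEADS. We say that $X$ follows the Bernoulli distribution with parameter $p$. Formally,
$$\Pr[X=1]=p,\qquad\Pr[X=0]=1-p.$$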

Geometric distribution

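Suppose we flip the same coin repeatedly until HEADS appears, where each coin flip is independent and follows the Bernoulli distribution with parameter $p$. Let $X$ be the random variable denoting the total number of coin flips. Then $X$ has the geometric distribution with parameter $p$. Formally, for $k=1,2,\ldots$,
$$\Pr[X=k]=(1-p)^{k-1}p.$$

For a geometric $X$ with parameter $p$, $\mathbf{E}[X]=\frac{1}{p}$.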
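A small Python sketch, assuming $p=0.2$ and a fixed random seed chosen only for reproducibility, that checks $\mathbf{E}[X]=1/p$ in two ways: by a truncated version of the series $\sum_{k\ge1}k(1-p)^{k-1}p$, and by simulating the flip-until-HEADS experiment directly.

```python
import random

def geometric_sample(p, rng):
    """Flip a p-biased coin until HEADS; return the number of flips."""
    flips = 1
    while rng.random() >= p:   # TAILS, which happens with probability 1 - p
        flips += 1
    return flips

def truncated_mean(p, terms=10_000):
    """Partial sum of k (1-p)^(k-1) p over k = 1..terms; converges to 1/p."""
    return sum(k * (1 - p) ** (k - 1) * p for k in range(1, terms + 1))

rng = random.Random(2014)
p = 0.2
samples = [geometric_sample(p, rng) for _ in range(100_000)]
print(truncated_mean(p))             # very close to 1/p = 5
print(sum(samples) / len(samples))   # empirical mean, also close to 5
```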

Binomial distribution

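Suppose we flip the same (biased) coin $n$ times, where each coin flip is independent and follows the Bernoulli distribution with parameter $p$. Let $X$ be the number of HEADS. Then $X$ has the binomial distribution with parameters $n$ and $p$. Formally, for $k=0,1,\ldots,n$,
$$\Pr[X=k]=\binom{n}{k}p^k(1-p)^{n-k}.$$

We write $X\sim B(n,p)$ for a binomial random variable $X$ with parameters $n$ and $p$.

As we saw above, by applying the linearity of expectation, it is easy to show that $\mathbf{E}[X]=np$ for $X\sim B(n,p)$.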
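To see the binomial distribution arise from independent coin flips, the following sketch (our own illustration, with $n=10$ and $p=3/10$ chosen arbitrarily) computes the distribution of $X_1+\cdots+X_n$ for independent Bernoulli($p$) variables by adding one flip at a time, and checks that it matches $\binom{n}{k}p^k(1-p)^{n-k}$ exactly and has mean $np$.

```python
from fractions import Fraction
from math import comb

def sum_of_bernoullis(n, p):
    """Distribution of X_1 + ... + X_n for independent Bernoulli(p) X_i, one flip at a time."""
    dist = [Fraction(1)]                 # the empty sum is 0 with probability 1
    for _ in range(n):
        new = [Fraction(0)] * (len(dist) + 1)
        for k, q in enumerate(dist):
            new[k] += q * (1 - p)       # this flip is TAILS
            new[k + 1] += q * p         # this flip is HEADS
        dist = new
    return dist

def binomial_pmf(n, p, k):
    """Pr[X = k] for X ~ B(n, p)."""
    return comb(n, k) * p**k * (1 - p) ** (n - k)

n, p = 10, Fraction(3, 10)
dist = sum_of_bernoullis(n, p)
assert all(dist[k] == binomial_pmf(n, p, k) for k in range(n + 1))
print(sum(k * q for k, q in enumerate(dist)))  # 3, which equals n*p
```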

Balls into Bins

Consider throwing $m$ balls into $n$ bins uniformly and independently at random. This is equivalent to a uniformly random mapping $f:[m]\to[n]$.

We are concerned with the following three questions regarding the balls into bins model:

- birthday problem: the probability that every bin contains at most one ball (the mapping is one-to-one);

- coupon collector problem: the probability that every bin contains at least one ball (the mapping is onto);

- occupancy problem: the maximum load of bins.

Birthday Problem

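There are $m$ students in the class. Assume that each student's birthday is uniformly and independently distributed over the 365 days of a year. We want to know the probability that no two students share a birthday.

Due to the pigeonhole principle, it is obvious that for $m>365$ there must be two students with the same birthday. Surprisingly, already for $m\ge 57$ the probability that two students share a birthday exceeds 99%.

We can model this problem as a balls-into-bins problem: $m$ different balls (students) are uniformly and independently thrown into 365 bins (days). More generally, let $n$ be the number of bins. We ask for the probability of the following event $\mathcal{E}$: there is no bin with more than one ball (i.e. no two students share a birthday).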

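We first analyze this by counting. There are $n^m$ ways in total of assigning $m$ balls to $n$ bins. The number of assignments in which no two balls share a bin is $\binom{n}{m}m!$.

Thus the probability is given by:
$$\Pr[\mathcal{E}]=\frac{\binom{n}{m}m!}{n^m}.$$

Recall that $\binom{n}{m}=\frac{n!}{(n-m)!\,m!}$. Then
$$\Pr[\mathcal{E}]=\frac{\binom{n}{m}m!}{n^m}=\frac{n!}{n^m(n-m)!}=\frac{n}{n}\cdot\frac{n-1}{n}\cdot\frac{n-2}{n}\cdots\frac{n-(m-1)}{n}=\prod_{k=1}^{m-1}\left(1-\frac{k}{n}\right).$$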

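There is also a more "probabilistic" argument for the above equation. To make it rigorous, we need the following theorem, which holds in general and is very useful for computing the probability of the AND of many events.

By the definition of conditional probability, $\Pr[A\mid B]=\frac{\Pr[A\wedge B]}{\Pr[B]}$, so $\Pr[A\wedge B]=\Pr[B]\Pr[A\mid B]$. This suggests computing the probability of the AND of events through conditional probabilities. Formally:

Theorem - Let $\mathcal{E}_1,\mathcal{E}_2,\ldots,\mathcal{E}_n$ be any $n$ events. Then
$$\Pr\left[\bigwedge_{i=1}^n\mathcal{E}_i\right]=\prod_{k=1}^n\Pr\left[\mathcal{E}_k\;\middle|\;\bigwedge_{i<k}\mathcal{E}_i\right].$$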

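Proof: It holds that $\Pr[A\wedge B]=\Pr[B]\Pr[A\mid B]$. Thus, letting $A=\mathcal{E}_n$ and $B=\mathcal{E}_1\wedge\mathcal{E}_2\wedge\cdots\wedge\mathcal{E}_{n-1}$,
$$\Pr[\mathcal{E}_1\wedge\mathcal{E}_2\wedge\cdots\wedge\mathcal{E}_n]=\Pr[\mathcal{E}_1\wedge\mathcal{E}_2\wedge\cdots\wedge\mathcal{E}_{n-1}]\cdot\Pr\left[\mathcal{E}_n\;\middle|\;\bigwedge_{i<n}\mathcal{E}_i\right].$$

Recursively applying this equation to $\Pr[\mathcal{E}_1\wedge\cdots\wedge\mathcal{E}_{n-1}]$ until only $\mathcal{E}_1$ is left proves the theorem. $\square$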


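Now we return to the probabilistic analysis of the birthday problem, in the general setting of $m$ students and $n$ possible birthdays.

The first student has a birthday (of course!). The probability that the second student has a different birthday is $\left(1-\frac{1}{n}\right)$. Given that the first two students have different birthdays, the probability that the third student's birthday differs from both is $\left(1-\frac{2}{n}\right)$. Continuing in this way, assuming that the first $k-1$ students all have different birthdays, the probability that the $k$-th student's birthday differs from all of theirs is $\left(1-\frac{k-1}{n}\right)$. By the theorem above, the probability that all $m$ students have different birthdays is the product of these conditional probabilities:
$$\Pr[\mathcal{E}]=\left(1-\frac{1}{n}\right)\left(1-\frac{2}{n}\right)\cdots\left(1-\frac{m-1}{n}\right)=\prod_{k=1}^{m-1}\left(1-\frac{k}{n}\right),$$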

which is the same as what we got by the counting argument.
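Both arguments can be checked against each other with exact rational arithmetic. The sketch below is illustrative (the function names are hypothetical): it evaluates $\binom{n}{m}m!/n^m$ and the product of conditional probabilities $\prod_{k=1}^{m-1}(1-k/n)$ for $n=365$. For $m=23$ students, the probability that some two share a birthday is already about $0.507$.

```python
from fractions import Fraction
from math import comb, factorial

def pr_distinct_counting(m, n):
    """C(n, m) * m! / n^m: injective assignments over all assignments."""
    return Fraction(comb(n, m) * factorial(m), n**m)

def pr_distinct_chain_rule(m, n):
    """Product of the conditional probabilities (1 - k/n) for k = 1, ..., m-1."""
    pr = Fraction(1)
    for k in range(1, m):
        pr *= 1 - Fraction(k, n)
    return pr

for m in range(1, 40):
    assert pr_distinct_counting(m, 365) == pr_distinct_chain_rule(m, 365)
print(float(1 - pr_distinct_counting(23, 365)))  # about 0.507
```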

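There are several ways to analyze this formula. Here is a convenient one: by Taylor's expansion, $e^{-k/n}\approx 1-k/n$. Then
$$\prod_{k=1}^{m-1}\left(1-\frac{k}{n}\right)\approx\prod_{k=1}^{m-1}e^{-\frac{k}{n}}=\exp\left(-\sum_{k=1}^{m-1}\frac{k}{n}\right)=e^{-\frac{m(m-1)}{2n}}\approx e^{-\frac{m^2}{2n}}.$$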

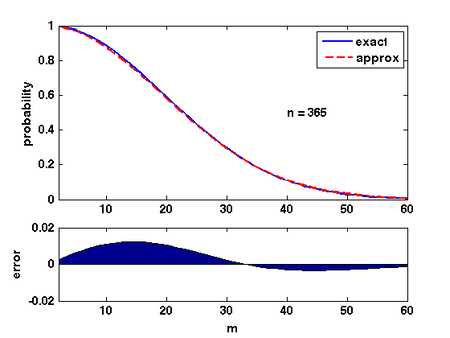

The quality of this approximation is shown in the Figure.

Therefore, for $m=\sqrt{2n\ln\frac{1}{\epsilon}}$, the probability $\Pr[\mathcal{E}]\approx\epsilon$.
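A floating-point sketch comparing the exact product with the approximation $e^{-m^2/2n}$ for $n=365$, and computing $m=\sqrt{2n\ln(1/\epsilon)}$; the choice of $m$ values and of $\epsilon=1/2$ is ours, for illustration.

```python
import math

def pr_distinct_exact(m, n):
    """prod_{k=1}^{m-1} (1 - k/n), in floating point."""
    return math.prod(1 - k / n for k in range(1, m))

def pr_distinct_approx(m, n):
    """The Taylor-based approximation exp(-m^2 / (2n))."""
    return math.exp(-m * m / (2 * n))

def students_for(eps, n):
    """m = sqrt(2 n ln(1/eps)), so that Pr[E] is approximately eps."""
    return math.sqrt(2 * n * math.log(1 / eps))

n = 365
for m in (10, 23, 40, 57):
    print(m, round(pr_distinct_exact(m, n), 4), round(pr_distinct_approx(m, n), 4))
print(students_for(0.5, n))  # about 22.5
```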

Coupon Collector

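Suppose that a chocolate company releases $n$ different types of coupons. Each box of chocolates contains one coupon of a uniformly random type. Once you have collected all $n$ types of coupons, you get a prize. How many boxes of chocolates do you expect to buy to win the prize?

The coupon collector problem can be described in the balls-into-bins model as follows. We keep throwing balls one by one into $n$ bins (coupons), such that each ball is thrown into a bin uniformly and independently at random. Each ball corresponds to a box of chocolates, and each bin corresponds to a type of coupon. Thus, the number of boxes bought to collect all $n$ coupons is the number of balls thrown until none of the $n$ bins is empty.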

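Theorem - Let $X$ be the number of balls thrown uniformly and independently into $n$ bins until no bin is empty. Then $\mathbf{E}[X]=nH(n)$, where $H(n)$ is the $n$-th harmonic number.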

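Proof. Let $X_i$ be the number of balls thrown while there are exactly $i-1$ nonempty bins; then clearly $X=\sum_{i=1}^nX_i$.

When there are exactly $i-1$ nonempty bins, the probability that a thrown ball increases the number of nonempty bins (i.e. lands in an empty bin) is
$$p_i=1-\frac{i-1}{n}.$$
$X_i$ is the number of balls thrown to increase the number of nonempty bins from $i-1$ to $i$, i.e. the number of balls thrown until one lands in a currently empty bin. Thus $X_i$ follows the geometric distribution with parameter $p_i$:
$$\Pr[X_i=k]=(1-p_i)^{k-1}p_i.$$

For a geometric random variable, $\mathbf{E}[X_i]=\frac{1}{p_i}=\frac{n}{n-i+1}$.

Applying the linearity of expectation,
$$\mathbf{E}[X]=\mathbf{E}\left[\sum_{i=1}^nX_i\right]=\sum_{i=1}^n\mathbf{E}[X_i]=\sum_{i=1}^n\frac{n}{n-i+1}=n\sum_{i=1}^n\frac{1}{i}=nH(n),$$

where $H(n)$ is the $n$-th harmonic number, and $H(n)=\ln n+O(1)$. Thus, for the coupon collector problem, the expected number of boxes required to obtain all $n$ types of coupons is $n\ln n+O(n)$.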
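The proof can be mirrored numerically. The following Python sketch (function names hypothetical, $n=50$ and the seed chosen arbitrarily) sums $\mathbf{E}[X_i]=\frac{n}{n-i+1}$ exactly, checks that the total equals $nH(n)$, and compares it with an empirical average from simulated ball throwing and with $n\ln n$.

```python
import math
import random
from fractions import Fraction

def harmonic(n):
    return sum(Fraction(1, i) for i in range(1, n + 1))

def expected_balls(n):
    """Sum of E[X_i] = n / (n - i + 1) over the phases i = 1..n."""
    return sum(Fraction(n, n - i + 1) for i in range(1, n + 1))

def balls_until_no_empty_bin(n, rng):
    """Throw balls uniformly at random into n bins until every bin is nonempty."""
    seen, throws = set(), 0
    while len(seen) < n:
        seen.add(rng.randrange(n))
        throws += 1
    return throws

n = 50
assert expected_balls(n) == n * harmonic(n)
rng = random.Random(1)
trials = [balls_until_no_empty_bin(n, rng) for _ in range(5_000)]
print(float(expected_balls(n)), sum(trials) / len(trials), n * math.log(n))
```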

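Knowing only the expectation is not good enough. We would also like to know how fast the probability decreases as the random variable deviates from its mean value.

Theorem - Let $X$ be the number of balls thrown uniformly and independently into $n$ bins until no bin is empty. Then $\Pr[X>n\ln n+cn]<e^{-c}$ for any $c>0$.

Proof. For any particular bin $i$, the probability that bin $i$ is still empty after throwing $n\ln n+cn$ balls is
$$\left(1-\frac{1}{n}\right)^{n\ln n+cn}<e^{-(\ln n+c)}=\frac{1}{ne^c}.$$
By the union bound, the probability that there exists an empty bin after throwing $n\ln n+cn$ balls is
$$\Pr[X>n\ln n+cn]<n\cdot\frac{1}{ne^c}=e^{-c}.$$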
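To see how loose the union bound is, the sketch below (our own illustration with $n=20$) computes the exact probability that some bin is still empty after $t=\lfloor n\ln n+cn\rfloor$ balls by inclusion-exclusion over the set of empty bins, $\sum_{j\ge1}(-1)^{j+1}\binom{n}{j}(1-j/n)^t$, next to the union bound $n(1-1/n)^t$ and $e^{-c}$. Since $X$ is an integer, $X>n\ln n+cn$ exactly when some bin is empty after $t$ balls.

```python
import math
from fractions import Fraction
from math import comb

def pr_some_bin_empty(n, t):
    """Exact Pr[some bin is empty after t balls], by inclusion-exclusion over sets of empty bins."""
    return sum(
        (-1) ** (j + 1) * comb(n, j) * Fraction(n - j, n) ** t for j in range(1, n + 1)
    )

def union_bound(n, t):
    """n * (1 - 1/n)^t, the bound used in the proof."""
    return n * Fraction(n - 1, n) ** t

n = 20
for c in (1, 2, 3):
    # X > n ln n + cn  iff  some bin is still empty after t = floor(n ln n + cn) balls
    t = math.floor(n * math.log(n) + c * n)
    exact, ub = pr_some_bin_empty(n, t), union_bound(n, t)
    print(c, float(exact), float(ub), math.exp(-c))
```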

Stable Marriage

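We now consider the famous stable marriage problem, or stable matching problem (SMP). This problem captures two aspects, allocation (matching) and stability, which are two central topics in economics.

An instance of stable marriage consists of:

- $n$ men and $n$ women;

- each person associated with a strictly ordered preference list containing all the members of the opposite sex.

Formally, let $M$ and $W$ be two disjoint sets with $|M|=|W|=n$, representing the men and the women. Each $m\in M$ has a preference list that is a permutation of $W$, and each $w\in W$ has a preference list that is a permutation of $M$.

A matching is a one-to-one correspondence (bijection) $\pi:M\to W$; for $m\in M$, $\pi(m)$ is the woman married to $m$, and for $w\in W$, $\pi^{-1}(w)$ is the man married to $w$.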

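Definition (stable matching) - A pair $(m,w)$ of a man $m\in M$ and a woman $w\in W$ is a blocking pair in a matching $\pi$ if $\pi(m)\neq w$, but $m$ prefers $w$ to $\pi(m)$ and $w$ prefers $m$ to $\pi^{-1}(w)$. A matching $\pi$ is a stable matching if it contains no blocking pair.

It is unclear from the definition alone whether stable matchings always exist, and how to find one efficiently. Both questions are answered by the following proposal algorithm due to Gale and Shapley.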

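The proposal algorithm (Gale-Shapley 1962) - Initially, nobody is married;

- in each step (called a proposal):

- an arbitrary unmarried man $m$ proposes to the most preferred woman $w$ on his list who has not yet rejected him;

- if $w$ is single, she accepts $m$'s proposal and $m$ and $w$ are married;

- if $w$ is married to $m'$ but prefers $m$ to $m'$, she rejects $m'$ and marries $m$;

- otherwise, $w$ rejects $m$.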

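The algorithm terminates when the last single woman receives a proposal. Since for every pair $(m,w)$ the proposal from $m$ to $w$ is made at most once, the algorithm terminates after at most $n^2$ proposals.

It is easy to see that the algorithm returns a matching, and that this matching must be stable. To see this, suppose by contradiction that the algorithm returns a matching $\pi$ in which a pair $(m,w)$ is blocking, i.e. $m$ prefers $w$ to $\pi(m)$ and $w$ prefers $m$ to $\pi^{-1}(w)$. Since $m$ proposes in the order of his preference list and ends up with $\pi(m)$, he must have proposed to $w$ at some point and been rejected by her, either immediately or later when she switched to another man. A woman rejects a man only in favor of a man she prefers, and her partner only improves afterwards, so $w$ prefers $\pi^{-1}(w)$ to $m$, a contradiction.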
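A minimal Python sketch of the proposal algorithm, assuming people are numbered $0,\ldots,n-1$ and each preference list is given as a Python list from most to least preferred (this representation and the function names are our own). The queue of free men plays the role of "an arbitrary unmarried man"; any order of choosing free men works. A separate brute-force check confirms that the returned matching has no blocking pair.

```python
from collections import deque

def gale_shapley(men_prefs, women_prefs):
    """Men-proposing algorithm. Returns (wife, proposals), where wife[m] is m's partner."""
    n = len(men_prefs)
    rank = [[0] * n for _ in range(n)]   # rank[w][m]: position of m on w's list
    for w, prefs in enumerate(women_prefs):
        for pos, m in enumerate(prefs):
            rank[w][m] = pos
    next_choice = [0] * n                # next position on m's list to propose to
    husband = [None] * n
    free_men = deque(range(n))
    proposals = 0
    while free_men:
        m = free_men.popleft()           # an arbitrary unmarried man
        w = men_prefs[m][next_choice[m]] # best woman who has not rejected him yet
        next_choice[m] += 1
        proposals += 1
        if husband[w] is None:           # w is single: she accepts
            husband[w] = m
        elif rank[w][m] < rank[w][husband[w]]:  # w prefers m: she rejects her partner
            free_men.append(husband[w])
            husband[w] = m
        else:                            # otherwise w rejects m
            free_men.append(m)
    wife = [None] * n
    for w, m in enumerate(husband):
        wife[m] = w
    return wife, proposals

def is_stable(wife, men_prefs, women_prefs):
    """True if no pair (m, w) prefers each other to their partners."""
    husband = {w: m for m, w in enumerate(wife)}
    for m, prefs in enumerate(men_prefs):
        for w in prefs:
            if w == wife[m]:
                break                    # remaining women are less preferred by m
            if women_prefs[w].index(m) < women_prefs[w].index(husband[w]):
                return False             # (m, w) is a blocking pair
    return True

men = [[0, 1, 2], [1, 0, 2], [0, 2, 1]]
women = [[1, 0, 2], [0, 1, 2], [2, 1, 0]]
wife, proposals = gale_shapley(men, women)
print(wife, proposals, is_stable(wife, men, women))  # [0, 1, 2] 4 True
```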

We are interested in the average-case performance of this algorithm, that is, the expected number of proposals if everyone's preference list is a uniformly and independently random permutation.

The following principle of deferred decisions is quite useful in analyzing the performance of algorithms with random input.

Principle of deferred decisions - The random choices that determine the random input can be deferred to the running time of the algorithm, i.e. each choice is made only when the algorithm first needs it.

Applying the principle of deferred decisions, the deterministic proposal algorithm with random permutations as input is equivalent to the following random process:

- At each step, a man $m$ proposes to a woman chosen uniformly at random from the women who have not yet rejected him.

We then compare the above process with the following modified process:

- The man $m$ proposes to a woman chosen uniformly and independently at random from all $n$ women, including those who have already rejected him.

It is easy to see that the modified process (sampling with replacement) is no more efficient than the original process (sampling without replacement), because it simulates the original process if, at each step, we count only the last proposal, the one made to a woman who has not rejected the man. Such a comparison of two random processes by forcing them to be related in some way is called coupling.

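Note that in the modified process (sampling with replacement), each proposal, no matter which man makes it, goes to a uniformly and independently random woman. We also know that the algorithm terminates once the last single woman receives a proposal, i.e. once all $n$ women have received at least one proposal. This is exactly the coupon collector problem with $n$ coupons, so the expected number of proposals in the modified process is $nH(n)=n\ln n+O(n)$, which is an upper bound on the expected number of proposals of the proposal algorithm with uniformly random preference lists.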
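The following simulation sketch (our own illustration; $n=50$, the number of trials, and the seed are arbitrary) runs the proposal algorithm on uniformly random preference lists and reports the average number of proposals next to $nH(n)$, the expected number of proposals in the modified process. Each woman's preference order is encoded by assigning the men uniformly random distinct ranks, which is equivalent to a uniformly random permutation.

```python
import random

def count_proposals(n, rng):
    """Run the proposal algorithm on uniformly random preference lists; return #proposals."""
    men = [rng.sample(range(n), n) for _ in range(n)]   # each man's list, best first
    rank = [rng.sample(range(n), n) for _ in range(n)]  # rank[w][m]: m's position for w
    next_choice, husband = [0] * n, [None] * n
    free, proposals = list(range(n)), 0
    while free:
        m = free.pop()                   # an arbitrary unmarried man
        w = men[m][next_choice[m]]
        next_choice[m] += 1
        proposals += 1
        if husband[w] is None:
            husband[w] = m
        elif rank[w][m] < rank[w][husband[w]]:
            free.append(husband[w])
            husband[w] = m
        else:
            free.append(m)
    return proposals

def harmonic(n):
    return sum(1 / i for i in range(1, n + 1))

rng = random.Random(7)
n, trials = 50, 300
avg = sum(count_proposals(n, rng) for _ in range(trials)) / trials
print(avg, n * harmonic(n))
```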

Occupancy Problem

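Now we ask about the loads of bins. Assume that $m$ balls are uniformly and independently assigned to $n$ bins. For $1\le i\le n$, let $X_i$ be the load of the $i$-th bin, i.e. the number of balls in the $i$-th bin.

An easy analysis shows that for every bin $i$, the expected load $\mathbf{E}[X_i]$ equals the average load $m/n$.

Because there are $m$ balls in total, it is always true that $\sum_{i=1}^nX_i=m$.

Therefore, by the linearity of expectation,
$$\sum_{i=1}^n\mathbf{E}[X_i]=\mathbf{E}\left[\sum_{i=1}^nX_i\right]=m.$$

Because each ball's bin is chosen uniformly and independently, the loads of all bins are identically distributed. Thus $\mathbf{E}[X_i]$ is the same for every $i$. Combined with the above equation, it holds that for every $1\le i\le n$, $\mathbf{E}[X_i]=\frac{m}{n}$.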
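A quick simulation sketch of the claim $\mathbf{E}[X_i]=m/n$, under our own choice of $m=30$ balls, $n=10$ bins and a fixed seed. It also illustrates that $\sum_iX_i=m$ holds in every single run, not just in expectation.

```python
import random

def loads(m, n, rng):
    """Throw m balls uniformly and independently into n bins; return the list of loads."""
    counts = [0] * n
    for _ in range(m):
        counts[rng.randrange(n)] += 1
    return counts

rng = random.Random(0)
m, n, trials = 30, 10, 20_000
totals = [0] * n
for _ in range(trials):
    run = loads(m, n, rng)
    assert sum(run) == m                 # sum of loads is exactly m in every run
    for i, x in enumerate(run):
        totals[i] += x
avg_loads = [t / trials for t in totals]
print([round(a, 2) for a in avg_loads])  # every entry close to m/n = 3
```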

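Next we analyze the distribution of the maximum load. We show that when $m=n$, i.e. $n$ balls are uniformly and independently thrown into $n$ bins, the maximum load is $O\left(\frac{\ln n}{\ln\ln n}\right)$ with high probability.

Theorem - Suppose that $n$ balls are thrown independently and uniformly at random into $n$ bins. For $1\le i\le n$, let $X_i$ be the random variable denoting the number of balls in the $i$-th bin. Then, for sufficiently large $n$,
$$\Pr\left[\max_{1\le i\le n}X_i\ge\frac{3\ln n}{\ln\ln n}\right]<\frac{1}{n}.$$

Proof. Let $M$ be an integer. Consider bin 1. For any particular set of $M$ balls, all of them are thrown into bin 1 with probability $(1/n)^M$, and there are $\binom{n}{M}$ distinct sets of $M$ balls. Therefore, by the union bound,
$$\Pr[X_1\ge M]\le\binom{n}{M}\left(\frac{1}{n}\right)^M=\frac{n!}{M!(n-M)!\,n^M}=\frac{1}{M!}\cdot\frac{n(n-1)(n-2)\cdots(n-M+1)}{n^M}=\frac{1}{M!}\prod_{i=0}^{M-1}\left(1-\frac{i}{n}\right)\le\frac{1}{M!}.$$
According to Stirling's approximation, $M!\approx\sqrt{2\pi M}\left(\frac{M}{e}\right)^M$, thus
$$\frac{1}{M!}\le\left(\frac{e}{M}\right)^M.$$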

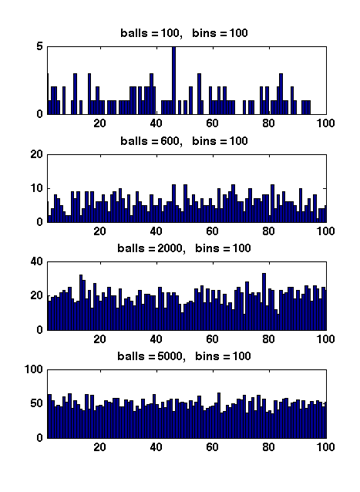

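Figure 1

Due to symmetry, all $X_i$ have the same distribution. Applying the union bound again,
$$\Pr\left[\max_{1\le i\le n}X_i\ge M\right]=\Pr[(X_1\ge M)\vee(X_2\ge M)\vee\cdots\vee(X_n\ge M)]\le n\Pr[X_1\ge M]\le n\left(\frac{e}{M}\right)^M.$$

When $M=\frac{3\ln n}{\ln\ln n}$ and $n$ is sufficiently large,
$$\left(\frac{e}{M}\right)^M=\left(\frac{e\ln\ln n}{3\ln n}\right)^{\frac{3\ln n}{\ln\ln n}}<\left(\frac{\ln\ln n}{\ln n}\right)^{\frac{3\ln n}{\ln\ln n}}=e^{3(\ln\ln\ln n-\ln\ln n)\frac{\ln n}{\ln\ln n}}=e^{-3\ln n+3\ln\ln\ln n\cdot\frac{\ln n}{\ln\ln n}}\le e^{-2\ln n}=\frac{1}{n^2}.$$

Therefore,
$$\Pr\left[\max_{1\le i\le n}X_i\ge\frac{3\ln n}{\ln\ln n}\right]\le n\left(\frac{e}{M}\right)^M<n\cdot\frac{1}{n^2}=\frac{1}{n}.$$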
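The sketch below (illustrative only; the values of $n$ and the seed are chosen by us) evaluates the threshold $M=\frac{3\ln n}{\ln\ln n}$ and the bound $n(e/M)^M$ from the proof, and compares $M$ with the maximum load observed in one simulated run. For these values of $n$ the bound is already below $1/n$.

```python
import math
import random

def max_load(n, rng):
    """Max load after throwing n balls uniformly and independently into n bins."""
    counts = [0] * n
    for _ in range(n):
        counts[rng.randrange(n)] += 1
    return max(counts)

def threshold(n):
    """M = 3 ln n / ln ln n from the theorem."""
    return 3 * math.log(n) / math.log(math.log(n))

def union_bound(n, M):
    """n * (e / M)^M, the bound on Pr[max load >= M] derived in the proof."""
    return n * (math.e / M) ** M

rng = random.Random(42)
for n in (10**3, 10**4, 10**5):
    M = threshold(n)
    print(n, round(M, 2), union_bound(n, M), max_load(n, rng))
```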

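When $m>n$, the same union-bound argument still applies, with $\binom{m}{M}$ sets of $M$ balls in place of $\binom{n}{M}$:
$$\Pr[X_1\ge M]\le\binom{m}{M}\left(\frac{1}{n}\right)^M\le\left(\frac{em}{nM}\right)^M.$$

Formally, it can be proved that for $m=\Omega(n\log n)$, with high probability the maximum load is $O\left(\frac{m}{n}\right)$, which is asymptotically equal to the average load.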