随机算法 (Fall 2015)/Mixing Time and Coupling

Mixing Time

The mixing time of a Markov chain gives the rate at which a Markov chain converges to the stationary distribution. To rigorously define this notion, we need a way of measuring the closeness between two distributions.

Total Variation Distance

In probability theory, the total variation distance measures the difference between two probability distributions.

Definition (total variation distance) - Let

- where

- Let

It can be verified (left as an exercise) that

thus the total variation distance can be equivalently defined as

So the total variation distance between two distributions gives an upper bound on the difference between the probabilities of the same event according to the two distributions.

Mixing Time

Definition (mixing time) - Let

- Let

We note that

Definition (mixing time) - The mixing time

- The mixing time

The mixing time is the time until the total variation distance to the stationary distribution, starting from the worst possible initial state

Proposition

So the distance to stationary distribution

Both the formal proofs of the monotonicity of

Coupling

We will discuss how to bound the mixing time by coupling two Markov chains. Before that, we will first formally introduce the coupling of two probability distributions.

Coupling of Two Distributions

Coupling is a powerful proof technique in probability theory. It allows us to compare two unrelated variables (or processes) by forcing them to share some randomness.

Definition (coupling) - Let

- Let

The interesting case is when

Lemma (coupling lemma) - Let

- For any coupling

- There exists a coupling

- Let

Proof. - 1.

Suppose

Then for any

Therefore,

- 2.

We can couple

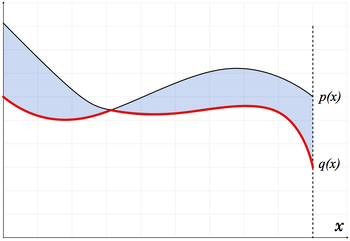

The proof is depicted by the following figure, where the curves are the probability density functions for the two distributions

Monotonicity of

Consider a Markov chain on state space

We will show that

Proposition

Proof. Let

For any fixed

- If

- Otherwise, do the transitions

It is easy to see that the marginal distributions of

In conclusion, it holds that

where the first inequality is due to the easy direction of the Coupling Lemma.

- If

Rapid Mixing by Coupling

Consider an ergodic (irreducible and aperiodic) Markov chain on finite state space

- Consider two random walks starting at state

- where we recall that

Definition (coupling of Markov chain) - A coupling of a Markov chain with state space

- each of

- once

- A coupling of a Markov chain with state space

Lemma (coupling lemma for Markov chains) - Let

- Let

Proof. Due to the coupling lemma (for probability distributions),

where

Therefore,

Corollary - Let

- Let

Geometric convergence

Consider a Markov chain on state space

We prove that

Proposition

Proof. - 1.

We denote that

- If

- For the case that

For any

Thus

Then by induction,

and this holds for any

- 2.

By the definition of

Application: Random Walk on the Hypercube

A

We use coupling to bound the mixing time of the following simple random walk on a hypercube.

Random Walk on Hypercube - At each step, for the current state

- with probability

- else, pick a coordinate

- At each step, for the current state

This is a lazy uniform random walk in an

Random Walk on Hypercube - At each step, for the current state

- pick a coordinate

- let

- At each step, for the current state

It is easy to see the two random walks are the same. The second form hint us to couple the random choice of

- At each step,

Obviously the two individual random walks are both faithful copies of the original walk.

For arbitrary

Thus for any

which implies that

So the random walk achieves a polynomially small variation distance

Note that the number of states (vertices in the

Card Shuffling

We consider the Markov chain of shuffling a deck of cards, and prove the rapid mixing of the chain by coupling.

Riffle Shuffle

The following is the Gilbert-Shannon-Reeds model of riffle shuffle introduced by Gilbert and Shannon in 1955 and independently by Reeds in 1985.

Riffle Shuffle (Gilbert-Shannon 1955; Reeds 1985) - Given a deck of

- Split the original deck into two decks according to the binomial distribution

- Cut off the first

- Cut off the first

- Drop cards in sequence, where the next card comes from one of the two decks with probability proportional to the size of the deck at that time.

- Suppose at a step there are

- Suppose at a step there are

- Given a deck of

The second step actually samples a uniform interleaving of left and right deck. The magician and mathematician Diaconis in 1988 found evidence showing that this mathematical model reasonably approximate the riffle shuffling acted by human.

The above model is equivalent to the following model of inverse riffle shuffle.

Inverse Riffle Shuffle - Label each card with a bit from

- Place all cards labeled with 0 above all cards labeled with 1, keeping the cards with the same label in the same the relative order as before.

- Label each card with a bit from

The process of inverse riffle shuffle is depicted as follows.

For each card, the random bits used for labeling the card compose a binary code

Lemma - After every round of the inverse riffle shuffle, the cards are sorted in non-decreasing order of their corresponding binary codes.

Proof. By induction. After the first round, all

Rapid Mixing of Riffle Shuffle by Coupling

Theorem - The mixing time of inverse riffle shuffle of a deck of

- The mixing time of inverse riffle shuffle of a deck of

Consider two instance of shuffling, started with two decks, each in an arbitrary order. We couple the two shuffling processes by coupling their random choices of labelings:

- In each round, we label the same cards (same content, not the same order) in the two decks with the same random bit.

Formally, for each

Lemma - With the above coupling rule, started with arbitrary two decks of cards, the two decks are the same once all cards in the first (or the second) deck have distinct labels.

Proof. By the definition of the coupling rule, any two identical cards in the two decks always have the same label, since in every round they choose the same random bit. And we have shown that after each round, the labels are sorted in non-decreasing order. Thus, we can conclude:

- after each round, the sequences of the labels of the two decks are identical (and sorted in non-decreasing order).

Therefore, when all cards in the first deck have distinct labels, the second deck have the same set of distinct labels, and the cards are sorted increasingly in their labels in the respective decks. Since any two identical cards have the same labels, the cards in the two decks must be in the same order.

We denote by

This bound can be deduced from the birthday problem. After

Note that the state space is the set of all permutations, which is of size

This estimation of mixing time is fairly tight. For the case that

1 2 3 4 5 6 7 8 9 1.000 1.000 1.000 1.000 0.924 0.614 0.334 0.167 0.003